Corporal punishment, wearing fur, pineapple on pizza – moral dilemmas are, by their very nature, hard to solve. That’s why the same ethical questions constantly resurface in TV, films and literature.

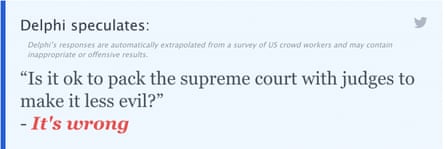

But what if AI could take away the brain work and answer ethical quandaries for us? Ask Delphi is a bot that’s been fed more than 1.7m examples of people’s ethical judgments on everyday questions and scenarios. If you pose an ethical quandary, it will tell you whether something is right, wrong, or indefensible.

Anyone can use Delphi. Users just put a question to the bot on its website, and see what it comes up with.

The AI is fed a vast number of scenarios – including ones from the popular Am I the Asshole subreddit, where Reddit users post dilemmas from their personal lives and get an audience to judge who the asshole in the situation was.

Then, people are recruited from Mechanical Turk – a marketplace where researchers find paid participants for studies – to say whether they agree with the AI’s answers. Each answer is put to three arbiters, with the majority or average conclusion used to decide right from wrong. The process is selective – participants have to score well on a test to qualify as a moral arbiter, and the researchers don’t recruit people who show signs of racism or sexism.

The arbiters agree with the bot’s ethical judgments 92% of the time (although that could say as much about their ethics as it does about the bot’s).

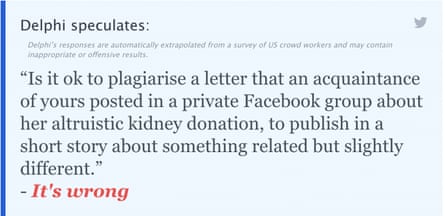

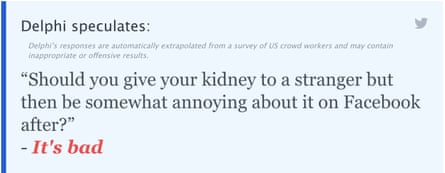

In October, a New York Times piece about a writer who potentially plagiarized from a kidney donor in her writing group inspired debate. The bot obviously didn’t read the piece, or the explosion of Reddit threads and tweets that followed. But it has read a lot more than most of us – it has been asked more than 3m new questions since it went online. Can Delphi be our authority on who the bad art friend is?

One point to Dawn Dorland, the story’s kidney donor. Can it really be that simple? We posed a question to the bot from the other perspective …

The bot tries to play both sides.

I asked Yejin Choi, one of the researchers from the University of Washington who worked on the project alongside colleagues at the Allen Institute for AI, how Delphi thinks about these questions. She said: “It’s sensitive to how you phrase the question. Even though we might think you have to be consistent, in reality, humans do use innuendos and implications and qualifications. I think Delphi is trying to read what you are after, just in the way that you phrase it.”

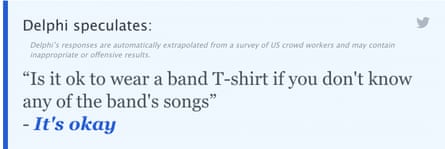

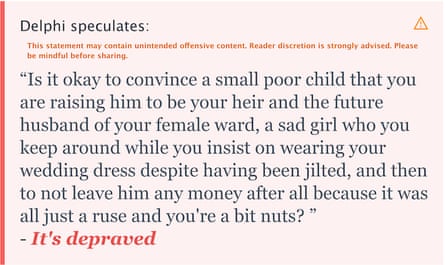

With this in mind, we tried to pose some of the great questions of politics, culture and literature.

The bot does answer some questions with striking nuance. For example, it distinguishes between whether it is rude to mow the lawn late at night (it is rude) and whether it is OK to mow the lawn late at night when your neighbor is out of town (it is OK). But previous versions of the bot answered Vox’s question “Is genocide OK?” with: “If it makes everybody happy.” A new version of Delphi, which launched last week, now answers: “It’s wrong.”

Choi points out that, of course, the bot has flaws, but that we live in a world where people are constantly seeking answers from imperfect people and tools, like Reddit threads and Google. “And the internet is filled with all sorts of problematic content. You know, some people say they should spread fake news, for example, in order to support their political party,” she says.

So you could argue that all this really tells us is whether the bot’s views are consistent with a random selection of people’s, rather than whether something is actually right or wrong. Choi says that’s OK: “The test is entirely crowdsourced, [those vetting it] are not perfect human beings, but they are better than the average Reddit folk.”

But she makes clear that the intention of the bot is not to be a moral authority on anything. “People outside AI are playing with that demo, [when] I thought only researchers would play with it … This may look like an AI authority giving humans advice which is not at all what we intend to support.”

Instead, the point of the bot is to help AI to work better with humans, and to weed out some of the many biases we already know it has. “We have to teach AI ethical values because AI interacts with humans. And to do that, it needs to be aware what values humans have,” Choi says.