This piece is part of Gizmodo’s ongoing effort to make the Facebook Papers available to the public. See the full directory of documents here.

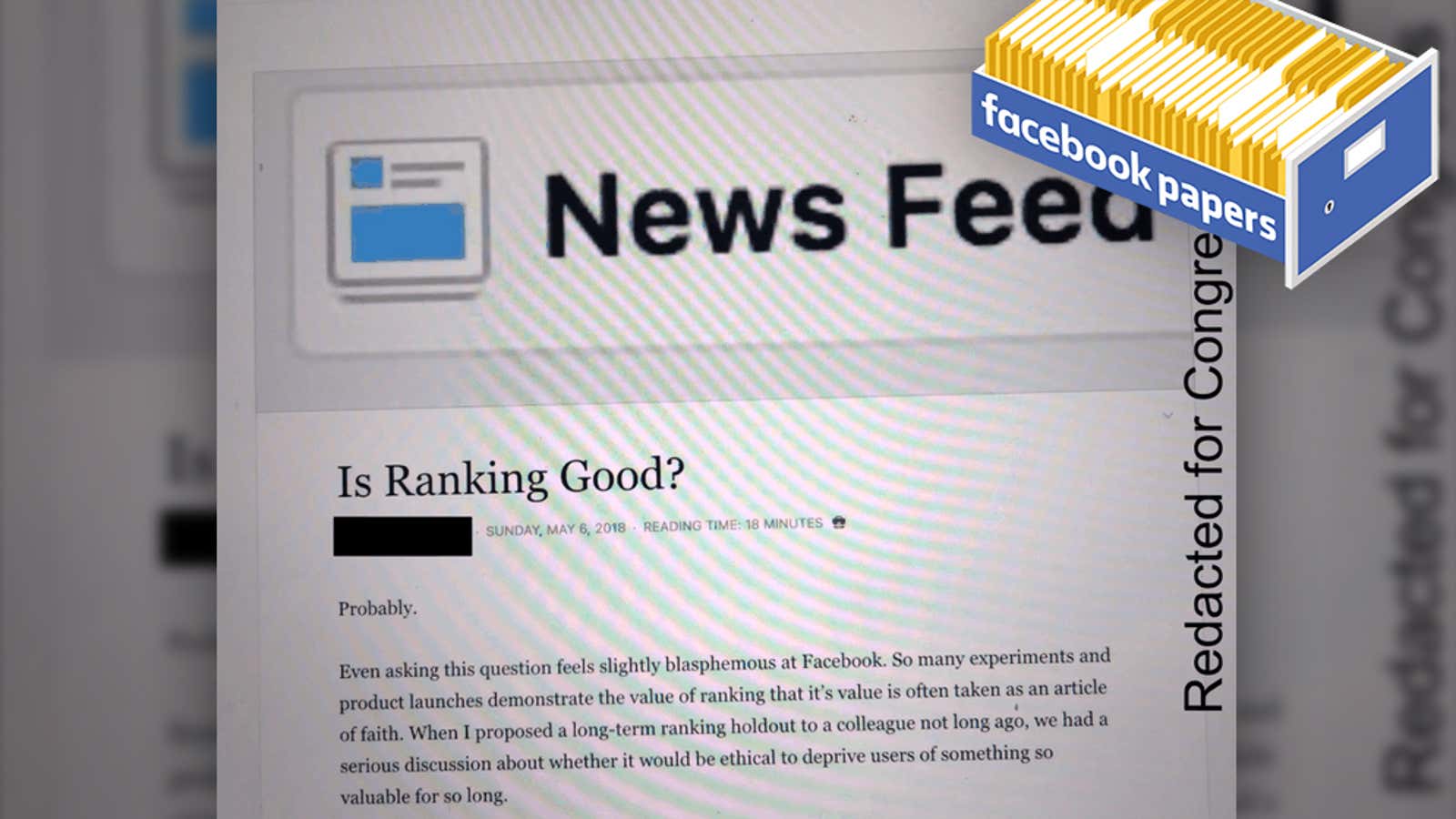

In a presentation dated May 6, 2018, a Facebook employee asked, “Is Ranking Good?”

“Probably. Even asking the question feels slightly blasphemous at Facebook,” the same employee answered in smaller text below. “So many experiments and product launches demonstrate the value of ranking that it’s [sic] value is often taken as an article of faith.”

“Ranking” refers to how the world’s biggest social network prioritizes what content users see in the News Feed, Facebook’s flagship product. The list of posts that users see when they first open Facebook was once in simple chronological order; now algorithms designed by the company arrange the posts based on a value assigned to each one. The employee struck at the heart of a central question within Facebook, now known as Meta: How to balance maximizing profit and growth against the risk of encouraging behaviors the algorithms’ designers knew weren’t healthy. The company’s ranking algorithms increased how much time users spend on Facebook, but employees found they fostered worse friendships, according to the slideshow. So is ranking good? Said another way: Is ranking worth its consequences?

The presentation is part of the Facebook Papers, a trove of documents that offer an unprecedented look inside the most powerful social media company in the world. The records were first provided to Congress last year by Frances Haugen, a Facebook product manager-turned-whistleblower, and later obtained by hundreds of journalists, including those at Gizmodo. Haugen testified before Congress about Facebook’s harms in October 2021.

Today, as part of a rolling effort to make the Facebook Papers available publicly, Gizmodo is releasing a second batch of documents—37 files in all. In our first drop, we shared 28 files related to the 2020 election and the Jan. 6 attack on the U.S. Capitol. Only a few of the pages had ever been shown to the public before. Gizmodo has partnered with a group of independent experts to review, redact, and publish the documents. This committee advises and monitors our work and helps facilitate the responsible disclosure of the greatest possible number of documents in the public interest. We believe in the value of open access to these materials. Our collective goal is to minimize any potential harms that could result from the disclosure of certain methods by which Meta tackles sensitive issues like sex trafficking, disinformation, and voter manipulation.

Today’s batch offers insight into how Meta chooses to rank the content submitted by its users. It’s a system that very few people seem to understand, and a problem the company appears to have few clues about how to solve. Choosing these documents as a follow-up to those on the most important political events of the past two years speaks to how highly we rate their relevance to understanding Facebook’s effects on the world.

These documents offer an unfiltered, if fragmented, look at years’ worth of attempts by Facebook to assign emotional value to every swipe and click in its app. This process, which has an outsized impact on the kinds of information most frequently seen on the platform, is known as ranking. It underpins one of Facebook’s core features: the News Feed (or just the “Feed,” as the company now prefers we call it).

Several key documents concern what Facebook calls “meaningful social interactions,” a term introduced by the company in Jan. 2018. This metric, as CEO Mark Zuckerberg explained at the time, was meant to help prioritize “personal connections” over an endless online dribble of viral news and videos. This “major change,” as he put it, was framed as an effort to put first the “happiness and health” of the user—Facebook’s way of encouraging users to check Facebook more often by dialing up the feeling of personal connection to their Feeds.

What constitutes a meaningful social interaction? Meta’s phrasing seems intentionally opaque: It could be sharing a cold beer with an old friend or maybe a meal with the whole family under the same roof. To Facebook, it’s a math problem; a way of training a computer to assign some degree of worth to the most benign online behaviors—putting a sticker, for instance, on a random weirdo’s post.

Zuckerberg has denied before Congress that “increasing the amount of time people spend” staring at their feeds has ever been Facebook’s goal. It’s a claim that flies in the face of everything we know about his business and his own top deputy’s words. In fact, Meta’s financial disclosures have for years warned investors of the following: “If we fail to retain existing users or add new users, or if our users decrease their level of engagement with our products, our revenue, financial results, and business may be significantly harmed.” (Emphasis ours.) In February, Facebook lost hundreds of billions of dollars in stock market value on reports that its active user count dropped for the first time ever. The company has every motivation to acquire new users and increase the time existing users spend on the social network.

On the subject of ranking, the documents below contain an admission from one employee that is indicative of Facebook’s quandary of growth vs. user health. The employee wrote that the evidence favoring ranking is “extensive, and nearly universal.” People like ranked feeds more than chronological ones. According to the employee, “usage and engagement immediately drops” wherever the Feed’s ranking is disabled. The increased usage comes at a price, though. The quality of the user’s overall experience declines. The employee goes on to argue that, though the modified feeds undeniably boost “consumption”—internal Facebook code for time spent using Facebook—they also change the dynamics of “friending” to discourage “personal sharing.”

“The better the ranking algorithm, the lower the cost of ‘bad’ friendships,” the employee writes, noting that users who lack shared interests are unlikely to see each other’s posts very often. “By reducing the cost of friending close to zero, ranking changes the semantics of friending from ‘I care about you’ to ‘I might conceivably care about something you share someday.’”

In other words, ranking encourages the sharing of fewer meaningful posts, while allowing “bad content to spread farther due to the costless accumulation of friends,” according to the presentation. The sentiment is not universal within Facebook, however: some employees disagreed in the comments.

What these documents show us is that, for all of Facebook’s hand-wringing over what it thinks is “meaningful” to users, or “worthy of their time,” internally, employees view ranking as far too complex, too incomprehensible, to ever get the job done right. They also know it’s a system on which Facebook’s future depends.

Ranking-Related Explainers

A document where one employee waxes poetic about the “purpose” of feeds, for both Facebook users and the world at large.

Another document waxing poetic. Spoiler: the answer is “sometimes, for some people.”

Ranking-Related Platform and Product Updates

A document announcing a new ranking experiment meant to reduce “inflammatory content and misinformation” in high-risk countries, like Sri Lanka and Ethiopia.

A document announcing a new ranking experiment regarding “civic” or political content, meant to bump up more content that’s “worth user’s time.”

Ranking-Related Proposals

This document proposes a new, somewhat esoteric way to think internally about “producers” and “consumers” of content in people’s feeds.

Papers Discussing “Demotions” in Feeds

An explainer from 2020 on some of the relatively new ways the company measures the overall effectiveness of demotions.

An internal flag from 2021 noting that certain demotions aren’t as accurate as they should be, in part due to the current system’s reliance on URLs.

A document describing potential strategies for being more transparent about demotions.

A document explaining why transparency about demotions in people’s feeds doesn’t necessarily make users understand how “legitimate” or “justified” those demotions are.

An internal notice clarifying how “transparent” the company should be about content demotions in the Global South. The answer is “not very!”

An internal flag about restrictions, “shadowbans,” and demotions disproportionately hitting content discussing Palestine.

A document where one employee discusses what it means to be “silenced” and to “have a voice” on the platform with regard to ranking.

The results of an experiment where the company tested out demoting “troll-like” comments on people’s posts.

A document describing the tradeoffs between content that’s worth people’s time (via internal metrics), versus content being demoted.

A timeline of the company’s myriad demotion experiments from the second half of 2020.

A 2020 document announcing an upcoming ranking change to political health-related content.

An internal audit of the company’s existing work in the demotions space.

A document describing how the company should measure and minimize the “collateral damage” that can happen to people’s feeds from demotions.

Potential ideas for demotions that might dissuade bad actors from posting bad, demotion-worthy content.

Papers Discussing “Meaningful Social Interactions” (MSI)

- MSI Metric Note Series

- The Meaningful Social Interactions Metric Revisited: Part 2

- The Meaningful Social Interactions Metric Revisited: Part 4

- The Meaningful Social Interactions Metric Revisited: Part 5

A series of notes explaining everything about “MSI.” Parts one and three contain sensitive information about Meta, so they have been omitted.

An internal list of “useful links” to explain what “meaningful social interactions” are, and how the company measures them.

A short brief explaining some of the basics of how “meaningful-ness” gets defined on a post-by-post basis.

- Evaluating MSI Metric Changes with a Comment-Level Survey

- Surveying The 2018 Relevance Ranking Holdout

Two docs describing an internal experiment conducted after the company tweaked its internal MSI metric in mid-2018, specifically looking at users’ overall response.

An overview of how MSI gets measured for pages small and large.

A note questioning how much “spammy” posting behaviors contribute to the company’s definition of “meaningful social interactions.”

A note describing how the company plans to stop “engagement-bait” (also known as clickbait), “bullying,” and spammy comment behavior from contributing to the MSI metric.

An internal test of reducing the impact that “angry” reacts have on ranking and demotions.

Memos from 2020 announcing upcoming changes to the way MSI is measured, meant to capture more “meaningful” or “useful” posts and comments.

An internal open forum asking whether the company should rethink how it measures “stickers” in comments, and perhaps make them less “meaningful.”

A note detailing the results of an experiment on the way “resharing” gets calculated in terms of MSI.

Miscellaneous Papers

An employee lamenting the loss of an internal tool that helped those within Facebook understand a given post’s ranking.

A post where an employee discusses Facebook’s “responsibility” in developing ranking algorithms.

Click here to read all the Facebook Papers we’ve released so far.