Meta Reality Labs Research: Codec Avatars 2.0 Approaching Complete Realism with Custom Chip

Researchers at Meta Reality Labs report that their work on Codec Avatars 2.0 has reached a level where the avatars are approaching complete realism. The researchers built a prototype virtual reality headset with a custom accelerator chip designed to handle the AI processing needed to render Meta’s photorealistic Codec Avatars on standalone virtual reality headsets.

The prototype Virtual Reality avatars use very advanced machine learning techniques.

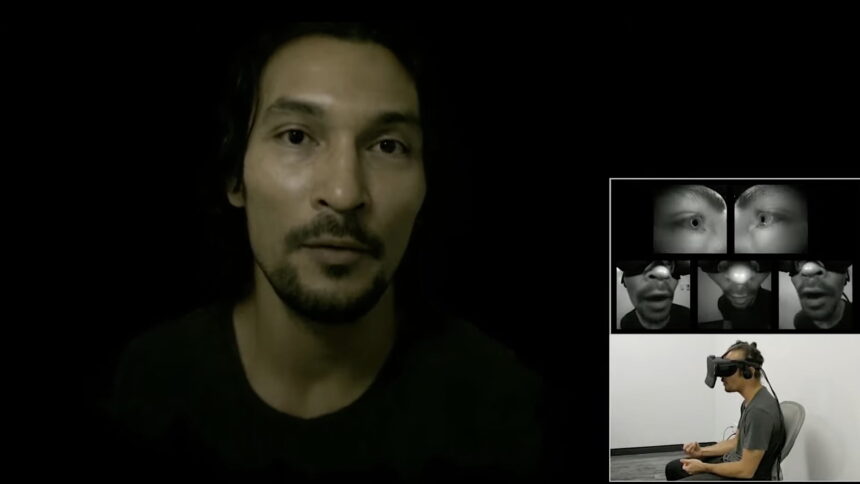

Meta first showcased its work on Codec Avatars back in March 2019. The avatars are powered by multiple neural networks and are generated using a special capture rig that contains 171 cameras. Once generated, they are driven in real time by a prototype virtual reality headset that has five cameras. Two cameras are internal, each viewing one eye, while three are external and view the lower face. It is thought that such advanced, photoreal avatars may one day replace video conferencing.

Since then, Meta Reality Labs researchers have showcased various evolutions of the avatar system, such as more realistic eyes and a version that needs only eye tracking and microphone input.

The Codec Avatars project began even before the company’s name change and rebranding from Facebook to Meta. The goal of the project is to create almost photorealistic avatars in virtual reality. The project uses on-device sensors for eye tracking and mouth tracking, together with AI processing, to animate a detailed, realistic render of the user in real time.

Such AI processing shouldn’t be a problem in high-end PC hardware but what about in virtual reality headsets with mobile chipsets?

The first iterations of Meta’s Codec Avatars research relied on the powerful NVIDIA Titan X GPU. This is a considerably more powerful processor than the Snapdragon chip in Meta’s latest standalone virtual reality headset, Meta Quest 2.

Users put on a prototype virtual reality headset that uses its five sensors to record both eye and mouth movements. An AI model then generates a realistic avatar from this data in real time. The avatar reproduces eye movements and fine facial expressions.

The users must be scanned beforehand inside a very sophisticated 3D studio. The research team working on the Codec Avatar is hoping that as technology evolves, it will be possible to create these high-quality 3D scans in the future without the involvement of studios. For instance, it should be possible for one to generate such scans by scanning their faces using their smartphones.

Meta is currently using comic-style avatars on its platforms due to the severe processing power limitations of its headsets, including Meta Quest 2.

Enter Codec Avatars 2.0

Over the past few years, Meta has been gradually refining the Codec Avatars by optimizing the AI algorithm and by giving the avatars more realistic-looking eyes.

In an MIT workshop, the project’s team leader Yaser Sheikh presented the team’s latest research and showed a brief video demonstrating the team’s latest iteration of the photorealistic avatars, dubbed “Codec Avatars 2.0.”

The scientific paper is from last year and details the researchers’ recent AI advances. According to the paper, the neural network computes only an avatar’s visible pixels, which allows up to five Codec Avatars to be rendered at 50 frames per second on the standalone Quest 2 headset.
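As a rough illustration of what that figure implies, the arithmetic below converts a frame rate into a per-frame time budget and splits it across avatars. The function names are hypothetical, and the even, sequential split is an assumption made for illustration only; the paper may well batch or overlap the work.

```python
def frame_budget_ms(fps: float) -> float:
    """Time available to produce one frame, in milliseconds."""
    return 1000.0 / fps


def per_avatar_budget_ms(fps: float, avatars: int) -> float:
    """Even split of the frame budget.

    Assumes avatars are decoded one after another and share the frame
    equally (an illustrative assumption, not a claim from the paper).
    """
    return frame_budget_ms(fps) / avatars


if __name__ == "__main__":
    print(frame_budget_ms(50))          # 20.0
    print(per_avatar_budget_ms(50, 5))  # 4.0
```

Under these assumptions, five avatars at 50 frames per second leaves about 4 milliseconds per avatar per frame, which gives a sense of why skipping pixels that are not visible matters on a mobile chipset.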

Sheikh says it’s still difficult to predict when this avatar technology might be production-ready. During the workshop, he said the project was “ten miracles” away from the prospect of commercialization when it began and that now it was just “five miracles” away.

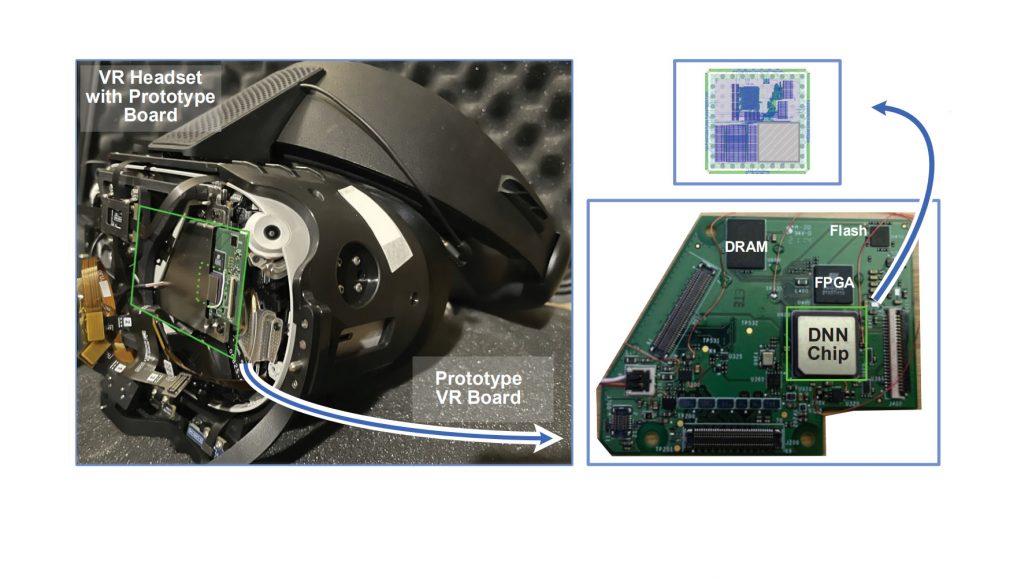

Meta has now cracked how to make the sophisticated Codec Avatars realizable in the lower-powered standalone VR headsets according to a paper the company published during last month’s 2022 IEEE CICC conference. In the paper, Meta describes how it created a custom chip that was developed via a 7nm process to work as an accelerator uniquely tailored for the Codec Avatars.

A Specially Made Chip

The researchers say the chip was custom-made. The team designed it around the Codec Avatars processing pipeline, specifically the stage that analyzes incoming eye-tracking images and generates the data the Codec Avatars model needs. The chip has a footprint of just 1.6 square millimeters.

The test chip was fabricated on a 7nm technology node and contains a Neural Network (NN) accelerator with a 1024 Multiply-Accumulate (MAC) array, a 32-bit RISC-V CPU, and 2MB of on-chip SRAM.

The researchers also developed a part of the Codec Avatars model to leverage the specific architecture of the chip.

They re-architected the convolutional eye gaze extraction model and tailored it to the custom hardware they developed. According to the researchers, the whole model fits on the chip, which reduces the system energy and latency costs associated with off-chip memory accesses. The Reality Labs researchers write that the prototype achieves 30 frames per second in a small form factor and at low power by efficiently accelerating the convolution operation at the circuit level.
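The two constraints described here, a fixed MAC array and a model that must fit entirely in on-chip SRAM, can be sketched as simple back-of-the-envelope checks. Only the 1024-MAC array, the 2MB SRAM, and the 30 frames per second come from the article. The clock frequency, parameter count, weight width, and activation size below are hypothetical placeholders, and the function names are made up for this sketch.

```python
MAC_UNITS = 1024               # MAC array size reported in the article
SRAM_BYTES = 2 * 1024 * 1024   # "2MB" read as 2 MiB (assumption)


def mac_budget_per_frame(clock_hz: int, fps: int, mac_units: int = MAC_UNITS) -> int:
    """Upper bound on MACs available per frame.

    Assumes one MAC per unit per cycle at full utilization, which real
    workloads rarely reach.
    """
    return (mac_units * clock_hz) // fps


def fits_on_chip(param_count: int, bytes_per_param: int,
                 activation_bytes: int, sram_bytes: int = SRAM_BYTES) -> bool:
    """True if weights plus working activations fit in on-chip SRAM."""
    return param_count * bytes_per_param + activation_bytes <= sram_bytes


if __name__ == "__main__":
    # Hypothetical 300 MHz clock; the article does not give a frequency.
    print(mac_budget_per_frame(300_000_000, 30))   # 10240000000
    # Hypothetical 1.5M-parameter model with 400 KB of activations.
    print(fits_on_chip(1_500_000, 1, 400_000))     # True  (8-bit weights)
    print(fits_on_chip(1_500_000, 2, 400_000))     # False (16-bit weights)
```

The second check shows why a model might need to be re-architected for the hardware: with these placeholder numbers, the same network fits on chip with 8-bit weights but spills to off-chip memory with 16-bit weights.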

The chip accelerates an intensive portion of the Codec Avatars workload thereby enabling it to speed up the process while at the same time cutting down on the heat and power required. It accomplishes this more efficiently than a general-purpose CPU because of its custom design. This design also dictated the rearchitected software design of the Codec Avatars’ eye-tracking component.

However, the chip forms just one part of the pipeline for rendering hyper-realistic avatars. The general-purpose processor of the standalone headset (such as the Snapdragon XR2 chip in Quest 2) handles the rest: the custom chip from Meta Reality Labs deals only with a part of the Codec Avatars encoding process, while the XR2 chip does the decoding and also renders the actual avatar visuals.

This was a multidisciplinary project. The paper credits a number of researchers who worked on the project including Tony F. Wu, Eli Murphy-Trotzky, H. Ekin Sumbul, William Koven, Yuecheng Li, Daniel H. Morris, Edith Beigne, Syed Shakib Sarwar, Doyun Kim, and Huichu Liu, all of them from Meta Reality Labs.

The fact that Meta’s Codec Avatars can now run on a standalone virtual reality headset is quite an impressive feat, even though the setup still requires a specialty chip. However, it still isn’t clear how well the system handles the visual rendering of avatars. Users’ underlying scans are richly detailed and could be too complex to be fully rendered on the Quest 2 headset. Although all the underlying pieces help to drive the animations, it isn’t clear yet how much of the ‘photorealism’ of the Codec Avatars is preserved.

This research illustrates a practical application of the new computing architecture that Michael Abrash, Reality Labs’ Chief Scientist, has said is required to bring XR to full maturity. Abrash has said in the past that a shift away from highly centralized processing toward more distributed processing is critical to meeting both the power and the performance requirements of future headsets capable of rendering the kind of complete realism that Meta Reality Labs is pursuing with Codec Avatars.

The use of chips that have been specially designed to accelerate certain functions, as seen in this research, could have major benefits in different kinds of XR applications. For instance, spatial audio is currently a major wish-list item in XR because it adds immersion, but simulating realistic sound is computationally expensive and consumes a lot of power. A fluid XR experience also requires hand tracking and positional tracking, and these could see considerable speed and power gains if hardware and algorithms were designed together to optimize them.

Source: https://virtualrealitytimes.com/2022/05/05/meta-reality-labs-research-codec-avatars-2-0-approaching-complete-realism-with-custom-chip/ (Sam Ochanji, Virtual Reality Times)