Data transfer between MySql and Cassandra using Sqoop

Integration of sqoop in DataStax Enterprise 2.0+

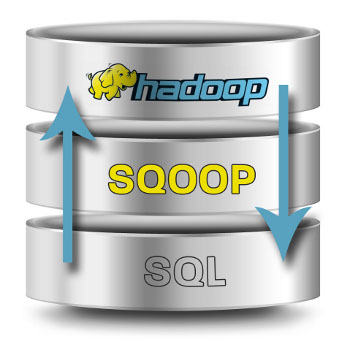

Sqoop is an Apache Software Foundation tool for transferring data between an RDBMS data source and Hadoop or between other data sources, such as NoSQL.

DataStax Enterprise support for Sqoopempowers you to import data from an external data source to Hadoop, Hive, or Cassandra column families. A DSE node runs the Hadoop/Analytics workload, and the Hadoop job imports data from a data source using Sqoop.

You can import data into Cassandra from any JDBC-compliant data source. For example:

- DB2

- MySQL

- Oracle

- SQL Server

- Sybase

Here we will talk about transfer of data from MySQL to Cassandra.You need a JDBC driver for the RDBMS or other type of data source, from where you want to transfer the data into Cassandra.

Data transfer between MySQL and Cassandra

Step-by-Step Procedure

Download the JDBC driver for MySQL from the MySQL site.

Here we are using mysql-connector-java-5.1.22-bin.jar

Put the connector in a directory included in the Sqoop classpath, such as the resources/sqoop subdirectory of your DataStax Enterprise (DSE) installation.

Start DataStax Enterprise, as an Analytics node

$ sudo bin/dse cassandra -t

(Using the command cassandra starts the Cassandra process plus CassandraFS and the -t option starts the Hadoop JobTracker and TaskTracker processes)

Start transferring the data using the below command :

import –connect jdbc:mysql://localhost:3306/<database Name>

–username <MySQL username> \

–password <MySQL password> \

–table <MySQL table Name> \

–split-by categoryName \

–cassandra-keyspace<Cassandra KeyspaceName> \

–cassandra-column-family <Cassandra column family> \

–cassandra-row-key <Cassandra row key> \

–cassandra-thrift-host localhost \

–cassandra-create-schema \

A Brief explanation of the Parameters given above

First, the dse command passes the external data source parameters:

-IP address of the server, the connection string for the external data source

-The database username

-Optional password (not shown in the example)

-The name of the external data source table to migrate

Next, the dse command passes the Cassandra parameters:

-Name of the new Cassandra keyspace to use

-Name of the new column family object

-Primary key of the column family

-IP address of the Cassandra node to connect to

-The cassandra-create-schema parameter, which tells Sqoop to create a new keyspace. You can also use existing keyspaces.