What Is Explainable Artificial Intelligence and Is It Needed?

A central problem in artificial intelligence is the lack of transparency and interpretability.

Explainable Artificial Intelligence (XAI) has been frequently debated in recent years and is a subject full of contradictions. Before discussing the reliability of Artificial Intelligence (AI), consider this: if AI is trying to model our thinking and decision making, we should be able to explain how we really make our own decisions, shouldn't we?

An artificial intelligence transformation has been under way, sometimes faster and sometimes slower, since the 1950s. In the recent past, the most studied and most striking area has been machine learning, which aims to model decision systems, behavior, and reactions.

The successful results obtained in machine learning have led to a rapid increase in the adoption of AI. Ongoing work promises autonomous systems capable of perceiving, learning, making decisions, and acting on their own.

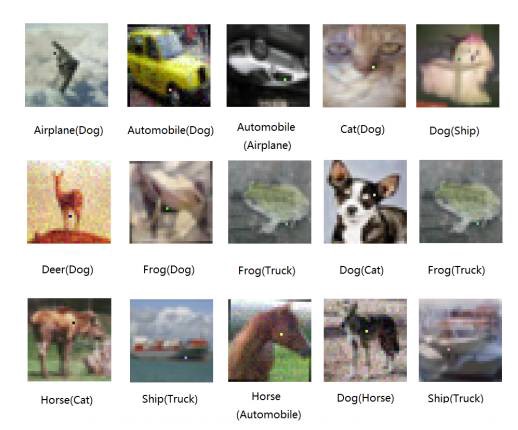

Although the concept of deep learning has older roots, recurrent neural networks, convolutional neural networks, reinforcement learning, and adversarial networks have been remarkably successful, especially since the 1990s. Despite these results, such systems are still unable to explain their decisions and actions to human users.

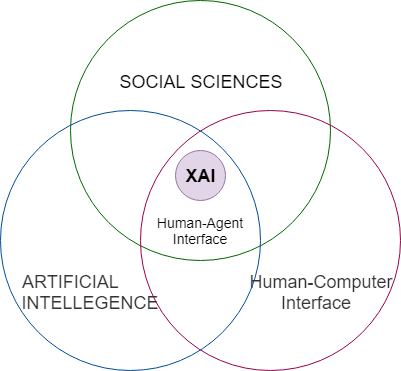

Scope of Explainable Artificial Intelligence

Deep learning models built from hundreds of layers and millions of artificial neurons are not infallible. They can lose their credibility quickly, especially when they are easily fooled, as in the case of a one-pixel attack. It then becomes inevitable to ask how and why a model succeeds or fails.

The Department of Defense (DoD) states that it is facing challenges that demand smarter, autonomous, and symbiotic systems.

“Explainable AI—especially explainable machine learning—will be essential if future warfighters are to understand, appropriately trust, and effectively manage an emerging generation of artificially intelligent machine partners.”

The complexity of such advanced applications grows with their success, and understanding and explaining them becomes harder. Some conferences now hold sessions devoted solely to this topic.

The rationale behind new machine/deep learning systems

The aim is to explain the rationale of new machine/deep learning systems, to determine their strengths and weaknesses, and to understand how they will behave in the future. The strategy for achieving this goal is to develop new or modified machine learning techniques that produce more explainable models.

These models are intended to be combined with state-of-the-art human-computer interface techniques that can turn them into understandable and useful explanation dialogues for the end user.

Three basic expectations guide the approach to such a system:

▪ Explain the purpose of the system and how the parties who design and use it are affected.

▪ Explain how data sources and results are used.

▪ Explain how the inputs to an AI model lead to its outputs.
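The third expectation can be pictured with a very small sketch. Assume a tiny logistic-regression risk model; the feature names, weights, and bias below are hypothetical and chosen only for illustration. Because the model is linear in its inputs, each input's share of the final score can be read off directly:

```python
import math

# Hypothetical weights and bias of a toy risk model (illustrative, not clinical).
WEIGHTS = {"age_decades": 0.4, "smoker": 1.2, "systolic_bp_over_120": 0.03}
BIAS = -4.0


def explain(features):
    """Return the predicted probability and each input's contribution to the log-odds."""
    contributions = {name: WEIGHTS[name] * value for name, value in features.items()}
    log_odds = BIAS + sum(contributions.values())
    probability = 1.0 / (1.0 + math.exp(-log_odds))
    return probability, contributions


# A made-up patient record, used only to exercise the function.
patient = {"age_decades": 6.0, "smoker": 1.0, "systolic_bp_over_120": 30.0}
probability, contributions = explain(patient)

# List the inputs from most to least influential on this particular prediction.
for name, value in sorted(contributions.items(), key=lambda kv: -abs(kv[1])):
    print(f"{name}: {value:+.2f}")
print(f"predicted risk: {probability:.2f}")
```

This kind of per-input breakdown is only this easy for simple models; for deep networks, comparable attributions have to be approximated, which is part of what makes explainability hard.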

“XAI is one of a handful of current DARPA programs expected to enable -the third-wave AI systems- where machines understand the context and environment in which they operate, and over time build underlying explanatory models that allow them to characterize real-world phenomena.”

Take medical practice as an example: after the patient data has been examined, the physician should both understand and be able to explain to the patient why, on the recommendation of the decision support system, the patient was told that he or she is at risk of a heart attack.

At this stage, the first important criterion is which data is evaluated. It is also important to identify what data is needed and what must be done for a proper assessment.

The psychology of the explanation

Consider the point where we refuse to use machine learning technology because we cannot explain how artificial intelligence reaches its decisions. Yet many people cannot really explain how they reached their own decisions!

Let’s imagine how a person comes to a decision at the model level: when we approach our biological structure at the chemical and physical level, we are talking about electrical signals passing from one brain cell to another. If this explanation does not satisfy you, then tell me how you decided to order a coffee!

Suppose that in a cafe one of your friends orders an iced coffee, another orders a hot coffee, and a third orders a cup of tea. Why do they choose iced coffee or hot coffee? Can anyone explain it in terms of the chemistry and synapses in the brain? Can you? Would you even want such an explanation? Do you know what happens instead? A human starts to make up a story about how he or she decided! Hopefully it will be a fantastic story to listen to, so try it!

Just look at your input and output data and then tell a fun story! In fact, analytical and important issues are often approached in a similar way. Interpretability, transparency, and clarity are analytical matters, and analyses without testing are like a one-way train ticket that gives a false sense of security.

Under perfect conditions, you want:

▪ A system that produces the best performance,

▪ The best explanation.

But real life forces us to choose.

Performance vs Explainability

Interpretability: You understand it, but it does not work well!

Performance: You do not understand it, but it works well!
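The contrast can be made concrete with a deliberately contrived toy, sketched below. The data, the one-rule model, and the nearest-neighbor model are all assumptions made for illustration: the data is built so that no single threshold rule can beat chance, while a model that simply memorizes examples does well but offers no compact rule to read. Real trade-offs are usually far less stark.

```python
# Toy labels (an assumption): a point is 1 when x and y have the same sign.
def label(x, y):
    return 1 if (x > 0) == (y > 0) else 0


train = [((x, y), label(x, y)) for x in (-2, -1, 1, 2) for y in (-2, -1, 1, 2)]
test = [((x, y), label(x, y)) for x in (-1.5, 1.5) for y in (-1.5, 1.5)]


def accuracy(predict, data):
    """Fraction of points in data that predict() labels correctly."""
    return sum(predict(point) == target for point, target in data) / len(data)


def fit_one_rule(data):
    """Interpretable model: the single-feature threshold rule with the best training accuracy."""
    best = None
    for i in (0, 1):
        for threshold in (-1.5, 0.0, 1.5):
            for positive in (0, 1):
                def rule(p, i=i, t=threshold, pos=positive):
                    return pos if p[i] > t else 1 - pos
                text = f"predict {positive} if feature {i} > {threshold}, else {1 - positive}"
                score = accuracy(rule, data)
                if best is None or score > best[0]:
                    best = (score, rule, text)
    return best[1], best[2]


def fit_nearest_neighbor(data):
    """Opaque model: copy the label of the closest training point."""
    def predict(p):
        def squared_distance(item):
            (x, y), _ = item
            return (x - p[0]) ** 2 + (y - p[1]) ** 2
        return min(data, key=squared_distance)[1]
    return predict


rule, rule_text = fit_one_rule(train)
neighbor = fit_nearest_neighbor(train)
rule_accuracy = accuracy(rule, test)
neighbor_accuracy = accuracy(neighbor, test)
print(f"one rule ({rule_text}): accuracy {rule_accuracy:.2f}")
print(f"nearest neighbor (no readable rule): accuracy {neighbor_accuracy:.2f}")
```

On this toy data the readable rule performs at chance level on the held-out points while the nearest-neighbor model classifies all of them correctly, which mirrors the two slogans above in miniature.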

Academics, researchers, and technology companies in particular tend to pay little attention to explainability and give more importance to performance. However, the picture is slightly different for the people and institutions working in the affected sectors. They want to be able to trust the system, and they are waiting for an explanation.

AI approaches differ for banks, insurance companies, healthcare providers, and other industries, because models in these sectors are subject to different legal regulations and ethical requirements. This brings us back to the same point: if your system must be explainable under such conditions, then for now you will have to replace it with a simpler, less powerful one!

Research on this subject is mostly carried out by institutions such as DARPA, Google, and DeepMind. While this work continues intensively, the reports make one thing clear: no matter the sector or who uses the AI system, the relationship between clarity and accuracy is such that a trade-off is unavoidable, and it looks set to continue for a while.

After all, AI should not be turned into a divine power that we are led to follow without establishing any cause-effect relationship. On the other hand, we should not ignore the insight it can provide.

Basically, we must think about creating flexible and interpretable models that can work in harmony with experts who have technical and academic knowledge, and with opinions from different sectors and disciplines.

Thanks

Many thanks to Başak Buluz, Yavuz Kömeçoğlu, and Hakan Aydemir for their feedback.
