Algorithms have an inordinate amount of influence on our lives. The predominant ones largely dictate the friends we interact with online, the goods we buy, and the news we see. An algorithm (YouTube’s) even made Justin Bieber happen—and that’s probably the least impressive example.

In a society as tech-obsessed as ours, this prevalence means that algorithms have obtained an almost magical quality. They represent the deus ex machina of the science-fiction thriller that is real life, a plot device that makes our tools do the things they do. According to anthropologist Nick Seaver of Tufts University, algorithms have so thoroughly graduated into the realm of cultural abstraction that they should be studied anthropologically.

Seaver studies technology’s effects on contemporary culture (he’s written a whole book about the codes that select music for us) and says algorithms’ outsize role in culture means that we have to understand what they are and how we think about them from a cultural perspective. In the latest issue of Big Data and Society, Seaver argues that even the word “algorithm” has moved beyond computer-science definitions and no longer refers only to computer code written to perform a task. It belongs to everyone and is fair game for social scientists, like himself, to define in complex terms.

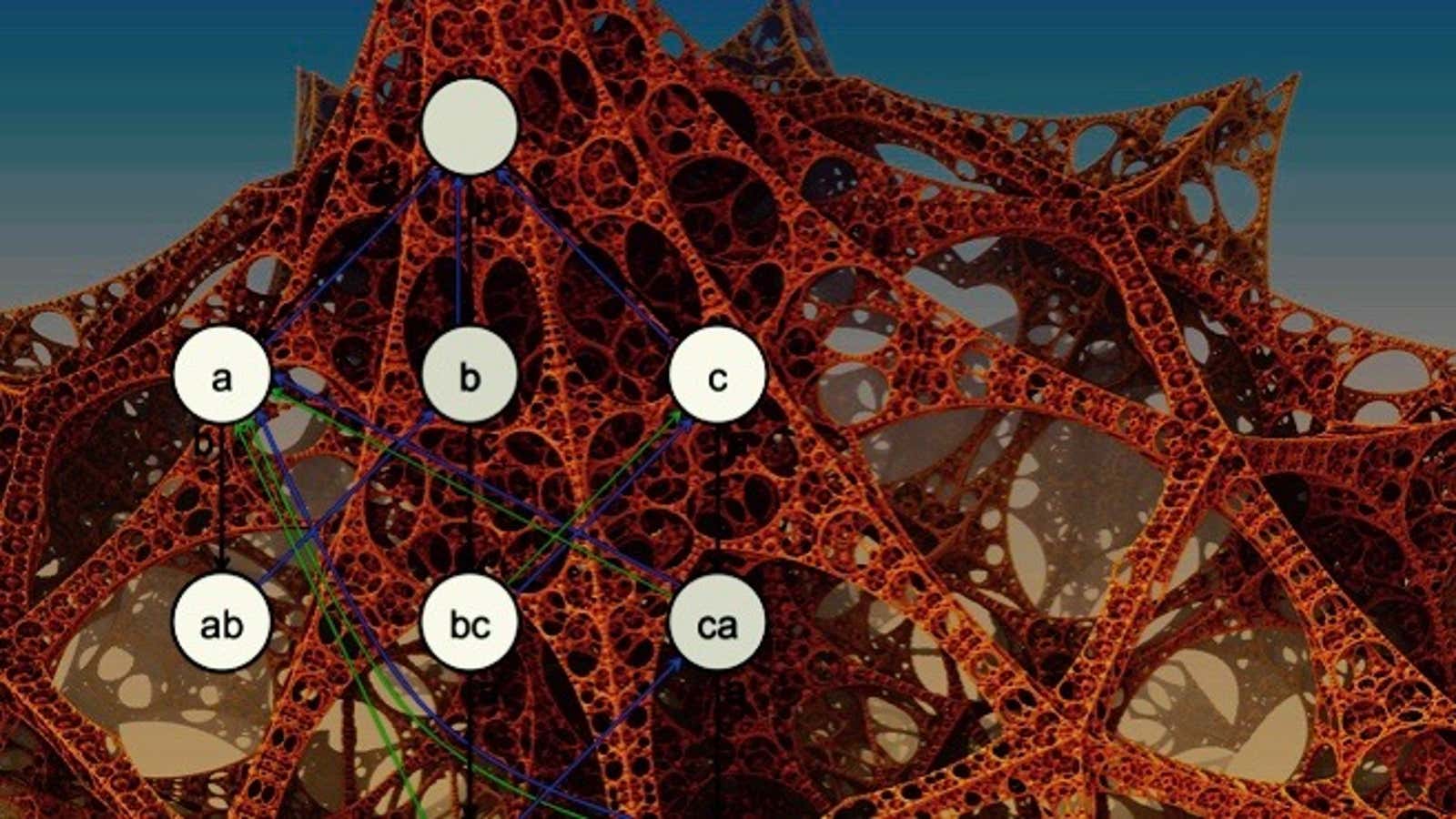

To reach his conclusion, Seaver talked to technologists, engineers, marketers, sociologists, and more. He asked coders at technology companies about their relationship to the word “algorithm” and discovered that even they feel alienated by the projects they work on, in part because they often tackle small pieces of bigger, impersonal projects (tentacles of an algorithmic organism, if you will) and end up missing any closeness to the whole. Writes Seaver:

Sitting in on ordinary practical work—whiteboarding sessions, group trouble-shooting, and hackathon coding—I saw “algorithms” mutate, at times encompassing teams of people and their decisions, and at other times referring to emergent, surprisingly mysterious properties of the code-base. Only rarely did “algorithm” refer to a specific technical object like bubble sort.

In other words, engineers end up relating to a company’s algorithms the way someone on the marketing or sales team might: as if they were some vague thing, sometimes a system, more often a concept. That matters because algorithms—the concept, processes, and tasks—underpin much of our society now, which means there are major implications in their use.

The word algorithm is most closely associated with tech giants, which have built the digital infrastructure our world now depends upon. Such massive networks—of people, information, or physical goods—are only possible due to the code delivered by these cultural algorithms. The code can almost be thought of as an intermediary, giving agency to a complex, serpentine cascade of decisions and allowing the actions of people to be magnified throughout trillions of digital interactions with other algorithms. The choices made by code reflect the choices of its creators.

When algorithms are thought of in this way, reports of bias being encoded into Facebook’s or Google’s software become easier to understand. When Google’s workforce is 2% black, it makes more sense that an algorithmic decision confused black people with gorillas. (That’s just one example: The algorithmic internet is littered with instances of bias, preferential treatment, and false senses of objectivity.) Seaver argues that only when algorithms are defined by social scientists and cultural commentators will we be able to discuss how to solve these systemic problems.

Some are already trying: The AI Now Institute, launched this week to study the societal impact of artificial intelligence and automated systems, has set a goal to create a shared language between the social, cultural, and scientific fields. The organization doesn’t just have the backing of big tech, though its founders work at Google and Microsoft. Their “algorithm” also includes civil rights activists, lawyers, and anthropologists.

“AI is like individual human intelligence or intelligence embodied in organizational forms like agencies or companies—it can deliver enormous social benefits as well as burdens,” said Mariano-Florentino Cuéllar, a California Supreme Court justice and advisory board member of AI Now, in a Nov. 15 speech. “We’re in for some discussion of what it means to be human. And we will soon confront big questions that will drive the well-being of our kids and their kids.”