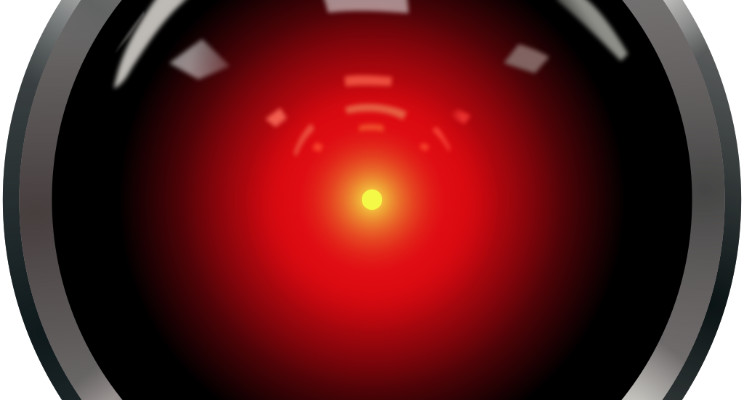

AI (Deep Learning) explained simply

Sci-fi-level Artificial Intelligence (AI) like HAL 9000 has been promised since the 1960s, but PCs and robots stayed dumb until recently. Now tech giants and startups are announcing the AI revolution: self-driving cars, robo-doctors, robo-investors, etc. PwC just said that AI will contribute $15.7 trillion to the world economy by 2030. "AI" is the buzzword of 2017, like "dot com" was in 1999, and everyone claims to be into AI. Don't be confused by the AI hype. Is this a bubble, or is it real? What's new compared with the older AI flops?

AI is neither easy nor fast to apply. The most exciting AI examples come from universities or the tech giants. Self-appointed AI experts who promise to revolutionize any company with the latest AI in a short time are spreading AI misinformation, and some are just rebranding old tech as AI. Everyone is already using the latest AI through services from Google, Microsoft, Amazon, etc. But "deep learning" will not soon be mastered by the majority of businesses for custom in-house projects. Most have too little relevant digital data to train an AI reliably. As a result, AI will not kill all jobs, especially because it will require humans to train and test each AI.

AI can now "see" and master vision jobs, like identifying cancer or other diseases from medical images, statistically better than human radiologists, ophthalmologists, dermatologists, etc. It can also drive cars, read lips, etc. AI can paint in any style learnt from samples (for example, Picasso's or yours) and apply the style to photos. And the inverse: guess a realistic photo from a painting, hallucinating the missing details. AIs looking at screenshots of web pages or apps can write code that produces similar pages or apps.

(Style transfer: learn from a photo, apply to another. Credits: Andrej Karpathy)

AI can now "hear", and not only understand your voice: it can compose music in the style of the Beatles or in yours, imitate the voice of any person it hears for a while, and so on. The average person can't tell which paintings or music were composed by humans and which by machines, or which voices are spoken by a human and which by an AI impersonator.

AI trained to win at poker learned to bluff, handling missing and potentially fake, misleading information. Bots trained to negotiate and find compromises learned to deceive too, guessing when you're not telling them the truth and lying as needed. A Google Translate AI trained only on Japanese⇄English and Korean⇄English examples also translated Korean⇄Japanese, a language pair it was not trained on. It seems it built an intermediate language on its own, representing any sentence regardless of language.

Machine learning (ML), a subset of AI, makes machines learn from experience, from examples of the real world: the more data, the more it learns. A machine is said to learn from experience with respect to a task if its performance at the task improves with experience. Most AIs are still made of fixed rules and do not learn. From now on I will use "ML" to refer to "AI that learns from data", to underline the difference.

Artificial Neural Networks (ANNs) are only one approach to ML; others (not ANN) include decision trees, support vector machines, etc. Deep learning is an ANN with many levels of abstraction. Despite the "deep" hype, many ML methods are "shallow". Winning MLs are often a mix, an ensemble of methods, like trees + deep learning + others, independently trained and then combined. Each method might make different errors, so averaging their results can, at times, beat any single method.
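Why averaging helps can be shown with a toy sketch. The "models" here are not real ML: each one is just the true value plus its own random error, and the noise level, ensemble size and number of trials are made-up assumptions. The only point is that independent errors partly cancel when averaged.

```python
import random
import statistics

random.seed(0)  # fixed seed so repeated runs give the same numbers

TRUE_VALUE = 10.0  # the quantity every model is trying to predict


def noisy_model(noise=2.0):
    """Stand-in for one trained model: the truth plus its own independent error."""
    return TRUE_VALUE + random.gauss(0, noise)


def ensemble(size=5):
    """Average the predictions of `size` independently wrong models."""
    return statistics.mean(noisy_model() for _ in range(size))


def mean_abs_error(predict, trials=2000):
    """Average distance from the truth over many predictions."""
    return statistics.mean(abs(predict() - TRUE_VALUE) for _ in range(trials))


single_error = mean_abs_error(noisy_model)
ensemble_error = mean_abs_error(ensemble)
print(f"single model error: {single_error:.2f}")
print(f"ensemble error:     {ensemble_error:.2f}")
```

If all the models made the same mistakes (for example, because they were trained on the same flawed data), averaging would not help: the gain comes from the errors being different.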

The old AI did not learn. It was rule-based, a collection of "if this then that" rules written by humans: this can be AI since it solves problems, but not ML since it does not learn from data. Most current AI and automation systems are still rule-based code. ML has been known since the 1960s but, like the human brain, it needs billions of computations over lots of data. Training an ML on 1980s PCs took months, and digital data was rare. Handcrafted rule-based code solved most problems fast, so ML was forgotten. But with today's hardware (NVIDIA GPUs, Google TPUs, etc.) you can train an ML in minutes, good parameter settings are known, and more digital data is available. So, after 2010, one AI field after another (vision, speech, language translation, game playing, etc.) was mastered by MLs, winning over rule-based AIs, and often over humans too.

Why AI beat humans at chess in 1997, but at Go only in 2016: for problems that humans can master as a limited, well-defined rule set, for example beating Kasparov (then world champion) at chess, it's enough (and best) to write rule-based code the old way. The move sequences worth exploring for the next several moves in chess (8 x 8 grid, with constrained moves) number in the billions: by 1997, computers had simply become fast enough to explore the outcomes of enough of the possible move series to beat humans. But in Go (19 x 19 grid, free placement) there are more possible positions than atoms in the observable universe: no machine can try them all in a billion years. It's like trying all random letter combinations to get this article as a result, or trying random paint strokes until you get a Picasso: it will never happen. The only known hope is to train an ML on the task. But ML is approximate, not exact, and should be used only for intuitive tasks that you can't reduce to deterministic "if this then that" logic in reasonably few loops. ML is "stochastic": it is for patterns you can analyse statistically but can't predict precisely.
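The size gap is easy to check with a few lines of arithmetic. The branching factors below (about 35 legal moves per chess position, about 250 per Go position) and the 10^80 atoms figure are commonly quoted rough estimates, used here as assumptions; 3^361 is only an upper bound on Go positions, since it also counts illegal ones.

```python
CHESS_BRANCHING = 35   # assumed rough average of legal moves per chess position
GO_BRANCHING = 250     # assumed rough average of legal moves per Go position
ATOMS_IN_UNIVERSE = 10 ** 80  # common order-of-magnitude estimate


def move_sequences(branching, plies):
    """Number of distinct move sequences when looking `plies` half-moves ahead."""
    return branching ** plies


def order_of_magnitude(n):
    """Power of ten of a positive integer, e.g. 1000 -> 3."""
    return len(str(n)) - 1


# Every point of the 19 x 19 board is empty, black or white.
go_configurations = 3 ** (19 * 19)

for plies in (4, 8, 12):
    chess = order_of_magnitude(move_sequences(CHESS_BRANCHING, plies))
    go = order_of_magnitude(move_sequences(GO_BRANCHING, plies))
    print(f"{plies:2d} plies ahead: chess ~10^{chess}, Go ~10^{go}")

print(f"Go board configurations: ~10^{order_of_magnitude(go_configurations)}")
print(f"More than atoms in the universe: {go_configurations > ATOMS_IN_UNIVERSE}")
```

Real chess programs never enumerate every sequence: they prune hopeless branches, which is what made the 1997 result feasible. In Go even aggressive pruning leaves far too much to explore.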

ML automates automation, as long as you have correctly prepared the data to train on. That's unlike manual automation, where humans come up with rules to automate a task: lots of "if this then that" describing, for example, which e-mails are likely to be spam, or whether a medical photo shows a cancer. In ML we instead only feed in data samples of the problem to solve: lots (thousands or more) of spam and non-spam emails, cancer and non-cancer photos, etc., all first sorted, polished, and labeled by humans. The ML then figures out (learns) the rules by itself, as if by magic, but it does not explain these rules. You show it a photo of a cat and the ML says this is a cat, but gives no indication why.
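Here is a deliberately tiny sketch of "learning the rules from labeled examples" for the spam case. It is a plain word counter, far simpler than any real spam filter, and the six example emails are invented for illustration. Notice that nobody writes "if the email contains 'prize' then spam": that association comes out of the labeled data.

```python
from collections import Counter

# Hypothetical training set, sorted and labeled by a human.
TRAINING = [
    ("win money now", "spam"),
    ("cheap pills win prize", "spam"),
    ("claim your free prize money", "spam"),
    ("meeting agenda for monday", "ham"),
    ("lunch on monday with the team", "ham"),
    ("please review the project agenda", "ham"),
]


def train(examples):
    """Count how often each word appears under each label."""
    counts = {"spam": Counter(), "ham": Counter()}
    for text, label in examples:
        counts[label].update(text.split())
    return counts


def classify(counts, text):
    """Pick the label whose training emails used these words more often."""
    scores = {
        label: sum(words[word] for word in text.split())
        for label, words in counts.items()
    }
    # Ties (including emails made only of unknown words) default to "ham".
    return "spam" if scores["spam"] > scores["ham"] else "ham"


model = train(TRAINING)
print(classify(model, "free money prize"))
print(classify(model, "project meeting monday"))
```

With six emails the "rules" are still readable, since you can print the counters. With millions of examples and a neural network instead of a counter, the same idea produces rules no human can follow.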

(Bidirectional AI transforms: Horse to Zebra, Zebra to Horse, Summer from/to Winter, Photo from/to Monet etc. Credits: Jun-Yan Zhu, Taesung Park et al.)

Most ML is Supervised Learning, where the training examples are given to the ML along with labels, a description or transcription of each example. You first need a human to separate the photos of cats from those of dogs, or spam from legitimate emails, etc. If you label the data incorrectly, the ML results will be incorrect; this is very important, as will be discussed later. Throwing unlabeled data at an ML is Unsupervised Learning, where the ML discovers patterns and clusters in the data; this is useful for exploration, but not enough alone to solve many problems. Some MLs are semi-supervised.

In Anomaly Detection you identify unusual things that differ from the norm, for example fraud or cyber intrusions. An ML trained only on old frauds would miss the ever-new fraud ideas. Instead, you can teach it the normal activity and ask the ML to warn about any suspicious difference. Governments already rely on ML to detect tax evasion.
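The "teach the normal, warn on the difference" idea can be sketched with basic statistics. This is a minimal illustration, not how a real fraud system works: the payment amounts are invented, and the threshold of 3 standard deviations is an assumption.

```python
import statistics

# Hypothetical history of one customer's normal payments.
normal_amounts = [20, 25, 22, 30, 27, 24, 26, 23, 28, 21]

# "Training" here is just summarizing what normal looks like.
mean = statistics.mean(normal_amounts)
stdev = statistics.stdev(normal_amounts)


def is_suspicious(amount, threshold=3.0):
    """Flag amounts further than `threshold` standard deviations from normal."""
    return abs(amount - mean) / stdev > threshold


print(is_suspicious(26))   # an ordinary payment
print(is_suspicious(500))  # far outside anything seen before
```

No example of fraud was needed: anything unlike the learned normal behavior gets flagged, including fraud ideas nobody has seen yet. The price is false alarms whenever an honest customer does something unusual.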

Reinforcement Learning is shown in the 1983 movie WarGames, where a computer decides not to start World War III after playing out every scenario at light speed and finding that all of them would cause world destruction. The AI discovers, through millions of trials and errors within the rules of a game or an environment, which actions yield the greatest rewards. AlphaGo was trained largely this way: after studying human games, it played against itself millions of times, reaching superhuman skill. It made surprising moves, never seen before, that humans would have considered mistakes. But these were later proven to be brilliantly innovative tactics. The ML became more creative than humans at Go. At poker or other games with hidden cards, MLs learn to bluff and deceive too: they do whatever is best to win.
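Trial and error guided by rewards can be sketched with the simplest reinforcement learning setup, a two-choice "bandit". This is nowhere near what AlphaGo does: the two actions, their hidden reward probabilities and the 10% exploration rate are all assumptions made up for this example.

```python
import random

random.seed(1)  # fixed seed so the run is repeatable

# Hidden from the learner: how often each action pays off.
REWARD_PROBABILITY = {"left": 0.2, "right": 0.8}


def play(action):
    """The environment: return a reward of 1 or 0 for the chosen action."""
    return 1.0 if random.random() < REWARD_PROBABILITY[action] else 0.0


def learn(episodes=5000, epsilon=0.1):
    """Epsilon-greedy learning: mostly repeat what worked, sometimes explore."""
    value = {action: 0.0 for action in REWARD_PROBABILITY}
    tries = {action: 0 for action in REWARD_PROBABILITY}
    for _ in range(episodes):
        if random.random() < epsilon:
            action = random.choice(list(value))  # explore a random action
        else:
            action = max(value, key=value.get)   # exploit the best known action
        reward = play(action)
        tries[action] += 1
        # Running average of the rewards seen for this action.
        value[action] += (reward - value[action]) / tries[action]
    return value


values = learn()
best_action = max(values, key=values.get)
print(values)
print("learned to prefer:", best_action)
```

Nobody tells the learner which action is better: it finds out by playing thousands of times and keeping score. Games like Go add a huge number of situations and delayed rewards, which is why they need neural networks and millions of self-played games.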

The "AI effect" is when people argue that an AI is not real intelligence. Humans subconsciously need to believe they have a magical spirit and a unique role in the universe. Every time a machine outperforms humans at a new piece of intelligence, such as playing chess, recognizing images, translating, etc., people always say: "That's just brute force computation, not intelligence". Lots of AI is included in many apps but, once widely used, it's not labelled "intelligence" anymore. If "intelligence" is only what's not yet done by AI (what's still unique to the brain), then dictionaries should be updated every year, like: "math was considered intelligence until the 1950s, but no longer, since computers can do it". That's quite strange. About "brute force": a human brain has about 100 trillion neuronal connections, far more than any computer on earth. ML can't do "brute force": trying all the combinations would take billions of years. ML makes "educated guesses" using fewer computations than a brain. So it should be the "smaller" AI that claims the human brain is not real intelligence, only brute force computation.

ML is not a human brain simulator: real neurons are very different. ML is an alternative way to reach brain-like results, similar to a brain the way a car is similar to a horse. What matters is that both car and horse can transport you from point A to point B: the car does it faster, consuming more energy and lacking most of the horse's features. Both the brain and ML run statistics (probability) to approximate complex functions: they give results that are only a bit wrong, but usable. MLs and brains give different results on the same task, as they approximate in different ways. Everyone knows that while the brain forgets things and is limited at explicit math, machines are perfect at memory and math. But the old idea that machines either give exact results or are broken is wrong and outdated. Humans make many mistakes, but instead of "this brain is broken!" you hear "study more!". MLs that make mistakes are not "broken" either: they must study more data, or different data. MLs trained on biased (human-generated) data will end up racist, sexist, unfair: human in the worst way. AI should not be compared only with our brain; AI is different, and that's an opportunity. We train MLs on our data, so they imitate only human jobs, activity and brains. But the same MLs, if trained in other galaxies, could imitate different (perhaps better) alien brains. Let's try to think in alien ways too.

AI is getting as mysterious as humans. The idea that computers can't be creative, lie, be wrong or be human-like comes from the old rule-based AI, which was indeed predictable, but that seems to have changed with ML. The ammunition for dismissing each new capability mastered by AI as "not real intelligence" is running out. The real question left is: general versus narrow AI.

(Please forget the general AI seen in movies. But the "narrow AI" is smart too!)

Unlike in some other sciences, you can't verify that an ML is correct using a logical theory. To judge whether an ML is correct, you can only test its results (errors) on unseen new data. The ML is not a black box: you can see the "if this then that" list it produces and runs, but it's often too big and complex for any human to follow. ML is a practical science that tries to reproduce the real world's chaos and human intuition without giving a simple or theoretical explanation. It gives you the linear algebra that produces the results, which is too big to understand. It's like when you have an idea that works but can't explain exactly how you came up with it: for the brain that's called inspiration, intuition, the subconscious, while in computers it's called ML. If you could get the complete list of neuron signals that caused a decision in a human brain, could you understand why and how the brain really made that decision? Maybe, but it's complex.

Everyone can intuitively imagine (and some can even draw) a person's face, both as it is and in Picasso's style. Or imagine (and some can even play) sounds or music styles. But no one can describe the face, sound or style change with a complete, working formula. Humans can visualize only up to 3 dimensions: even Einstein could not consciously conceive ML-like math with, let's say, 500 dimensions. Such 500D math is solved by our brains all the time, intuitively, like magic. Why is it not solved consciously? Imagine if, for each idea, the brain also gave us the formulas used, with thousands of variables. That extra info would confuse us and slow us down a lot, and for what? No human could use pages-long math; we did not evolve with a USB cable in our heads.

Faulty automation increases (rather than kills) human jobs. Two days after I published this article, my profile was suddenly blocked. Search Google for "LinkedIn account restricted" to learn that this happens to many people simply for too much activity, even without messaging. Out of curiosity, I had only opened the profiles of the hundreds of people who clicked "I like" on this article (thanks to you all). Then a naive "x pages opened within time y" rule-based AI decided I was an AI too, of the "web bot" kind (programs that browse all the pages of a site to copy its contents). It blocked me without any "Slow down" warning: why warn a bot?

I was not a bot; I swear I am human. Counting "x pages opened within time y" catches many bots, but also "false positives": curious humans at activity peaks. This frustrated the LinkedIn staff too: whom to trust, the AI or the user? I had to send my ID as proof, and it took days. This rule-based "AI" created extra human support jobs just to handle unnecessary "AI" errors that humans alone would not make. Think of "flag all emails from Nigeria as spam" or "flag all people with long beards as terrorists". You can improve rules by adding parameters, past activity, etc., but they never reach the accuracy of humans or finely trained MLs. Beware of "automation" or "AI" claims: most of it is still overly simple rules, with no deep learning at all. Microsoft acquired LinkedIn for $26 billion and will surely upgrade this old piece to real ML. But until then, don't browse LinkedIn too fast!
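A rule of the "x pages opened within time y" kind could look like the sketch below. This is only my guess at the general shape of such a rule: the function name, the limit of 100 pages and the one-hour window are invented for illustration, not LinkedIn's actual values or code.

```python
def looks_like_bot(open_times, max_pages=100, window_seconds=3600):
    """Naive rule: flag anyone who opens more than `max_pages` pages
    within any `window_seconds` stretch. Times are seconds since login."""
    times = sorted(open_times)
    start = 0
    for end, current in enumerate(times):
        # Slide the window start forward until it fits in the time limit.
        while current - times[start] > window_seconds:
            start += 1
        if end - start + 1 > max_pages:
            return True
    return False


crawler = [i * 2 for i in range(500)]          # one page every 2 seconds
curious_human = [i * 20 for i in range(150)]   # one page every 20 seconds
casual_human = [i * 600 for i in range(20)]    # one page every 10 minutes

print(looks_like_bot(crawler))        # a real bot is caught
print(looks_like_bot(curious_human))  # a human on a busy day is caught too
print(looks_like_bot(casual_human))   # a quiet human passes
```

The rule has no way to tell the crawler from the curious human: both exceed the same counter. Raising the limit lets more bots through, lowering it blocks more people, and no single number fixes both.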

If no human can predict something, often the ML can't either. Many people have trained MLs on years of market price changes, but these MLs fail to predict the market. An ML guesses how things will go if the learned past factors and trends stay the same. But stock and economic trends change very often, almost at random. MLs fail when older data becomes less relevant or wrong quickly and often. The task or rules learned must stay the same, or be updated only rarely, so you can re-train. For example, learning to drive, play poker, paint in a style, predict a sickness from health data, or translate between languages are jobs for MLs: old examples will stay valid for the near future.

ML can find correlations in data, but correlation does not imply causation, and ML is unreliable at guessing cause and effect. Do not let ML try to find correlations that do not exist in the data set: ML will find other, unrelated patterns that are easy to mistake for what you meant to find. In the weird research paper "Automated inference on criminality using face images", the ML was trained on labeled face photos of jailed and honest guys (some of whom, let me add, could be criminals who were never discovered). The authors claimed that the ML learned to catch new bad guys from just a face photo, but with the "feeling" that further research will refute the validity of physiognomy (racism). In reality, their data set is biased: some white-collar criminals pose as honest guys, laughing about it. The ML learned the only relations it could find: happy or angry mouths, type of collar (neck cloth). Those smiling with a white collar are classified as honest; those sad with a dark collar are rated as crooks. The authors tried to judge people by their faces (not science! no correlation), but failed to see that the ML had learned to judge by clothes (social status) instead. The ML amplified an unjust bias: street thieves in cheap clothes (perhaps with darker skin) are discovered and jailed more often than corrupt politicians and top-level corporate fraudsters. This ML will send all the street guys to jail, and not a single white-collar criminal, unless it is also told that street thieves are discovered x% more frequently than white-collar ones. And if told so, it would again make random decisions or none at all; this is not science. One lesson is: MLs have not experienced living in our world like an adult human. MLs can't know what's outside the data given, including the "obvious", for example: the more damaging a fire is, the more fire trucks are sent to stop it. An ML will note: the more firefighters at a fire scene, the more damage the day after, so fire trucks cause fire damage.
Result: the ML will send the firefighters to jail for arson, on the grounds of a "95% correlation"!
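The firefighter trap is easy to reproduce with a small simulation. All the numbers are invented: fire size is the hidden common cause, and it alone decides both how many trucks are sent and how much damage is done. The damage function never even looks at the number of trucks, yet the two end up strongly correlated.

```python
import math
import random
import statistics

random.seed(42)  # fixed seed so the run is repeatable


def trucks_sent(fire_size):
    """Dispatch rule (assumed): bigger fires get more trucks."""
    return round(fire_size) + random.randint(0, 1)


def damage_done(fire_size):
    """Damage depends on fire size only, never on the trucks."""
    return fire_size * 10 + random.gauss(0, 5)


def pearson(xs, ys):
    """Pearson correlation coefficient between two equal-length lists."""
    mean_x, mean_y = statistics.mean(xs), statistics.mean(ys)
    covariance = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    spread_x = math.sqrt(sum((x - mean_x) ** 2 for x in xs))
    spread_y = math.sqrt(sum((y - mean_y) ** 2 for y in ys))
    return covariance / (spread_x * spread_y)


fire_sizes = [random.uniform(1, 10) for _ in range(1000)]
trucks = [trucks_sent(size) for size in fire_sizes]
damage = [damage_done(size) for size in fire_sizes]

correlation = pearson(trucks, damage)
print(f"correlation between trucks and damage: {correlation:.2f}")
```

An ML given only the `trucks` and `damage` columns sees a strong pattern and nothing else. Only someone who knows how the data was generated, here by reading the code and in life by common sense, can tell that the trucks are innocent.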

(ML can't find correlations that do not exist, like: face with criminality. But this data set is biased: no smiling white collar criminals in it! ML will learn the bias)

MLs can predict what humans can't, in some cases. "Deep Patient", trained on data from 700,000 patients by Mount Sinai Hospital in New York, can anticipate the onset of schizophrenia: no one knows how! Only the ML can do it: humans can't learn to do the same by studying the ML. This is an issue: for an investment, medical, judicial or military decision, you may want to know how the AI reached its conclusions, but you can't. You can't know why the ML denied your loan, advised a judge to jail you, or gave the job to someone else. Was the ML fair or unfair? Unbiased, or biased by race, gender or something else? The ML computations are visible, but there are too many to make a human-readable summary. The ML speaks like a prophet: "You humans can't understand, even if I show you the math, so have faith! You tested my past predictions, and they were correct!".

Humans never fully explain their decisions either: we give reasonable-sounding but always incomplete, over-simplified reasons. For example, "We invaded Iraq due to its weapons of mass destruction" looked right, but there were dozens more reasons. This, instead, looks wrong even when the ML is right: "We bombed that village since a reputable ML said they were terrorists". It only lacks an explanation. People who get almost always right answers from MLs will start to make up fake explanations, just so the public accepts the MLs' predictions. Some will use MLs in secret, crediting the ideas to themselves.

The ML results are only as good as the data you train the ML with. In ML you rarely write software: that's provided by Google (Keras, TensorFlow), Microsoft, etc., and the algorithms are open source. ML is an unpredictable science defined by experimentation, not by theory. You spend most of the time preparing the training data and studying the results, then making lots of changes, mostly by guessing, and retrying. MLs fed with too little or inaccurate data will give wrong results. Google Photos incorrectly classified African Americans as gorillas, while Microsoft’s Tay bot learned Nazi, sex and hate speech after only hours of training on Twitter. The issue was the data, not the software.

Undesirable biases are implicit in human-generated data: an ML trained on Google News associated “father is to doctor as mother is to nurse”, reflecting gender bias. If used as is, it might prioritize male job applicants over female ones. A law enforcement ML could discriminate by skin color. During the Trump campaign, some MLs may have recommended "Mexican" restaurants less often, as a side effect of reading many negative posts about Mexican immigration, even if no one complained about Mexican food or restaurants specifically. You can't simply copy data from the internet into your ML and expect it to end up balanced. Training a wise ML is expensive: you need humans to review and “de-bias” what's wrong or evil but occurs naturally in the media.

(Photo: James Bridle entraps a self-driving car inside an unexpected circle)

ML is limited since it lacks general intelligence and prior common sense. Even merging all the specialized MLs together, or training an ML on everything, would still fail at general AI tasks, for example at understanding language. You can't talk about every topic with Siri, Alexa or Cortana as you can with real people: they're just assistants. In 2011, IBM Watson answered faster than humans on the Jeopardy! TV quiz, but confused Canada with the USA. ML can produce useful summaries of long texts, including sentiment analysis (identifying opinions and mood), but not as reliably as human work. Chatbots fail to understand too many questions. No current AI can do what's easy for every human: to always guess when a customer is frustrated or sarcastic, and to change tone accordingly. There is no general AI like in the movies. But we can get small sci-fi-looking AI pieces, separately, that beat humans at narrow (specific) tasks. What's new is that "narrow" can include creative or supposedly human-only tasks: painting (styles, geometries, less likely if symbolic or conceptual), composing, creating, guessing, deceiving, faking emotions, etc., all of which, incredibly, seem not to require general AI.

No one knows how to build a general AI. This is great: we get superhuman specialized (narrow AI) workers, but no Terminator or Matrix will decide on its own to kill us anytime soon. Unfortunately, humans can train machines to kill us right now, for example a terrorist teaching self-driving trucks to hit pedestrians. An AI with general intelligence would probably self-destruct rather than obey terrorist orders. For details on the AI apocalypse debate, read: Will AI kill us all after taking our jobs?

AI ethics will be hacked, reprogrammed illegally. Current ML, not being general or sentient AI, will always follow the orders (training data) given by humans: don't expect AI conscientious objectors. Each government will have to write laws detailing whether a self-driving car should prefer to kill its passenger(s) or pedestrian(s). Example: two kids suddenly run in front of a car with a single passenger, and to avoid the kids, the car's only option is a deadly one, like driving off a cliff. Polls show that the majority of people would prefer to own a car that kills pedestrians rather than themselves. Most people don't think about these very rare events yet, but they will overreact and question politicians when the first case happens, even if it's only once per billion cars. In countries where cars are instructed to kill a single passenger to save multiple pedestrians, car owners will ask hackers to secretly reprogram their cars to always save the passenger(s). But hidden AI malware and viruses will probably be installed along with the pirated AI patches too!

Teaching a human is easy: for most tasks, you give a dozen examples and let him/her try a few times. But an ML requires thousands of times more labeled data: only humans can learn from little data. An ML must try a million times more: if real-world experiments are mandatory (when you can't fully simulate, as you can for chess, Go, etc.), you'll have to crash thousands of real cars, kill or hurt thousands of real human patients, etc. before completing a training. An ML, unlike humans, overfits: it memorizes overly specific details of the training data instead of general patterns. So it fails on real tasks over never-before-seen data, even data just a little different from the training data. Current ML lacks the human general intelligence that models each situation and relates it to prior experience, so as to learn from very few examples or trials and errors, memorizing just what's general, ignoring what's not relevant, and avoiding trying what can be predicted to fail.
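Overfitting in its most extreme form is plain memorization, which a toy example makes visible. The task (decide whether a number is "big", meaning 50 or more) and both "models" are invented for illustration: one memorizes every training example, the other learns a single general threshold. Both are then checked on numbers they never saw.

```python
# Training data: even numbers only. Test data: odd numbers, never seen before.
train_set = [(x, x >= 50) for x in range(0, 100, 2)]
test_set = [(x, x >= 50) for x in range(1, 100, 2)]

# Model 1: memorize every training example exactly.
memorized = dict(train_set)


def memorizer(x):
    """Perfect recall of training data, a blind guess for anything new."""
    return memorized.get(x, False)


# Model 2: learn one general rule, a threshold halfway between
# the largest "small" example and the smallest "big" example.
largest_small = max(x for x, is_big in train_set if not is_big)
smallest_big = min(x for x, is_big in train_set if is_big)
threshold = (largest_small + smallest_big) / 2


def generalizer(x):
    """Apply the learned threshold to any number, seen or unseen."""
    return x > threshold


def accuracy(model, examples):
    """Fraction of examples the model labels correctly."""
    return sum(model(x) == label for x, label in examples) / len(examples)


for name, model in (("memorizer", memorizer), ("generalizer", generalizer)):
    print(f"{name}: train {accuracy(model, train_set):.2f}, "
          f"test {accuracy(model, test_set):.2f}")
```

Both models look perfect on the training data, so only the unseen test data reveals that the memorizer learned nothing general. This is why an ML is always judged on data held back from training.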

After learning from a million examples, an ML can make fewer mistakes than humans in percentage terms, but its errors can be of a different kind, ones humans would never make, such as classifying a toothbrush as a baseball bat. This difference from humans can be exploited for malicious AI hacking, for example by painting small "adversarial" changes over street signs, unnoticeable by humans but dramatically confusing for self-driving cars.
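How a change too small to notice can flip a decision is easiest to see on a linear classifier. This is a simplified sketch in the spirit of gradient-sign attacks, not an attack on a real vision system: the 100 "pixels", the weights and the labels are all made up. Each pixel is nudged by only 0.03, but every nudge pushes the score in the same direction, so the tiny changes add up.

```python
# Hypothetical trained weights of a 100-pixel linear classifier.
weights = [1.0 if i % 2 == 0 else -1.0 for i in range(100)]


def score(pixels):
    """Weighted sum of the pixel values."""
    return sum(w * p for w, p in zip(weights, pixels))


def classify(pixels):
    """Positive score means "stop sign", otherwise "speed limit"."""
    return "stop sign" if score(pixels) > 0 else "speed limit"


# An image the classifier gets right, with a modest margin.
image = [0.52 if i % 2 == 0 else 0.48 for i in range(100)]

# Adversarial change: move every pixel a tiny step against its weight.
EPSILON = 0.03
adversarial = [
    p - EPSILON * (1 if w > 0 else -1) for w, p in zip(weights, image)
]

largest_change = max(abs(a - p) for a, p in zip(adversarial, image))
print(classify(image), round(score(image), 2))
print(classify(adversarial), round(score(adversarial), 2))
print("largest single-pixel change:", round(largest_change, 2))
```

With millions of pixels instead of 100, the per-pixel step can be far smaller and still flip the result. A human looking at the two images would see the same sign.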

(AI trainers = puppy trainers, not engineers. Photo: Royal Air Force, Mildenhall)

AI will kill old jobs, but create new ML-trainer jobs, similar to puppy trainers, not to engineers. An ML is harder to train than a puppy since (unlike the puppy) it lacks general intelligence, so it learns everything it spots in the data, without any selection or common sense. A puppy would think twice before learning evil things, such as killing friends. For an ML, instead, it makes no difference whether it serves terrorists or hospitals, and it will not explain why it made its decisions. An ML will not apologize for errors or fear being powered off for them: it's not a sentient general AI. For safety and quality standards, each ML will be surrounded by many humans, skilled in ML training and ML testing, but also in trying to interpret the ML's decisions and ethics. All at once, in the same job title.

Practical ML training. If you train with photos of objects held by a hand, the ML will include the hand as part of the objects, failing to recognize the objects alone. A dog knows how to eat from a hand; the dumb ML eats your hand too. To fix this, train on hands alone, then on objects alone, and finally on objects held by hands, labeled as "object x held by hand". The same goes for object changes: a car without wheels or deformed by an accident, a house in ruins, etc. Any human knows it's a car crashed into a wall, a bombed house, etc. An ML sees unknown new objects, unless you teach it piece by piece, case by case. Including weather! If you train with photos all taken on sunny days, tests will work with other sunny-day photos, but not with photos of the same things taken on cloudy days. The ML learned to classify based on sunny or cloudy weather, not just on the objects. A dog knows that the task is to tell what the object is, whether it's seen in sunny or cloudy light. An ML, instead, picks up all the subtle clues: you need to teach everything 100% explicitly.

Copyright and intellectual property laws will need updates: MLs, like humans, can invent new things. An ML is shown existing things A and B, and produces C, a new, original thing. If C is different enough from both A and B, and from anything else on earth, C can be patented as an invention or artwork. Who is the author? Further, what if A and B were patented or copyrighted material? When C is very different, the authors of A and B can't guess that C exists thanks to their A and B. Let's say it is not legal to train MLs on recent copyrighted paintings, music, architecture, design, chemical formulas, perhaps stolen user data, etc. Then how do you guess the data sources used from just the ML results, when they are less recognizable than a Picasso style transfer? How will you know an ML was used at all? Many people will use MLs in secret, claiming the results as their own.

For most tasks in small companies, it will remain cheaper to train humans than MLs. It's easy to teach a human to drive, but an epic effort to teach the same to an ML. You need to tell the ML about, and let it crash in, millions of handcrafted examples of all the road situations. Afterwards, perhaps the ML will be safer than any human driver, especially those who are drunk, sleepy, watching cell phone screens, ignoring speed limits or simply mad. But training that expensive and reliable is viable for big companies only. MLs trained on the cheap will be faulty and dangerous, and only a few companies will be able to deliver reliable MLs. A trained ML can be copied in no time, unlike a brain's experience, which can't be transferred to another brain. Big providers will sell pre-trained MLs for reusable common tasks, like a "radiologist ML". The ML will complement one human expert, who remains required, and replace just the "extra" staff. A hospital will hire a single radiologist to oversee the ML, rather than dozen(s) of radiologists. The radiologist job is not extinct; there will just be fewer per hospital. Whoever trained the ML will recover the investment by selling it to many hospitals. The cost of training an ML will decrease every year, as more people learn how to train MLs. But due to data preparation and tests, reliable ML training will never end up cheap. Many tasks can be automated in theory, but in practice only a few will be worth the ML setup costs. For very uncommon jobs like ufologists, translators from ancient (dead) languages, etc., long-term human salaries will remain cheaper than the one-time cost of training an ML to replace so few people.

Humans will keep doing general AI tasks, out of ML's reach. Intelligence quotient (IQ) tests are wrong: they fail to predict people's success in life because there are many different intelligences (visual, verbal, logical, interpersonal, etc.). These cooperate in a mix, but the results can't be quantified with a single IQ number from 0 to n. We define insects as "stupid" compared to human IQ, but mosquitoes beat us all the time at the narrow "bite and escape" task. Every month, AIs beat humans at more narrow tasks, like mosquitoes do. Waiting for the "singularity" moment, when AI would beat us at everything, is silly. We are getting many narrow singularities, and once AI beats us at a task, everyone except those who oversee the AI can quit doing the task. I read here and there that humans can keep doing unique handcrafted stuff with imperfections, but really, AIs can fake errors too, learning to craft different imperfections for each piece. It's impossible to predict which tasks will be won by AI next, AI being sort of creative, but it will lack "general intelligence". An example: comedians and politicians (interchangeably) are safe, despite not requiring special (narrow) studies or degrees: they can just talk about anything in a funny or convincing way. If you specialize in a difficult but narrow and common task (like radiology), MLs will be trained to replace you. Keep general!

Thanks for reading. If you feel good, please click the like and share buttons.

For more, please read my other articles: 1) Deep Learning is not the AI future and if interested in futuristic AI geopolitics: 2) Will AI kill us all after taking our jobs?

For questions, or consulting, message me on LinkedIn, or email at fabci@anfyteam.com

Apify Marketplace Developer at Apify

6y: Any suggestion on APIs available? Two years ago I tried language recognition feature from two different AI providers, there was approx 1.5mil of fb group names in db and the task was to find the ones in English. Output was about 50% different, so for half of the names it was language "A" recognized by one api and language "B" recognized by another. Was surprised why it can be that different, after all vocabulary and math supposed to be at the same level, therefore output supposed to be more or less similar.

Sharing Economy of innovative startups & influencers, reshaping AI / Emtech at Meta Alliance. Investment events

6y: Great article. But what about capsule network, the latest AI technology?

Creative Director at boyworldwide

6y: I just created a piece about this! https://everpress.com/9dantee

Senior Software Engineer at Oracle

6y: But I must admit, AI has moved a long way. Remember programming with Prolog in earlier days compared to R in recent times (Although R was available some time back) :)