Today at Nvidia GTC 2019, the company unveiled a stunning image creator. Using generative adversarial networks, the software lets users sketch nearly photorealistic images with just a few clicks. It will instantly turn a couple of lines into a gorgeous mountaintop sunset. This is MS Paint for the AI age.

Called GauGAN, the software is just a demonstration of what’s possible with Nvidia’s neural network platforms. It’s designed to compose an image the way a human would paint, with the goal of turning a sketch into a photorealistic image in seconds. In an early demo, it seems to work as advertised.

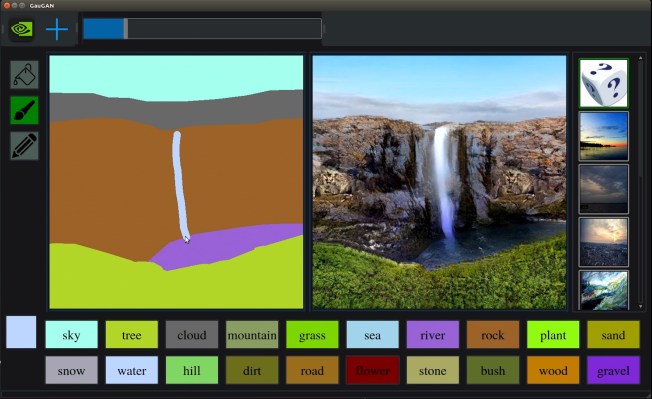

GauGAN has three tools: a paint bucket, pen and pencil. At the bottom of the screen is a series of objects. Select the cloud object and draw a line with the pencil, and the software will produce a wisp of photorealistic clouds. But these are not image stamps. GauGAN produces results unique to the input. Draw a circle and fill it with the paint bucket and the software will make puffy summer clouds.

Users can draw the shape of a tree with the input tools and the software will produce a tree. Draw a straight line and it will produce a bare trunk. Draw a bulb at the top and the software will fill it in with leaves, producing a full tree.
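To make the drawing tools concrete, here is a minimal Python sketch of how such a canvas could be represented: a grid of object labels that the pencil and paint bucket write into, which a network would then turn into pixels. The label ids and the function names (`blank_canvas`, `pencil`, `paint_bucket`) are hypothetical and chosen for illustration; Nvidia did not show GauGAN’s internals in the demo, and the image synthesis step is not modeled here.

```python
from collections import deque

# Hypothetical label ids; the demo's actual object palette is not listed here.
SKY, CLOUD, TREE = 0, 1, 2


def blank_canvas(width, height, label=SKY):
    """Create a label map: one object id per pixel."""
    return [[label] * width for _ in range(height)]


def pencil(canvas, points, label):
    """Mark individual (x, y) pixels with an object label."""
    for x, y in points:
        canvas[y][x] = label


def paint_bucket(canvas, x, y, label):
    """Flood-fill the 4-connected region containing (x, y) with a label."""
    target = canvas[y][x]
    if target == label:
        return
    height, width = len(canvas), len(canvas[0])
    canvas[y][x] = label
    queue = deque([(x, y)])
    while queue:
        cx, cy = queue.popleft()
        for nx, ny in ((cx + 1, cy), (cx - 1, cy), (cx, cy + 1), (cx, cy - 1)):
            if 0 <= nx < width and 0 <= ny < height and canvas[ny][nx] == target:
                canvas[ny][nx] = label
                queue.append((nx, ny))


# Draw a closed cloud outline with the pencil, then fill it with the bucket.
canvas = blank_canvas(7, 7)
outline = [(i, 1) for i in range(1, 6)] + [(i, 5) for i in range(1, 6)]
outline += [(1, i) for i in range(1, 6)] + [(5, i) for i in range(1, 6)]
pencil(canvas, outline, CLOUD)
paint_bucket(canvas, 3, 3, CLOUD)
```

In this toy version, the filled region is only a block of `CLOUD` labels. Turning those labels into puffy summer clouds would be the job of the trained network.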

GauGAN is also multimodal. If two users create the same sketch with the same settings, random numbers built into the project ensure that the software creates different results.
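The idea can be illustrated with a toy Python sketch: the same label map is combined with a randomly sampled "style" vector, so different random draws give different outputs. Both `sample_style` and `toy_generate` are hypothetical stand-ins written for this example. They are not GauGAN’s generator, which is a trained neural network.

```python
import random


def sample_style(seed, size=4):
    """Draw a small random vector; a fixed seed makes the draw repeatable."""
    rng = random.Random(seed)
    return [rng.gauss(0.0, 1.0) for _ in range(size)]


def toy_generate(label_map, style):
    """Stand-in for a generator: each output value depends on label and style."""
    return [
        [round(label + style[label % len(style)], 3) for label in row]
        for row in label_map
    ]


sketch = [[0, 1], [1, 2]]  # the same sketch for both users

image_a = toy_generate(sketch, sample_style(seed=1))
image_b = toy_generate(sketch, sample_style(seed=2))
image_a_again = toy_generate(sketch, sample_style(seed=1))
```

Here `image_a` and `image_b` differ even though the sketch is identical, while reusing the same seed reproduces `image_a` exactly.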

To deliver results in real time, GauGAN has to run on a Tensor computing platform. Nvidia demonstrated the software on a Titan RTX GPU, and when the operator of the demo drew a line, the software produced results instantly. However, Bryan Catanzaro, VP of Applied Deep Learning Research, stated that with some modifications, GauGAN can run on nearly any platform, including CPUs, though the results might take a few seconds to display.

In the demo, the boundaries between objects are not perfect, and the team behind the project says it will improve them. There is a slight line where two objects touch. Nvidia calls the results photorealistic, but under scrutiny they don’t hold up. Neural networks currently struggle with the gap between the objects they were trained on and what the network is trained to do. This project hopes to narrow that gap.

Nvidia turned to 1 million images on Flickr to train the neural network. Most came from Flickr’s Creative Commons, and Catanzaro said the company only uses images with permission. The company says this program can synthesize hundreds of thousands of objects and their relation to other objects in the real world. In GauGAN, change the season and the leaves will disappear from the branches. Or if there’s a pond in front of a tree, the tree will be reflected in the water.

Nvidia will release the white paper today. Catanzaro noted that it was previously accepted to CVPR 2019.

Catanzaro hopes this software will be available on Nvidia’s new AI Playground, but says there is a bit of work the company needs to do in order to make that happen. He sees tools like this being used in video games to create more immersive environments, but notes Nvidia does not directly build software to do so.

It’s easy to bemoan the ease with which this software could be used to produce inauthentic images for nefarious purposes. And Catanzaro agrees this is an important topic, noting that it’s bigger than one project and company. “We care about this a lot because we want to make the world a better place,” he said, adding that this is a trust issue instead of a technology issue and that we, as a society, must deal with it as such.

Even in this limited demo, it’s clear that software built around these abilities would appeal to everyone from video game designers to architects to casual gamers. The company has no plans to release it commercially, but could soon release a public trial to let anyone use the software.