This post has been updated.

The photograph shows three malformed daisies, warped as if plucked from a Salvador Dali painting. An accompanying caption claims that the photo was taken near the site of the Fukushima nuclear disaster in Japan, noting, “This is what happens when flowers get nuclear birth defects.”

In 2015, 170 American high-school students were asked a simple question: Does the photo provide evidence that the caption is true?

The image looked sketchy, to say the least. It was posted on Imgur, a photo-sharing platform, by a user with the handle “pleasegoogleShakerAamerpleasegoogleDavidKelly.” It carried no attribution: no photographer, no original publisher, no location. And yet, when asked whether the photo provided evidence that the Fukushima nuclear disaster caused malformations in daisies, nearly 40% of the students said yes. (As an aside, the photo was indeed real, but scientists say it’s unlikely the malformations were caused by radiation.)

The experiment was part of a larger study conducted by researchers from Stanford University’s History Education Group (HEG), who set out to measure what they called “civic online reasoning”—that is, young people’s ability to judge the credibility of the information they find online. To do this, they designed 56 different assessments for students in middle schools, high schools, and colleges across 12 states. The researchers say they collected 7,804 student responses.

In their own words, the researchers initially found themselves “rejecting ideas for tasks because we thought they would be too easy”; in other words, they assumed students would find it obvious whether or not information was reliable. They could not have been more wrong. After an initial pilot round, the researchers realized that most students lacked the basic ability to distinguish credible information from partisan junk online, or to tell sponsored content apart from real articles. As the team later wrote in their report, “many assume that because young people are fluent in social media they are equally savvy about what they find there. Our work shows the opposite.”

The Stanford study confirms what many teachers know to be true: Today’s students are not prepared to deal with the flood of information coming at them from their various digital devices. The stakes of the problem are high. As the past few years have shown, biased reporting and outright fake news can sway elections and referendums or lead to tragic real-life consequences. The online spread of the #Pizzagate conspiracy theory, for example, led to a shooting at a DC pizzeria by a man who was convinced that Hillary Clinton ran a child-trafficking ring there. As the Stanford researchers wrote in their report, “democracy is threatened by the ease at which disinformation about civic issues is allowed to spread and flourish.”

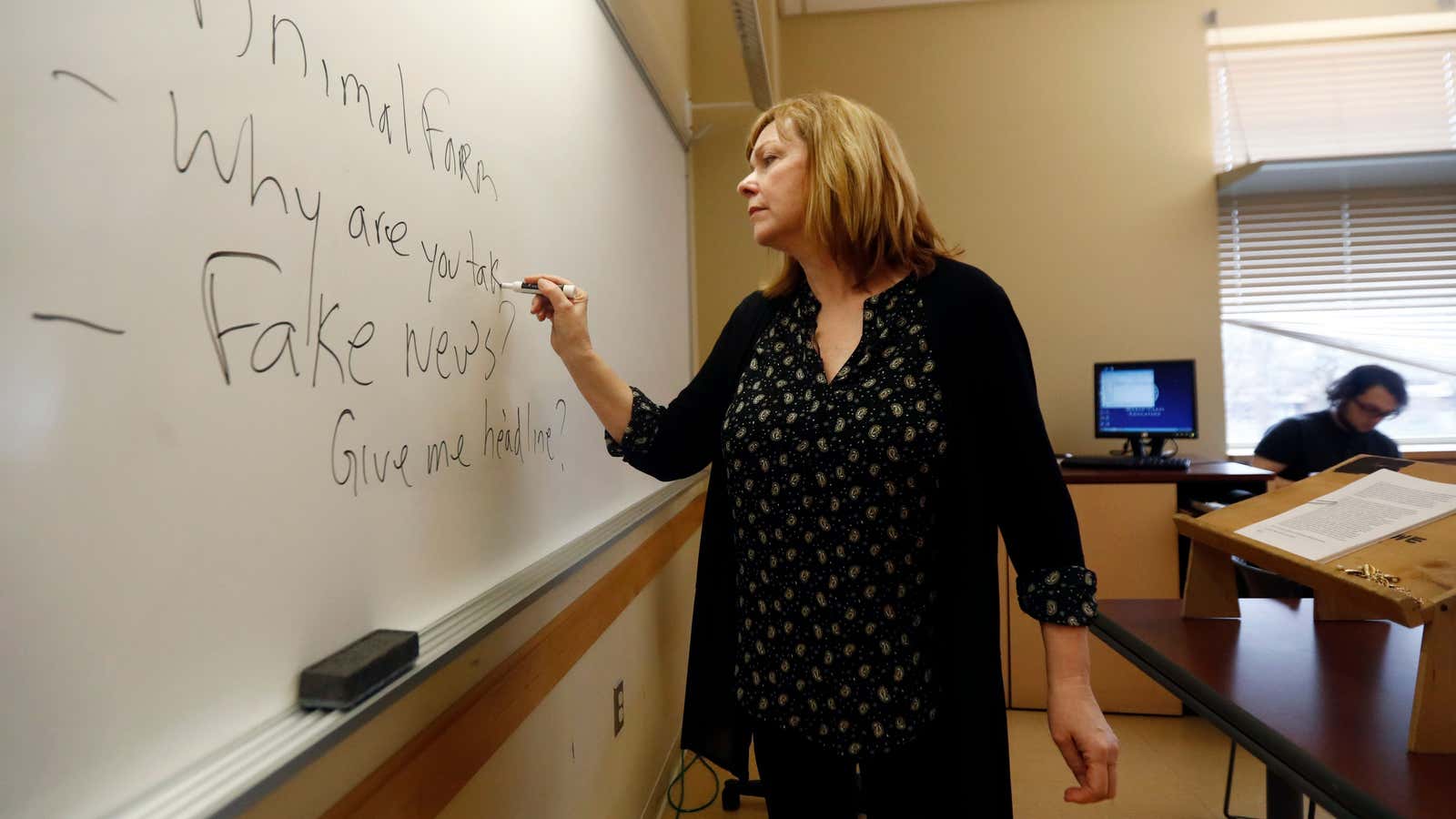

Schools in states including California, Iowa, New York, Hawaii, and Arizona are now at the center of a nascent effort to teach kids at a young age how to evaluate the stories they encounter online, as teachers, school districts, and nonprofits attempt to design curricula, apps, and assessments that can prepare students to become more critical consumers of information.

The lure of fake news

In the wake of the 2016 election, it’s easy to think of the problem as a simple binary between real and fake news. If we could just teach kids to tell those two apart, the thinking goes, the problem would be solved.

But Sam Wineburg, a professor of education and history at Stanford University and the founder of HEG, says it’s far more complicated than that. “‘Fake news’ conjures up Russian bots and troll farms in Saint Petersburg,” he says. The real problem, he says, is “how do we know who is producing the information that we’re consuming, and secondly, is that information reliable?”

For example, in Europe, there’s Russia Today and Sputnik, two government-funded news outlets. Both spread fake stories or unsubstantiated rumors about European politicians and regularly “pump out gloom about Europe, cheer about Russia and boosterism for pro-Russian populist parties,” according to The Economist (paywall). Russia Today and Sputnik may seem to have the trappings of respectability, with sizable newsrooms churning out content translated into five languages. But their articles are motivated by a government agenda.

Meanwhile, it’s true that social media and the ubiquity of digital platforms have made spreading false or biased information easier. But the core issue isn’t just technology, and it can’t be solved with better fake-news filters or algorithms either. As Richard Hornik, director of overseas partnership programs at the Center for News Literacy at Stony Brook University, explains, “this is a human problem. This is us.”

In other words, people seem to be irresistibly drawn to fake news. Robinson Meyer writes in The Atlantic that “Fake news and false rumors reach more people, penetrate deeper into the social network, and spread much faster than accurate stories” because humans are drawn to those stories’ sense of novelty and the strong emotions they elicit, from fear to disgust and surprise. As the authors of a large MIT study wrote in 2018, fake news does so well online “because humans, not robots, are more likely to spread it.”

What’s more, “fake news is nothing new,” says Kelly Mendoza, senior director for education programs at Common Sense Education, an educator-focused branch of the digital nonprofit Common Sense Media. In 1672, King Charles II of England issued a “Proclamation To Restrain the Spreading of False News.” And during World War II, Nazi-run broadcasters spread fake news about the war to occupied people across Europe. As Jackie Mansky writes in Smithsonian, “[Fake news has] been part of the conversation as far back as the birth of the free press.” Still, says Mendoza, “we are at a unique moment because when something was on print, it could only spread so far and wide … And now, digitally, information can spread exponentially and it’s really easy to spread something that’s not true.”

The real problem is that we haven’t developed the skills to absorb, assess, and sort the unprecedented amounts of information coming from new technologies. We are letting our digital platforms, from our phones to our computers and social media, rule us. Or, as Wineburg says, “The tools right now have an upper hand.”

The problem with checklists

At the policy level, several state legislatures, including those of New Mexico, Rhode Island, Connecticut, and California, have passed laws calling on schools to teach media and news literacy. But critics have said funding for these programs is in short supply, and teachers are already overworked.

Some private foundations and nonprofits like Common Sense Education have developed checklists and other kinds of resources for educators to teach their students how to spot fake or biased information online. Those checklists, including the CRAAP Test (pdf) and the AAOCC criteria, focus on evaluating a given information source’s authority, credibility, currency, and purpose. They provide up to 30 questions, like “Is the author qualified to write on the topic?” or “Does the writing use inflammatory or biased language?”

But some teachers say these resources aren’t really helping their students. As Joanna Petrone, a teacher in California, writes in The Outline:

“To the extent that teachers and librarians have been training students to spot ‘fake news’ and evaluate websites, we have been doing so using an outdated checklist approach that does more harm than good. Checklists…provide students with long lists of items for them to check off to verify a website as credible, but many of the items on the list can be poor indicators of reliability and even mislead students into a false sense of confidence in their own abilities to spot a lie.”

For example, in a 2017 working paper, Wineburg and his colleague Sarah McGrew studied the fact-checking process of 10 PhD historians, 10 professional fact-checkers, and 25 Stanford University undergraduate students. They found that the professional fact-checkers were about twice as successful as historians at evaluating the trustworthiness of two different online sources on school bullying, and five times more successful than students.

The authors explained that fact-checkers practiced “lateral reading,” meaning that they checked other available resources instead of staying only on the site at hand. That, they concluded, is a practice at odds with available fake-news checklists, which focus on the outward characteristics of a website, like its “about” page or its logo, and don’t encourage students to look for outside sources. “Designating an author, throwing together a reference list, and making sure a site is free of typos doesn’t confer credibility,” they write. “When the Internet is characterized by polished web design, search engine optimization, and organizations vying to appear trustworthy, such guidelines create a false sense of security.”

Moreover, as Petrone and others have pointed out, the checklists available to teachers often focus on abstract skills like critical thinking, which Wineburg says is not the right way to go. “The people who say ‘all we need are critical thinkers,’ I’m sorry, I could […] raise Socrates from the dead and he still wouldn’t know how to choose keywords, and he would know nothing about search engine optimization, and he would not know how to interpret the difference between a ‘.org’ and a ‘.com.’”

Ultimately, as Petrone writes, 21st-century citizens need more than a checklist—they “need a functioning bullshit detector.”

Building a bullshit detector

A better approach, according to experts like Hornik, would be to teach kids at a young age the skills of lateral reading, including how to “interrogate information instead of simply consuming it,” “verify information before sharing it,” “reject rank and popularity as a proxy for reliability,” “understand that the sender of information is often not its source,” and “acknowledge the implicit prejudices we all carry.” Anything short of that is a waste of time and resources.

“There’s been a lot of initiatives, there’s a lot of money being spent, but I don’t think it’s being spent in the right places to do the right things,” Hornik explains. He says more pilot programs need to be developed and tested for their efficacy by a “central clearing house” as part of a “national, coordinated effort.”

In the short term, experts say schools should teach kids the basic skills of fact-checking and train teachers to apply that knowledge to classroom learning across every subject. Schools that offer some sort of news literacy curriculum usually do so as a standalone course, or as part of the school’s civics curriculum. But fake news plagues every single field of study, from math to history to natural sciences, and schools need to think about how this problem affects every part of their curriculum. In a math class, this may mean teaching students how to recognize a deceptively framed chart. In a history class, students might analyze wartime propaganda to learn how information can be weaponized.

Another possible model can be drawn from interdisciplinary programs that go beyond news literacy skills. These programs show students how search optimization and algorithms shape the content they see online, and teach them to recognize why their brains are more vulnerable to articles that trigger emotional responses. For example, in Ukraine, a nonprofit called IREX designed a program called Learn to Discern (L2D), which sought to train people to spot manipulative headlines and content and to look at the ownership structure of major newspapers in order to better understand “how the news media industry is structured and operates.” In a follow-up assessment given to 412 people, the group found that those who took the L2D training outperformed those in the control group who didn’t. When shown some articles and asked to evaluate what was true and what was questionable, 63.8% of those who went through L2D answered correctly, compared to 56.5% of the control group.

As Hornik points out in an article for the Harvard Business Review, “That’s not much improvement.” But it’s a start. After all, researchers at the Center for News Literacy at Stony Brook University found that the effects from their programming faded within a year of participants taking the course or training. (Though another more recent assessment found that the effects of the literacy curriculum did not fade over time.) The IREX assessment was conducted a year and a half after the initial training.

This leads to a larger question: How can we know how well any of these programs are working, and which ones are working the best? Experts differ in their answers, though they all agree that changing students’ news consumption practices will take years. Says Wineburg: “We need the educational equivalent of the Human Genome Project, which involved billions of dollars, a decade of work, thousands of scientists, and international cooperation.”