Abstract

Considering recent advancements and successes in the development of efficient quantum algorithms for electronic structure calculations, alongside impressive results using machine learning techniques for computation, hybridizing quantum computing with machine learning to perform electronic structure calculations is a natural progression. Here we report a hybrid quantum algorithm employing a restricted Boltzmann machine to obtain accurate molecular potential energy surfaces. By exploiting a quantum algorithm to help optimize the underlying objective function, we obtained an efficient procedure for the calculation of the electronic ground state energy of small molecular systems. Our approach achieves high accuracy for the ground state energies of H2, LiH, and H2O at specific locations on their potential energy surfaces with a finite basis set. With the future availability of larger-scale quantum computers, quantum machine learning techniques are set to become powerful tools to obtain accurate values for electronic structures.

Introduction

Machine learning techniques are demonstrably powerful tools displaying remarkable success in compressing high dimensional data1,2. These methods have been applied to a variety of fields in both science and engineering, from computing excitonic dynamics3, energy transfer in light-harvesting systems4, molecular electronic properties5, and surface reaction networks6, to learning density functional models7, classifying phases of matter, and simulating classical and complex quantum systems8,9,10,11,12,13,14. Modern machine learning techniques have been used in the state space of complex condensed-matter systems for their ability to analyze and interpret exponentially large data sets9 and to speed up searches for novel energy generation/storage materials15,16.

Quantum machine learning17, the hybridization of classical machine learning techniques with quantum computation, is emerging as a powerful approach that allows quantum speed-ups and improves classical machine learning algorithms18,19,20,21,22. Recently, Wiebe et al.23 have shown that quantum computing is capable of reducing the time required to train a restricted Boltzmann machine (RBM), while also providing a richer framework for deep learning than its classical analog. The standard RBM models the probability of a given configuration of visible and hidden units by the Gibbs distribution, with interactions restricted to units in different layers. Here, we focus on an RBM where the visible and hidden units take values in {+1,−1}24,25.

Accurate electronic structure calculations for large systems continue to be a challenging problem in the fields of chemistry and materials science. Toward this goal, in addition to the impressive progress in developing classical algorithms based on ab initio and density functional methods, quantum computing based simulations have been explored26,27,28,29,30,31. Recently, Kivlichan et al.32 showed that a particular arrangement of gates (a fermionic swap network) makes it possible to simulate the electronic structure Hamiltonian with linear depth and connectivity. These results present a significant improvement in the cost of quantum simulation for both variational and phase estimation based quantum chemistry simulation methods.

Recently, Troyer and coworkers proposed using a restricted Boltzmann machine to solve quantum many-body problems, for both stationary states and time evolution of the quantum Ising and Heisenberg models24. However, this simple approach has to be modified for cases where the wave function’s phase is required for accurate calculations25.

Herein, we propose a three-layered RBM structure that includes the visible and hidden layers, plus a new layer that corrects the signs of the coefficients of the basis functions of the wave function. We will show that this model has the potential to solve complex quantum many-body problems and to obtain very accurate results for simple molecules as compared with the results calculated with a finite minimal basis set, STO-3G. We also employ a quantum algorithm to help optimize the training procedure.

Results

Three-layer restricted Boltzmann machine

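We begin by briefly outlining the original RBM structure as described in ref. 24. For a given Hamiltonian, H, and a trial state, \(|\phi \rangle = \mathop {\sum}\nolimits_x \phi (x)|x\rangle \), the expectation value can be written as24:

\[ \langle H\rangle = \frac{\langle \phi |H|\phi \rangle }{\langle \phi |\phi \rangle } = \frac{\sum_{x,x'} \overline{\phi (x)}\,\langle x|H|x'\rangle \,\phi (x')}{\sum_x |\phi (x)|^2} \tag{1} \]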

where ϕ(x) = 〈x|ϕ〉 will be used throughout this letter to express the overlap of the complete wave function with the basis function |x〉, and \(\overline {\phi (x)} \) is the complex conjugate of ϕ(x).

We can map the above to an RBM model with visible layer units \(\sigma _1^z,\,\sigma _2^z,\,\ldots ,\,\sigma _n^z\) and hidden layer units h1, h2, ..., hm with \(\sigma _i^z\), hj ∈ {−1, 1}. We use a visible unit \(\sigma _i^z\) to represent the state of qubit i: when \(\sigma_{i}^{z}=1\), \(|\sigma_{i}^{z}\rangle\) represents qubit i in state \(|1\rangle\), and when \(\sigma_{i}^{z}=-1\), \(|\sigma_{i}^{z}\rangle\) represents qubit i in state \(|0\rangle\). The total state of n qubits is represented by the basis \(|x\rangle = \left| {\sigma _1^z\sigma _2^z \ldots \sigma _n^z} \right\rangle \). We set \(\phi (x) = \sqrt {P(x)} \), where P(x) is the probability of x in the distribution determined by the RBM. The probability of a specific set \(x = \{ \sigma _1^z,\sigma _2^z, \ldots ,\sigma _n^z\} \) is:

\[ P(x) = \frac{\sum_{\{ h\} } e^{\sum_i a_i\sigma _i^z + \sum_j b_jh_j + \sum_{i,j} w_{ij}\sigma _i^zh_j}}{\sum_{x'} \sum_{\{ h\} } e^{\sum_i a_i\sigma _i^{z\prime } + \sum_j b_jh_j + \sum_{i,j} w_{ij}\sigma _i^{z\prime }h_j}} \tag{2} \]

In the expression above, ai and bj are trainable weights for the units \(\sigma _i^z\) and hj, and wij are trainable weights describing the connections between \(\sigma _i^z\) and hj (see Fig. 1).

Fig. 1 Constructions of restricted Boltzmann machines. a The original restricted Boltzmann machine (RBM) structure with visible \(\sigma _i^z\) and hidden hj layers. b Improved RBM structure with three layers: visible, hidden and sign. ai, wij, bj, di, c are trainable weights describing the different connections between layers.

By setting 〈H〉 as the objective function of this RBM, we can use the standard gradient descent method to update the parameters, effectively minimizing 〈H〉 to obtain the ground state energy.
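As a minimal sketch of the quantities described above, the following Python code evaluates the RBM marginal P(x) by brute-force enumeration of the hidden configurations and then the energy expectation with ϕ(x) = √P(x). The function names, the toy single-qubit Hamiltonian and the zero-valued parameters are illustrative assumptions rather than part of the original work, and exhaustive enumeration is only practical for a handful of units.

```python
import itertools
import math


def rbm_marginal(a, b, w):
    """Return (configs, probs): P(x) for every visible configuration x in {-1,+1}^n.

    The Gibbs weight exp(sum_i a_i s_i + sum_j b_j h_j + sum_ij w_ij s_i h_j)
    is summed over all hidden configurations h by brute force.
    """
    n, m = len(a), len(b)
    configs = list(itertools.product((-1, 1), repeat=n))  # -1 <-> |0>, +1 <-> |1>
    weights = []
    for x in configs:
        total = 0.0
        for h in itertools.product((-1, 1), repeat=m):
            e = sum(a[i] * x[i] for i in range(n))
            e += sum(b[j] * h[j] for j in range(m))
            e += sum(w[i][j] * x[i] * h[j] for i in range(n) for j in range(m))
            total += math.exp(e)
        weights.append(total)
    z = sum(weights)
    return configs, [v / z for v in weights]


def expectation(H, amp):
    """<H> = sum_{x,x'} amp[x] H[x][x'] amp[x'] / sum_x amp[x]^2 for real amplitudes."""
    dim = len(amp)
    num = sum(amp[r] * H[r][c] * amp[c] for r in range(dim) for c in range(dim))
    return num / sum(v * v for v in amp)


# Toy example (hypothetical values): one visible unit, two hidden units, H = -sigma_x.
configs, probs = rbm_marginal(a=[0.0], b=[0.0, 0.0], w=[[0.0, 0.0]])
phi = [math.sqrt(p) for p in probs]
H = [[0.0, -1.0], [-1.0, 0.0]]
energy = expectation(H, phi)
```

With all parameters set to zero the marginal is uniform, and for this toy Hamiltonian the uniform non-negative state is already the ground state, so the energy equals −1 up to rounding.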

However, previous prescriptions for using RBMs on electronic structure problems have run into difficulty because ϕ(x) can only take non-negative values. We have thus appended an additional layer to the neural network architecture to compensate for the lack of sign features specific to electronic structure problems.

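We propose an RBM with three layers. The first layer, σz, describes the parameters building the wave function. The h's within the second layer are parameters for the coefficients of the wave function, and the third layer, s, represents the signs associated with |x〉:

\[ s(x) = s\left( \sigma _1^z,\sigma _2^z, \ldots ,\sigma _n^z \right) = \tanh \left( \sum_i d_i\sigma _i^z + c \right) \tag{3} \]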

The sign function s uses the non-linear function tanh to classify whether the sign should be positive or negative. Because we have added another function for the coefficients, the distribution is no longer decided solely by the RBM; the sign function must also be included in the distribution. Within this scheme, c is an offset term and di are the weights for \(\sigma _i^z\) (see Fig. 1). Our final objective function, now with \(|\phi \rangle = \mathop {\sum}\nolimits_x \phi (x)s(x)|x\rangle \), becomes:

\[ \langle H\rangle = \frac{\sum_{x,x'} \overline{\phi (x)s(x)}\,\langle x|H|x'\rangle \,\phi (x')s(x')}{\sum_x |\phi (x)s(x)|^2} \tag{4} \]

After setting the objective function, the learning procedure is performed by sampling to get the distribution of ϕ(x) and by direct calculation to get s(x). We then calculate the joint distribution determined by ϕ(x) and s(x). The gradients are determined by the joint distribution, and we use the gradient descent method to optimize 〈H〉 (see Supplementary Note 1). Calculating the joint distribution is efficient because s(x) depends only on x.
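The role of the sign layer can be illustrated with a small Python sketch. It assumes a single visible unit, fixed non-negative amplitudes ϕ(x), and the toy Hamiltonian H = +σx, whose ground state needs amplitudes of opposite sign; the function names and all numerical values are hypothetical choices made for illustration only.

```python
import math


def sign_layer(x, d, c):
    """s(x) = tanh(sum_i d_i * sigma_i + c) for a configuration x in {-1,+1}^n."""
    return math.tanh(sum(di * xi for di, xi in zip(d, x)) + c)


def energy(H, configs, phi, d, c):
    """<H> for the signed amplitudes phi(x) * s(x), all real."""
    amp = [p * sign_layer(x, d, c) for p, x in zip(phi, configs)]
    dim = len(amp)
    num = sum(amp[r] * H[r][k] * amp[k] for r in range(dim) for k in range(dim))
    return num / sum(v * v for v in amp)


# Toy example (hypothetical values): H = +sigma_x, whose ground state
# (|0> - |1>)/sqrt(2) has energy -1 and needs amplitudes of opposite sign.
configs = [(-1,), (1,)]                 # -1 <-> |0>, +1 <-> |1>
phi = [math.sqrt(0.5), math.sqrt(0.5)]  # non-negative RBM amplitudes
H = [[0.0, 1.0], [1.0, 0.0]]

e_same_sign = energy(H, configs, phi, d=[0.0], c=1.0)  # s(x) > 0 for both x
e_signed = energy(H, configs, phi, d=[1.0], c=0.0)     # s(-1) < 0 < s(+1)
```

With equal-sign amplitudes the energy stays at +1, whereas letting tanh assign opposite signs to the two basis states reaches the ground state energy of −1.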

Electronic structure Hamiltonian preparation

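The electronic structure is represented by N single-particle orbitals which can be empty or occupied by a spinless electron33:

\[ H = \sum_{i,j} h_{ij}a_i^\dagger a_j + \frac{1}{2}\sum_{i,j,k,l} h_{ijkl}a_i^\dagger a_j^\dagger a_ka_l \tag{5} \]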

where hij and hijkl are one and two-electron integrals. In this study we use the minimal basis (STO-3G) to calculate them. \(a_j^\dagger \) and aj are creation and annihilation operators for the orbital j.

Equation (5) is then transformed to a Pauli-matrix representation by the Jordan-Wigner transformation34. The final electronic structure Hamiltonian takes the following general form, with \(\sigma _\alpha ^i \in \left\{ {\sigma _x,\sigma _y,\sigma _z,I} \right\}\), where σx, σy, σz are Pauli matrices and I is the identity matrix35:

\[ H = \sum_{i,\alpha } h_\alpha ^i\sigma _\alpha ^i + \sum_{i,j,\alpha ,\beta } h_{\alpha \beta }^{ij}\sigma _\alpha ^i\sigma _\beta ^j + \ldots \tag{6} \]
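To make the Pauli-matrix form concrete, here is a short Python sketch that assembles the dense matrix of a Hamiltonian given as a sum of Pauli strings. It does not perform the Jordan-Wigner transformation itself (the paper uses OpenFermion and Psi4 for that step); the dictionary format, the function names and the two coefficients are hypothetical and are not molecular integrals.

```python
# Single-qubit operators as nested lists (row-major).
PAULI = {
    "I": [[1, 0], [0, 1]],
    "X": [[0, 1], [1, 0]],
    "Y": [[0, -1j], [1j, 0]],
    "Z": [[1, 0], [0, -1]],
}


def kron(A, B):
    """Kronecker product of two matrices stored as nested lists."""
    return [[a * b for a in row_a for b in row_b] for row_a in A for row_b in B]


def pauli_string_matrix(label):
    """Matrix of a Pauli string such as 'ZI' (leftmost letter acts on qubit 1)."""
    mat = [[1]]
    for letter in label:
        mat = kron(mat, PAULI[letter])
    return mat


def hamiltonian_matrix(terms):
    """Sum coeff * PauliString over a dict {label: coeff}; all labels share one length."""
    n_qubits = len(next(iter(terms)))
    dim = 2 ** n_qubits
    H = [[0j] * dim for _ in range(dim)]
    for label, coeff in terms.items():
        P = pauli_string_matrix(label)
        for r in range(dim):
            for c in range(dim):
                H[r][c] += coeff * P[r][c]
    return H


# Hypothetical two-qubit example: one single-qubit term and one two-qubit term.
H = hamiltonian_matrix({"ZI": 0.5, "XX": 0.25})
```

The resulting matrix is what an exact diagonalization would act on; its dimension grows as 2^n with the number of qubits n, which is why this dense form is only usable for very small systems.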

Quantum algorithm to sample Gibbs distribution

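We propose a quantum algorithm to sample the distribution determined by the RBM. The probability of each combination y = {σz, h} can be written as:

\[ P(y) = \frac{e^{\sum_i a_i\sigma _i^z + \sum_j b_jh_j + \sum_{i,j} w_{ij}\sigma _i^zh_j}}{\sum_{y'} e^{\sum_i a_i\sigma _i^{z\prime } + \sum_j b_jh_j^\prime + \sum_{i,j} w_{ij}\sigma _i^{z\prime }h_j^\prime }} \tag{7} \]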

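Instead of P(y), we sample the distribution Q(y), defined as:

\[ Q(y) = \frac{e^{\frac{1}{k}\left( \sum_i a_i\sigma _i^z + \sum_j b_jh_j + \sum_{i,j} w_{ij}\sigma _i^zh_j \right)}}{\sum_{y'} e^{\frac{1}{k}\left( \sum_i a_i\sigma _i^{z\prime } + \sum_j b_jh_j^\prime + \sum_{i,j} w_{ij}\sigma _i^{z\prime }h_j^\prime \right)}} \tag{8} \]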

where k is an adjustable constant with different values for each iteration and is chosen to increase the probability of successful sampling. In our simulation, it is chosen as \(O\big( {\mathop {\sum}\nolimits_{i,j} |w_{ij}|} \big)\).

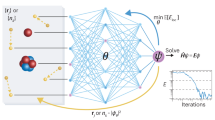

We employed a quantum algorithm to sample the Gibbs distribution from the quantum computer. This algorithm is based on sequential applications of controlled-rotation operations, which tries to calculate a distribution Q′(y) ≥ Q(y) with an ancilla qubit showing whether the sampling for Q(y) is successful23.

This two-step algorithm uses one system register (with n + m qubits in use) and one scratchpad register (with one qubit in use) as shown in Fig. 2.

All qubits are initialized as |0〉 at the beginning. The first step is to use Ry gates to get a superposition of all combinations of {σz, h}, with \(\theta _i = 2\arcsin \left( {\sqrt {\frac{{e^{a_i/k}}}{{e^{a_i/k} + e^{ - a_i/k}}}} } \right)\) and \(\gamma _j = 2\arcsin \left( {\sqrt {\frac{{e^{b_j/k}}}{{e^{b_j/k} + e^{ - b_j/k}}}} } \right)\):

\[ \bigotimes_i R_y(\theta _i)|0_i\rangle \bigotimes_j R_y(\gamma _j)|0_j\rangle \,|0\rangle = \sum_y \sqrt {O(y)} \,|y\rangle |0\rangle \tag{9} \]

where \(O(y) = \frac{{e^{\sum_i a_i\sigma _i^z/k + \sum_j b_jh_j/k}}}{{\sum_{y\prime } e^{\sum_i a_i\sigma _i^{z\prime }/k + \sum_j b_jh_j^\prime /k}}}\) and |y〉 corresponds to the combination \(|y\rangle = |\sigma _1^z \ldots \sigma _n^zh_1 \ldots h_m\rangle \). As before, when \(h_{j}=1\), \(|h_{j}\rangle\) represents the corresponding qubit in state \(|1\rangle\), and when \(h_{j}=-1\), \(|h_{j}\rangle\) represents the corresponding qubit in state \(|0\rangle\).
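The first step can be checked numerically with a short Python sketch. It uses the standard action Ry(θ)|0〉 = cos(θ/2)|0〉 + sin(θ/2)|1〉 and the angle formulas quoted above, and confirms that the measurement probabilities of the resulting product state reproduce O(y). The function names and the bias values are hypothetical.

```python
import itertools
import math


def ry_angle(bias, k):
    """Angle theta with sin^2(theta/2) = e^{bias/k} / (e^{bias/k} + e^{-bias/k})."""
    p_one = math.exp(bias / k) / (math.exp(bias / k) + math.exp(-bias / k))
    return 2.0 * math.asin(math.sqrt(p_one))


def product_distribution(biases, k):
    """Measurement probabilities after Ry(theta_l) acts on each qubit prepared in |0>.

    Ry(theta)|0> = cos(theta/2)|0> + sin(theta/2)|1>, and unit value +1 <-> |1>.
    """
    angles = [ry_angle(v, k) for v in biases]
    dist = {}
    for y in itertools.product((-1, 1), repeat=len(biases)):
        p = 1.0
        for unit, theta in zip(y, angles):
            p *= math.sin(theta / 2) ** 2 if unit == 1 else math.cos(theta / 2) ** 2
        dist[y] = p
    return dist


def target_distribution(biases, k):
    """O(y) = exp(sum_l bias_l * y_l / k) / normalisation."""
    weights = {y: math.exp(sum(v * u for v, u in zip(biases, y)) / k)
               for y in itertools.product((-1, 1), repeat=len(biases))}
    z = sum(weights.values())
    return {y: v / z for y, v in weights.items()}


# Hypothetical biases: a_1, a_2 for two visible units followed by b_1 for one hidden unit.
biases, k = [0.3, -0.1, 0.2], 2.0
prepared = product_distribution(biases, k)
expected = target_distribution(biases, k)
max_gap = max(abs(prepared[y] - expected[y]) for y in prepared)
```

The agreement follows because O(y) contains no coupling terms and therefore factorizes into independent single-qubit probabilities.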

The second step is to calculate \(e^{w_{ij}\sigma _i^zh_j}\). We use controlled-rotation gates to achieve this. The idea of sequential controlled-rotation gates is to check whether the target qubit is in state |0〉 or state |1〉 and then rotate by the corresponding angle (Fig. 2). If the qubits \(\sigma _i^z h_j\) are in |00〉 or |11〉, the ancilla qubit is rotated by Ry(θij,1), and otherwise by Ry(θij,2), with \(\theta _{ij,1} = 2\arcsin \left( {\sqrt {\frac{{e^{w_{ij}/k}}}{{e^{\left| {w_{ij}} \right|/k}}}} } \right)\) and \(\theta _{ij,2} = 2\arcsin \left( {\sqrt {\frac{{e^{ - w_{ij}/k}}}{{e^{|w_{ij}|/k}}}} } \right)\). Each time one \(e^{w_{ij}\sigma _i^zh_j}\) has been calculated, we measure the ancilla qubit. If it is in |1〉 we continue with a new ancilla qubit initialized in |0〉; otherwise we start over from the beginning (details in Supplementary Note 2).
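The effect of the ancilla measurements can be sketched classically in Python. Under the assumption that a run is kept only when every ancilla is measured in |1〉, each pair (i, j) contributes an acceptance factor sin²(θ/2), and the distribution of the kept runs should be proportional to the prior exp((Σ aiσi + Σ bjhj)/k) times these factors. The code below checks by exact enumeration that this coincides with Q(y); the function names and parameter values are hypothetical, and the sketch does not simulate the quantum circuit itself.

```python
import itertools
import math


def rotation_angles(w_ij, k):
    """Return (theta_1, theta_2): angles used when sigma_i * h_j = +1 and -1."""
    scale = math.exp(abs(w_ij) / k)
    theta_1 = 2.0 * math.asin(math.sqrt(math.exp(w_ij / k) / scale))
    theta_2 = 2.0 * math.asin(math.sqrt(math.exp(-w_ij / k) / scale))
    return theta_1, theta_2


def accept_probability(s, h, w, k):
    """Probability that every ancilla measurement returns |1> for fixed (s, h)."""
    p = 1.0
    for i, si in enumerate(s):
        for j, hj in enumerate(h):
            theta_1, theta_2 = rotation_angles(w[i][j], k)
            theta = theta_1 if si * hj == 1 else theta_2
            p *= math.sin(theta / 2) ** 2  # ancilla Ry(theta)|0> measured in |1>
    return p


def accepted_distribution(a, b, w, k):
    """Distribution over y = (s, h) conditioned on all ancilla checks succeeding."""
    n, m = len(a), len(b)
    weights = {}
    for y in itertools.product((-1, 1), repeat=n + m):
        s, h = y[:n], y[n:]
        prior = math.exp((sum(x * u for x, u in zip(a, s))
                          + sum(x * u for x, u in zip(b, h))) / k)
        weights[y] = prior * accept_probability(s, h, w, k)
    z = sum(weights.values())
    return {y: v / z for y, v in weights.items()}


def q_distribution(a, b, w, k):
    """Q(y): the Gibbs weights of the RBM with every exponent divided by k."""
    n, m = len(a), len(b)
    weights = {}
    for y in itertools.product((-1, 1), repeat=n + m):
        s, h = y[:n], y[n:]
        e = sum(x * u for x, u in zip(a, s)) + sum(x * u for x, u in zip(b, h))
        e += sum(w[i][j] * s[i] * h[j] for i in range(n) for j in range(m))
        weights[y] = math.exp(e / k)
    z = sum(weights.values())
    return {y: v / z for y, v in weights.items()}


# Hypothetical parameters: two visible units, one hidden unit.
a, b, w, k = [0.4, -0.3], [0.1], [[0.5], [-0.7]], 1.2
kept = accepted_distribution(a, b, w, k)
target = q_distribution(a, b, w, k)
max_gap = max(abs(kept[y] - target[y]) for y in kept)
```

The constant factors exp(−|wij|/k) are the same for every y and cancel on normalization, which is why the kept runs follow Q(y).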

After all measurements are finished, the final state of the first n + m qubits follows the distribution Q(y). We then measure these n + m qubits of the system register to obtain the probability distribution. Once we have the distribution, we raise all probabilities to the power of k and normalize to get the Gibbs distribution (Fig. 3).
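The final post-processing step can be sketched in Python as follows. Starting from an exact Q(y), raising each probability to the power k and renormalizing recovers the Gibbs distribution P(y). The function names and parameter values are hypothetical; with a sampled estimate of Q(y) the power k would also amplify statistical error, which this exact sketch does not model.

```python
import itertools
import math


def gibbs(a, b, w, k=1.0):
    """Gibbs distribution over y = (s, h) with every exponent divided by k.

    k = 1 gives P(y); k > 1 gives the flattened distribution Q(y).
    """
    n, m = len(a), len(b)
    weights = {}
    for y in itertools.product((-1, 1), repeat=n + m):
        s, h = y[:n], y[n:]
        e = sum(a[i] * s[i] for i in range(n))
        e += sum(b[j] * h[j] for j in range(m))
        e += sum(w[i][j] * s[i] * h[j] for i in range(n) for j in range(m))
        weights[y] = math.exp(e / k)
    z = sum(weights.values())
    return {y: v / z for y, v in weights.items()}


def recover_gibbs(q, k):
    """Raise every probability to the power k and renormalise."""
    powered = {y: p ** k for y, p in q.items()}
    z = sum(powered.values())
    return {y: v / z for y, v in powered.items()}


# Hypothetical parameters: two visible units, one hidden unit.
a, b, w = [0.4, -0.3], [0.1], [[0.5], [-0.7]]
k = sum(abs(v) for row in w for v in row)  # k chosen as the sum of |w_ij|
p_exact = gibbs(a, b, w)
p_recovered = recover_gibbs(gibbs(a, b, w, k), k)
max_gap = max(abs(p_exact[y] - p_recovered[y]) for y in p_exact)
```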

The gate complexity is O(mn) for one sampling, and the qubit requirement is O(mn). If ancilla qubits are reused, the qubit requirement reduces to O(m + n) (see Supplementary Note 4). The probability of one successful sampling has the lower bound \(e^{\frac{{ - 1}}{k}\mathop {\sum}\nolimits_{i,j} 2|w_{ij}|}\), and if k is set to \(O\left( {\mathop {\sum}\nolimits_{i,j} |w_{ij}|} \right)\) this lower bound is constant (see Supplementary Note 3). If Ns is the number of successful samples needed to get the distribution, the complexity of one iteration is O(Nsmn), owing to the constant lower bound on the success probability and to the O(Ns) cost of processing the distribution. By comparison, the exact calculation of the distribution has complexity \(O(2^{m+n})\). If noise in the quantum computer is not considered, the only error comes from sampling.
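The lower bound on the success probability can be illustrated with a brief Python sketch. It computes, by exact enumeration, the probability that all ancilla checks succeed and compares it with exp(−(2/k) Σ|wij|); when k equals Σ|wij| the bound is e^{−2}, independent of the weights. The function names and the weight values are hypothetical.

```python
import itertools
import math


def success_probability(a, b, w, k):
    """Probability that one run passes every ancilla check, by exact enumeration."""
    n, m = len(a), len(b)
    prior_total = 0.0
    accepted_total = 0.0
    for y in itertools.product((-1, 1), repeat=n + m):
        s, h = y[:n], y[n:]
        prior = math.exp((sum(a[i] * s[i] for i in range(n))
                          + sum(b[j] * h[j] for j in range(m))) / k)
        # Each pair (i, j) is accepted with probability exp((w*s*h - |w|) / k).
        accept = math.exp(sum((w[i][j] * s[i] * h[j] - abs(w[i][j])) / k
                              for i in range(n) for j in range(m)))
        prior_total += prior
        accepted_total += prior * accept
    return accepted_total / prior_total


def lower_bound(w, k):
    """exp(-(2/k) * sum_ij |w_ij|), the bound quoted in the text."""
    return math.exp(-2.0 * sum(abs(v) for row in w for v in row) / k)


# Hypothetical parameters: two visible units, two hidden units.
a, b = [0.2, -0.4], [0.3, 0.1]
w = [[0.9, -1.1], [0.6, 0.8]]
k = sum(abs(v) for row in w for v in row)  # k set to the sum of |w_ij|

p_success = success_probability(a, b, w, k)
bound = lower_bound(w, k)
```

Each acceptance factor lies between exp(−2|wij|/k) and 1, so the success probability cannot fall below the product of the smallest factors, which is the quoted bound.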

Summary of numerical results

We now present the results derived from our RBM for the H2, LiH and H2O molecules. Fig. 4 shows that our three-layer RBM yields very accurate results compared with the exact diagonalization of the transformed Hamiltonian, which is calculated with a finite minimal basis set, STO-3G. Points deviating from the ideal curve are likely due to trapping in local minima during the optimization procedure. This can be avoided in the future by implementing optimization methods that include momentum or excitation, increasing the probability of escaping from any local features of the potential energy surface.

Fig. 4 Results of calculating the ground state energies of H2, LiH and H2O. a–c Results for H2 (n = 4, m = 8), LiH (n = 4, m = 8) and H2O (n = 6, m = 6) calculated by our three-layer RBM, compared with exact diagonalization results of the transformed Hamiltonian. d Result for LiH (n = 4, m = 8) calculated by the transfer learning method. We use STO-3G as the basis to compute the molecular integrals for the Hamiltonian. Bond length represents the interatomic distance for the diatomic molecules and the O-H distance of the optimized equilibrium structure of the water molecule. The RBM data points are the minimum energies of all energies calculated by sampling during the whole optimization.

Further discussion of our results should mention transfer learning. Transfer learning is a facet of neural network machine learning algorithms describing an instance (engineered or otherwise) where the solution to one problem can inform or assist in the solution to another, similar, subsequent problem. Given a diatomic Hamiltonian at a specific interatomic separation, the solution yielding the variational parameters (the weighting coefficients of the basis functions) is an adequate first approximation to those parameters in a subsequent calculation where the separation is a small perturbation of the previous value.

For every point in Fig. 4d except the last, the calculation is initialized with parameters transferred from the previous point and uses only 1/40 of the iterations used for the last point, yet still achieves a good result. We also see that local minima are avoided if the starting point has reached the global minimum.

Discussion

In conclusion, we present a combined quantum machine learning approach to perform electronic structure calculations. Here, we give a proof of concept and show results for small molecular systems. Screening molecules to accelerate the discovery of new materials for specific applications is demanding because the chemical space is very large. For example, it was reported that the total number of possible small organic molecules that populate the ‘chemical space’ exceeds \(10^{60}\) (refs. 36,37). Such an enormous size makes a thorough exploration of chemical space using traditional electronic structure methods impossible. Moreover, a recent perspective38 in Nature Reviews Materials pointed out the potential of machine learning algorithms to accelerate the discovery of materials. Machine learning algorithms have been used for material screening. For example, the GDB-17 database consists of about 166 billion molecular graphs of organic and drug-like molecules with up to 17 atoms; of these, the 134 thousand smallest molecules, with up to 9 heavy atoms, were calculated using a hybrid density functional (B3LYP/6-31G(2df,p)). Machine learning algorithms trained on these data were found to predict molecular properties of subsets of these molecules38,39,40.

In the current simulation, H2 requires 13 qubits: n = 4 visible units, m = 8 hidden units, and one additional reusable ancilla qubit. LiH requires 13 qubits: n = 4 visible units, m = 8 hidden units, and one additional reusable ancilla qubit. H2O requires 13 qubits: n = 6 visible units, m = 6 hidden units, and one additional reusable ancilla qubit. The number of qubits for the system scales as O(m + n) when ancilla qubits are reused. The number of visible units n is equal to the number of spin orbitals. The number of hidden units m is normally chosen as an integer multiple of n, which gives a scaling of O(n) when ancilla qubits are reused. Thus, the number of qubits increases polynomially with the number of spin orbitals. The gate complexity, \(O(n^2)\), also scales polynomially with the number of spin orbitals, while classical machine learning approaches that calculate the exact Gibbs distribution scale exponentially. With the rapid development of larger-scale quantum computers and the possible training of some machine units with the simple dimensional scaling results for electronic structure, quantum machine learning techniques are set to become powerful tools to perform electronic structure calculations and assist in designing new materials for specific applications.

Methods

Preparation of the Hamiltonian of H2, LiH and H2O

We treat the H2 molecule with 2 electrons in the minimal basis STO-3G and use the Jordan-Wigner transformation34. The final Hamiltonian acts on 4 qubits. We treat the LiH molecule with 4 electrons in the minimal basis STO-3G and use the Jordan-Wigner transformation34. We assume that the two lowest orbitals are occupied by electrons, and the final Hamiltonian acts on 4 qubits. We treat the H2O molecule with 10 electrons in the minimal basis STO-3G and use the Jordan-Wigner transformation34. We assume that the four lowest-energy orbitals are always occupied by electrons and that the two highest-energy orbitals are never occupied. We also use the spin symmetry of refs. 41,42 to remove another two qubits. With this reduction in the number of qubits, we finally obtain a 6-qubit Hamiltonian35,43. All calculations of integrals in second quantization and all transformations of the electronic structure Hamiltonian are done with OpenFermion44 and Psi445.

Gradient estimation

The two functions ϕ(x) and s(x) are both real functions. Thus, the gradient for parameter pk can be estimated as \(2\left( {\left\langle {E_{loc}D_{p_k}} \right\rangle - \left\langle {E_{loc}} \right\rangle \left\langle {D_{p_k}} \right\rangle } \right)\), where \(E_{loc}(x) = \frac{{\langle x|H|\phi \rangle }}{{\phi (x)s(x)}}\) is the so-called local energy and \(D_{p_k}(x) = \frac{{\partial _{p_k}(\phi (x)s(x))}}{{\phi (x)s(x)}}\). 〈...〉 represents the expectation value over the joint distribution determined by ϕ(x) and s(x) (details in Supplementary Note 1).
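The gradient formula can be checked on a tiny example with the Python sketch below. It assumes one visible and one hidden unit, so the hidden unit can be summed analytically and the unnormalized amplitude is ϕ(σ) = √(e^{aσ} · 2cosh(b + wσ)); normalization constants drop out of the covariance form. Expectation values are evaluated exactly rather than by sampling, and the result is compared with a finite-difference derivative of the energy. The function names, the 2×2 Hamiltonian and the parameter values are hypothetical.

```python
import math

SIGMAS = (-1, 1)  # basis order: sigma = -1 <-> |0>, sigma = +1 <-> |1>


def psi(p, sigma):
    """Signed amplitude phi(sigma) * s(sigma) for parameters p = [a, b, w, d, c]."""
    a, b, w, d, c = p
    phi = math.sqrt(math.exp(a * sigma) * 2.0 * math.cosh(b + w * sigma))
    return phi * math.tanh(d * sigma + c)


def log_derivs(p, sigma):
    """D_p(sigma) = (d psi / d p) / psi for each of the five parameters."""
    a, b, w, d, c = p
    th = math.tanh(b + w * sigma)
    t = math.tanh(d * sigma + c)
    ds = (1.0 - t * t) / t  # derivative of tanh divided by tanh
    return [0.5 * sigma, 0.5 * th, 0.5 * sigma * th, sigma * ds, ds]


def energy(p, H):
    """<H> = sum psi H psi / sum psi^2 for real amplitudes."""
    amp = [psi(p, s) for s in SIGMAS]
    num = sum(amp[i] * H[i][j] * amp[j] for i in range(2) for j in range(2))
    return num / sum(v * v for v in amp)


def gradient(p, H):
    """2 * (<E_loc D> - <E_loc><D>) with exact expectation values over |psi|^2."""
    amp = [psi(p, s) for s in SIGMAS]
    norm = sum(v * v for v in amp)
    prob = [v * v / norm for v in amp]
    e_loc = [sum(H[i][j] * amp[j] for j in range(2)) / amp[i] for i in range(2)]
    mean_e = sum(pr * e for pr, e in zip(prob, e_loc))
    grads = []
    for idx in range(len(p)):
        D = [log_derivs(p, s)[idx] for s in SIGMAS]
        mean_d = sum(pr * dv for pr, dv in zip(prob, D))
        mean_ed = sum(pr * e * dv for pr, e, dv in zip(prob, e_loc, D))
        grads.append(2.0 * (mean_ed - mean_e * mean_d))
    return grads


def finite_difference(p, H, idx, eps=1e-6):
    """Central finite-difference derivative of the energy, used only as a check."""
    hi, lo = list(p), list(p)
    hi[idx] += eps
    lo[idx] -= eps
    return (energy(hi, H) - energy(lo, H)) / (2.0 * eps)


H = [[0.3, 0.7], [0.7, -0.4]]     # hypothetical real symmetric Hamiltonian
p = [0.1, -0.2, 0.3, 0.8, 0.3]    # hypothetical parameters a, b, w, d, c
analytic = gradient(p, H)
numeric = [finite_difference(p, H, i) for i in range(len(p))]
```

In a sampling-based run the exact averages would be replaced by averages over configurations drawn from the joint distribution, which introduces statistical error that this check does not cover.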

Implementation details

In our simulation we choose a small constant learning rate of 0.01 to avoid trapping in local minima. All parameters are initialized as random numbers in the range (−0.02, 0.02). This initial range is chosen to avoid vanishing gradients of tanh. Each calculation needs only one ancilla qubit, which is reused throughout. Thus, in the simulation, the number of required qubits is m + n + 1. None of the calculations consider noise or system error (details in Supplementary Note 5).
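The settings above can be collected into a short Python sketch. The uniform distribution for the initial values, the dictionary layout and the function names are assumptions made for illustration; the text only specifies the range, the learning rate and the qubit count.

```python
import random

LEARNING_RATE = 0.01  # constant learning rate quoted in the text
INIT_RANGE = 0.02     # parameters drawn from (-0.02, 0.02); uniform is an assumption


def init_parameters(n, m, rng):
    """Draw all RBM and sign-layer parameters at random from the initial range."""
    def draw():
        return rng.uniform(-INIT_RANGE, INIT_RANGE)

    return {
        "a": [draw() for _ in range(n)],                       # visible weights
        "b": [draw() for _ in range(m)],                       # hidden weights
        "w": [[draw() for _ in range(m)] for _ in range(n)],   # couplings
        "d": [draw() for _ in range(n)],                       # sign-layer weights
        "c": draw(),                                           # sign-layer offset
    }


def descent_step(values, grads, lr=LEARNING_RATE):
    """One plain gradient descent update: p <- p - lr * grad."""
    return [v - lr * g for v, g in zip(values, grads)]


def required_qubits(n, m):
    """n visible + m hidden qubits plus one reusable ancilla."""
    return n + m + 1


params = init_parameters(n=4, m=8, rng=random.Random(0))
updated = descent_step([1.0, -1.0], [2.0, -4.0])
```

For the unit counts reported in the text, `required_qubits(4, 8)` and `required_qubits(6, 6)` both give 13.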

Data availability

The data and codes that support the findings of this study are available from the corresponding author upon reasonable request.

References

Hinton, G. E. & Salakhutdinov, R. R. Reducing the dimensionality of data with neural networks. Science 313, 504–507 (2006).

LeCun, Y., Bengio, Y. & Hinton, G. Deep learning. Nature 521, 436 (2015).

Häse, F., Valleau, S., Pyzer-Knapp, E. & Aspuru-Guzik, A. Machine learning exciton dynamics. Chem. Sci. 7, 5139–5147 (2016).

Häse, F., Kreisbeck, C. & Aspuru-Guzik, A. Machine learning for quantum dynamics: deep learning of excitation energy transfer properties. Chem. Sci. 8, 8419–8426 (2017).

Montavon, G. et al. Machine learning of molecular electronic properties in chemical compound space. New J. Phys. 15, 095003 (2013).

Ulissi, Z. W., Medford, A. J., Bligaard, T. & Nørskov, J. K. To address surface reaction network complexity using scaling relations machine learning and DFT calculations. Nat. Commun. 8, 14621 (2017).

Brockherde, F. et al. Bypassing the Kohn-Sham equations with machine learning. Nat. Commun. 8, 872 (2017).

Wang, L. Discovering phase transitions with unsupervised learning. Phys. Rev. B 94, 195105 (2016).

Carrasquilla, J. & Melko, R. G. Machine learning phases of matter. Nat. Phys. 13, 431 (2017).

Broecker, P., Carrasquilla, J., Melko, R. G. & Trebst, S. Machine learning quantum phases of matter beyond the fermion sign problem. Sci. Rep. 7, 8823 (2017).

Ch’ng, K., Carrasquilla, J., Melko, R. G. & Khatami, E. Machine learning phases of strongly correlated fermions. Phys. Rev. X 7, 031038 (2017).

Van Nieuwenburg, E. P., Liu, Y.-H. & Huber, S. D. Learning phase transitions by confusion. Nat. Phys. 13, 435 (2017).

Arsenault, L.-F., Lopez-Bezanilla, A., von Lilienfeld, O. A. & Millis, A. J. Machine learning for many-body physics: the case of the Anderson impurity model. Phys. Rev. B 90, 155136 (2014).

Kusne, A. G. et al. On-the-fly machine-learning for high-throughput experiments: search for rare-earth-free permanent magnets. Sci. Rep. 4, 6367 (2014).

De Luna, P., Wei, J., Bengio, Y., Aspuru-Guzik, A. & Sargent, E. Use machine learning to find energy materials. Nature 552, 23–25 (2017).

Wei, J. N., Duvenaud, D. & Aspuru-Guzik, A. Neural networks for the prediction of organic chemistry reactions. ACS Cent. Sci. 2, 725–732 (2016).

Biamonte, J. et al. Quantum machine learning. Nature 549, 195 (2017).

Lloyd, S., Mohseni, M. & Rebentrost, P. Quantum algorithms for supervised and unsupervised machine learning. Preprint at https://arxiv.org/abs/1307.0411v2 (2013).

Rebentrost, P., Mohseni, M. & Lloyd, S. Quantum support vector machine for big data classification. Phys. Rev. Lett. 113, 130503 (2014).

Neven, H., Rose, G. & Macready, W. G. Image recognition with an adiabatic quantum computer i. mapping to quadratic unconstrained binary optimization. Preprint at https://arxiv.org/abs/0804.4457 (2008).

Neven, H., Denchev, V. S., Rose, G. & Macready, W. G. Training a binary classifier with the quantum adiabatic algorithm. Preprint at https://arxiv.org/abs/0811.0416 (2008).

Neven, H., Denchev, V. S., Rose, G. & Macready, W. G. Training a large scale classifier with the quantum adiabatic algorithm. Preprint at https://arxiv.org/abs/0912.0779 (2009).

Wiebe, N., Kapoor, A. & Svore, K. M. Quantum deep learning. Quantum Inf. Comput. 16, 541–587 (2016).

Carleo, G. & Troyer, M. Solving the quantum many-body problem with artificial neural networks. Science 355, 602–606 (2017).

Torlai, G. et al. Neural-network quantum state tomography. Nat. Phys. 14, 447 (2018).

Kais, S. Introduction to quantum information and computation for chemistry. Quantum Inf. Comput. Chem. 154, 1–38 (2014).

Daskin, A. & Kais, S. Direct application of the phase estimation algorithm to find the eigenvalues of the Hamiltonians. Chem. Phys. In press (2018).

Aspuru-Guzik, A., Dutoi, A. D., Love, P. J. & Head-Gordon, M. Simulated quantum computation of molecular energies. Science 309, 1704–1707 (2005).

O’Malley, P. et al. Scalable quantum simulation of molecular energies. Phys. Rev. X 6, 031007 (2016).

Kassal, I., Whitfield, J. D., Perdomo-Ortiz, A., Yung, M.-H. & Aspuru-Guzik, A. Simulating chemistry using quantum computers. Annu. Rev. Phys. Chem. 62, 185–207 (2011).

Babbush, R., Love, P. J. & Aspuru-Guzik, A. Adiabatic quantum simulation of quantum chemistry. Sci. Rep. 4, 6603 (2014).

Kivlichan, I. D. et al. Quantum simulation of electronic structure with linear depth and connectivity. Phys. Rev. Lett. 120, 110501 (2018).

Lanyon, B. P. et al. Towards quantum chemistry on a quantum computer. Nat. Chem. 2, 106 (2010).

Fradkin, E. Jordan-Wigner transformation for quantum-spin systems in two dimensions and fractional statistics. Phys. Rev. Lett. 63, 322 (1989).

Xia, R., Bian, T. & Kais, S. Electronic structure calculations and the Ising Hamiltonian. J. Phys. Chem. B 122, 3384–3395 (2017).

Dobson, C. M. Chemical space and biology. Nature 432, 824 (2004).

Blum, L. C. & Reymond, J.-L. 970 million druglike small molecules for virtual screening in the chemical universe database GDB-13. J. Am. Chem. Soc. 131, 8732–8733 (2009).

Tabor, D. P. et al. Accelerating the discovery of materials for clean energy in the era of smart automation. Nat. Rev. Mater. 3, 5–20 (2018).

von Lilienfeld, O. A. Quantum machine learning in chemical compound space. Angew. Chem. Int. Ed. 57, 4164–4169 (2018).

Ramakrishnan, R., Dral, P. O., Rupp, M. & Von Lilienfeld, O. A. Quantum chemistry structures and properties of 134 kilo molecules. Sci. Data 1, 140022 (2014).

Kandala, A. et al. Hardware-efficient variational quantum eigensolver for small molecules and quantum magnets. Nature 549, 242 (2017).

Bravyi, S., Gambetta, J. M., Mezzacapo, A. & Temme, K. Tapering off qubits to simulate fermionic Hamiltonians. Preprint at https://arxiv.org/abs/1701.08213 (2017).

Bian, T., Murphy, D., Xia, R., Daskin, A. & Kais, S. Comparison study of quantum computing methods for simulating the Hamiltonian of the water molecule. Preprint at https://arxiv.org/abs/1804.05453 (2018).

McClean, J. R. et al. OpenFermion: the electronic structure package for quantum computers. Preprint at https://arxiv.org/abs/1710.07629 (2017).

Parrish, R. M. et al. Psi4 1.1: an open-source electronic structure program emphasizing automation, advanced libraries, and interoperability. J. Chem. Theory Comput. 13, 3185–3197 (2017).

Acknowledgements

We would like to thank Dr. Ross Hoehn, Dr. Zixuan Hu, and Teng Bian for critical reading and useful discussions. S.K. and R.X. are grateful for the support from Integrated Data Science Initiative Grants, Purdue University.

Author information

Contributions

S.K. designed the research. R.X. performed the calculations. Both discussed the results and wrote the paper.

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher's note: Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons license, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons license and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this license, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Xia, R., Kais, S. Quantum machine learning for electronic structure calculations. Nat Commun 9, 4195 (2018). https://doi.org/10.1038/s41467-018-06598-z

DOI: https://doi.org/10.1038/s41467-018-06598-z
