Putting Autonomous Robotics to Work: Interview with Josh Meler at Hangar Technology

Hangar, headquartered in Austin, TX, is the world’s first 4D visual data acquisition platform, existing to serve and nurture an ecosystem of mobile robotics software, hardware, services and data for large enterprises and infrastructure owners. We spoke with Josh Meler, Senior Director of Marketing at Hangar Technology, about drones, business and Robotics-as-a-System.

Hi Josh, thanks for taking the time to talk to us. First of all, can you share some background on the early vision behind Hangar Technology?

The vision behind Hangar came from CEO Jeff DeCoux, who didn’t see a drone the way you and I might have first recognized them. Where most saw a hobbyist toy, he saw something closer to a smartphone with propellers – an evolution to satellite technology and 2D visual insights. To Jeff, drones are a mobile sensor platform, capable of acting as a vehicle to collect a new type of 4D visual insights about the physical world. The space between 10ft and 400ft is largely void. Void of people, cars, millions of other moving obstacles, and most importantly – void of insight.

We’re not trying to be another Drone Service Provider (DSP), or make some incremental improvement on the technology that already exists. We’re transforming the drones into autonomous robotics. We’re leaning into the innovation emerging out of the drone space, pairing like-minded systems and creating an automated visual insight supply chain. Our vision is to create a new category of offerings that elevates an autonomous world where people work hand-in-hand with robotics. Today, we’re giving whole industries visibility and spatial awareness that previously never existed.

In a nutshell, what’s Hangar all about?

Hangar is putting autonomous robotics to work. We’re defining a new category where businesses can task autonomous robotics, like drones, to collect a variety of visual insights at any desired frequency or scale.

We’re addressing a massive unsolved problem shared across just about every industry – a lack of visual perception. We’re blind to so much about the world around us. Every day, cracks form on bridges, mistakes go uncorrected on construction sites, and high winds damage cell towers. We don’t know what occurred last week, what’s happening today, and we have no way to predict what will happen in the future. We’re driving industrial economic transformation through visual awareness. We call this new spatial perception “4D Visual InSight.”

You are based in Texas. Was there a reason behind this choice of location? Are there any advantages?

Austin is at the intersection of innovation, startups, software and technology. Dell, Samsung, Apple, Google, and many more technology giants have a home here. The University of Texas shares the Texas capital with us in Austin. The Radionavigation Laboratory, which emphasizes location, collision avoidance and timing perception as they relate to the rise of autonomous vehicles and the smart grid, is based right here in Austin. DJI even opened their first US offices here in Austin, Texas. The list goes on.

What is 4D Data and Insight?

When a drone, or more accurately in context, when an autonomous robot can repeatedly fly the same path in the 3D world with absolute precision and accuracy, it ceases to provide unstructured data… or more simply put, photography. This is a game-changer. What emerges is a completely new class of spatial data, solving a bad data dilemma plaguing the industry since DSPs began offering photography to customers. When you can repeatedly and autonomously navigate the physical world, imagery becomes data, and data can then become insight.

This new class of data and insight has four dimensions. Drones autonomously capture precision data in the physical XYZ space, and then integrate the fourth dimension of time. We call this 4D data and insight.

What’s the role of this new ‘class of data’ and how can it transform specific industries?

Before 4D data, the only spatial data available with any repeatability or scale was low-resolution, difficult-to-acquire 2D satellite imagery. For industries like construction, infrastructure or energy, this data offers very little in terms of providing a real-time, multidimensional perspective of an environment.

4D data and insights fundamentally alter industrial economics by providing an absolute contextual representation of the physical condition of an environment at any given time. It also opens the door to computer analysis to reveal changes, patterns and trends, and for the application of machine learning and artificial intelligence in real-world settings.

Can you explain Robotics-as-a-System™?

Robotics-as-a-System (RaaS) is a way of explaining something that re-frames the current drone conversation. To unlock a never-before-seen category of visual awareness, it’s not just a matter of rethinking drones as autonomous robotics; we also require an underlying cloud-like system of systems to automate the end-to-end 4D insight supply chain.

Hangar RaaS is the world’s first partner-integrated, internet-scale platform designed to put robotics to work by automating a modular end-to-end data delivery process. RaaS is a system of many systems, linking technology partners that encompass hardware manufacturers, sensor platforms, drones-as-a-service providers, data transformation innovators, and analytics software firms. RaaS represents a movement towards scalable autonomicity, enabling the rise of autonomous robotics and spatial data at a rate not seen since the satellite.

We all know SaaS, but what’s RaaS?

The terms “as-a-service” and “cloud computing” have each in their own right assumed fairly narrow meanings. To enable scalable industry transformation, the robotics technology ecosystem requires a “System” of systems, services, hardware, and software that spans and interconnects service providers, cloud and edge technologies, autonomous mobile robotics, big data, analytics and machine learning.

Hangar RaaS extends a cloud-like technology into the physical world, incorporating people, robotics and the physical world as components. Rather than providing a rentable suite of computing services like storage, processing power or software, Hangar RaaS provides “rentable” access to networks of certified pilots, autonomous mission planning, specification-ready drone hardware, and the fundamental delivery of meaningful precision-grade 4D data. The ultimate goal of this “system” is to help industry understand and measure their worlds. Businesses generally don’t want to own a complex drone infrastructure, or necessarily know all the details about how their end data is acquired. They prefer a seamless ability to get data on demand, they want to pay for what they use, and they want simplicity that doesn’t add additional burden to their business model.

What can drones bring to a business?

Drones in and of themselves introduce new costs, complexity, regulations, certifications, specialized knowledge, skill sets, and many other hassles to business operations. Software or programs designed to help businesses streamline the introduction of drones as another tool of the respective trade only serve to impede the adoption of the very thing drones enable – rapid, actionable 4D insight.

When drones act as autonomous agents that businesses and industries can task to gain real-world intelligence, drones bring industrial economic transformation. Instead of sensors covering objects, drones become moving sensors that businesses can task to collect insights.

What are the challenges faced by a business when adopting drone tech and data into their project for the first time, and how does Hangar help with that process?

If I want insights that drones provide, does that mean I also want a drone department? That doesn’t make much sense, but that is often the decision and challenge facing businesses who see the value in drone insights.

This is the drone industry mold that Hangar is breaking, and the big way Hangar helps the process. With RaaS as a foundation, Hangar simplifies and automates the entire ecosystem and process down to a few simple commands. A business simply requests a location, sets a frequency and data type, and then insights begin appearing 48 hours later. Autonomous flight makes data repeatable by any operator – whether that’s an in-house drone expert or an outsourced professional pilot. We’re making the drone tech invisible, and giving businesses data and insights they can act on.

How do you actually help integrate aerial data into a company’s workflow?

We level the playing field, and remove the complexity involved in acquiring 4D insights. Similar to how Amazon brought cloud services to enterprises and college students alike, we’re giving businesses of all sizes and types access to “pay by the sip” robotics services and visual insights.

Depending on industry and the type of project, we pair businesses with recommended data types and insights that add value throughout each stage of the project. In the case of a company that would like to integrate 4D insight into their current workflow, RaaS encompasses many modular components that enable the end-to-end delivery of 4D visual insights. If an enterprise requires a preferred pilot network, an atypical sensor, specific data transforms or even a third-party viewer, we can integrate components into the supply chain that RaaS automates. Most commonly, however, we see an industry like construction looking to integrate 4D insights into project management software like Procore. In this case, the integration is rather simple and straightforward. As Project Managers document projects within Procore, we provide users with the added ability to access and reference 4D visual insights in their observations and communications.

On your website there’s a great line …. ‘Syncing brains in the office to brains in the field’. Can you share a real world example of this?

Yes. This was in reference to the construction industry, and the JobSight viewer. When we brought visual awareness to construction, it was important to give businesses horizontal access to 4D visual insights, from the subcontractor all the way to the executive.

A great real-world example of this is an actual issue that was identified by a client during a project inspection. A two-inch depression was observed in a concrete footing, bringing quality and structural integrity into question. The issue was immediately highlighted within the JobSight viewer, enabling the Superintendent to explore the issue before arriving on-site. Typically, an issue like this would have required costly inspections, delays and unnecessary rework. Instead, within minutes, it was obvious that earth was removed and never replaced and compacted. The Superintendent shared the JobSight insights, providing the VDC team and the trade responsible with a visual history of the problem area and a snapshot of the issue source – all the same day the issue was observed. The trade team took responsibility and was able to excavate a small area and quickly resolve the issue. Ownership and Finance had clear visibility of the issue, as well as visual evidence that could easily be referenced in the case of litigation.

How long before there’s a drone on every U.S. job site, and how are we moving towards this in 2018?

To answer this, we need to first re-frame what it means to have a drone on every job site. If you’re asking how long it is until every construction business adopts a “drone program,” I don’t think this will ever happen. When handheld drills started showing up on job sites, we didn’t hear Black + Decker say, “launch your drill program!” Why not? Drills are just enablers. They allow workers to do what they were already doing — except better, faster and more efficiently. The breakthrough had little to do with the actual tool itself, and more the new ability to enable faster holes. Drones are no different.

We need to stop putting drones on construction sites, and start giving the industry the very thing that drones enable: insight. Drones will be on every job site in the next few years, but not as another tool on the tool belt. The project manager isn’t adopting a drone program. They’re adopting a visual insights program that captures a new, historical perspective across their sites. They’re providing situational awareness holistically throughout their organization. They’re making decisions based on the actual state of projects, and the insights afforded by new perspectives and sensors.

To move forward in 2018, many DSP players must first move past “putting a drone on every construction site,” to enabling the industry with visual insights. Drones will occupy the airspace of job sites, but they won’t be another shovel, hammer or drill. Drones will become an invisible technology, and the value proposition will shift instead to visual insights on every job site.

In your opinion, how does the use of drones and drone data in business within Europe compare to the U.S.?

The value of drone data, better rephrased as visual insights, is ubiquitous. The problems visual insights solve don’t change based on country – but you are correct to point out that as rules and regulations change by country, so too do the economics.

Collectively, the U.S. is more receptive to drones occupying today’s airspace than Europe in general. In short, this is because the European Aviation Safety Agency (EASA) is made up of 28 separate countries, with 28 dissimilar legislatures. In contrast, the FAA has a clear, strong mandate over all US airspace. This means Europe, in comparison to the US, will likely incorporate drones at a slower, more conventional rate.

There’s a lot of talk about ‘smart cities’ these days. Can you share a vision of the Kinetic Edge city of the future? How do drones fit in?

The Kinetic Edge is the project name for a forward-looking collaboration that was originally established between Hangar and Vapor IO, in an effort to lay the groundwork for a necessary infrastructure to help propel smart cities. The Kinetic Edge provides an open platform for all types of autonomous robotics and data processing at the edge, nationwide. As autonomous robotics go to work, they will depend on low-latency services at the edge, including precision navigation, connectivity, situational and airspace awareness, storage, recharging, and high-speed data ingest to assure safe and secure deployment.

Hangar’s RaaS will use the Kinetic Edge to plan and execute autonomous drone missions, producing 4D Visual Insights at the edge. This will lay the groundwork for completely autonomous robotic missions, paving the way to deliver critical infrastructure for all types of autonomous vehicles, including cars, trucks or taxis.

The first Kinetic Edge deployment is underway in Chicago.

Moving on, what does your partnership with ClimaCell provide the client?

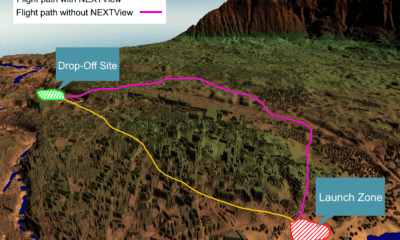

This partnership is under the Kinetic Edge umbrella, representing a fundamental piece of technology that must exist to enable autonomous vehicles. Hangar RaaS, running on Vapor IO’s Kinetic Edge, will utilize ClimaCell’s Micro Weather API to establish an infrastructure that enables micro weather intelligence. As autonomous robotics navigate tight spaces, especially aerial drones in outdoor settings, they’ll need near real-time awareness of the micro-weather patterns around them. ClimaCell delivers ground-level micro weather information on a minute-by-minute basis, giving robotics awareness of hidden weather systems and ensuring safe and efficient flight.

You also announced a partnership with Humatics Corporation, can you tell us more about what that brings to the table?

This is another partnership tied to the Kinetic Edge, again representing a fundamental technology that must be present in smart city infrastructure. Humatics provides autonomous vehicles with unprecedented centimeter- and millimeter-scale positioning, unlocking the possibility of real-time, scalable indoor and outdoor navigation for autonomous drones, cars and robotics in complex urban environments. The Humatics Spatial Intelligence Platform™ is comprised of its centimeter- and millimeter-scale microlocation systems and analytics software, capable of capturing and calculating precise 3D positions. This data stream allows for accurate, rapid drone positioning and navigation in any environment.

How do you bring GIS & drones together?

The world is full of billions of data points. Rather than continuing to add sensors to an always expanding number of stationary objects, for the first time ever, drones unlock the possibilities of mobile sensors. Hangar has the unique ability to pair visual data points with an XYZ coordinate and a time value. The accurate visual repository of ever-changing 4D environments we enable naturally complements largely 2D GIS data and geospatial information. We’ve partnered with Esri to explore how we can bring visual intelligence to the GIS community at scale.

Human intelligence, augmented intelligence and pure computer-driven intelligence: can you paint that picture for us?

I’d classify these intelligence types as evolutionary stages of 4D visual insights. Today, we require “human intelligence” to derive insights from the visual data we provide. As we open the 4D Data spigot to technologies that identify patterns and detect changes, we’ll see a new era of “augmented intelligence” where computers cue humans to relevant events or pertinent actions. And finally, “pure computer-driven intelligence” is the much-hyped world of tomorrow where autonomous robotics collect, understand and react to 4D visual insights in real-time, without the interference of humans.

Do you think we are on the cusp of another industrial revolution?

Absolutely. The big question today centers around why digitization isn’t stimulating productivity growth. Fortunately, history is a good indicator of what is to come. In the 1980s, productivity waned, despite investments into the computer revolution. Worker productivity declined, even as increasing investments were made into semiconductors. This counter-intuitive result became known as the Solow Paradox. Then in the 1990s, US productivity trends reversed, ushering in a new wave of innovation that exponentially boosted semiconductor power in relation to cost.

When we talk about autonomous robotics and 4D visual insights, as they relate to fundamentally altering industry economics, I think we’re seeing the Solow Paradox all over again. We are on the cusp of an industrial revolution, and we will see productivity rates explode as industries catch up with the opportunities afforded by visual insight and autonomous robotics services.

What other companies impress you in this space?

There’s a long list of companies who are doing incredible things with drone data and drone insights. Today, we’re very interested in technologies that extend visual insights across a multitude of dimensions. Autonomous robotics need to venture beyond line of sight. 4D data must extend indoors. 4D visual insights must be derived from machine learning and artificial intelligence. No one company can do it all. We’re impressed with companies that understand the bigger picture, and are working to incorporate innovations like edge computing, precision navigation or micro-climate dynamics into RaaS and autonomous robotic services.

Finally, Josh, anything else you’d like to add?

I believe we’re on the verge of a new category of autonomous robotics services, where businesses task autonomous robotics, including but not limited to drones, to perform any number of functions. The first application of Hangar technology uses autonomous drones to mirror this model, unlocking a previously unattainable volume and veracity of structured spatial data. This fundamentally alters industrial economics with transformative visual perception, and unleashes machine learning, AI and other data sciences in the context of the world around us.

About Josh Meler – Senior Director of Marketing at Hangar Technology

It’s no coincidence that my career is about as old as the first generation iPhone. I’ve seen firsthand a monumental shift in how the world works. For 500 years, the movement of ideas and data was dependent solely on people and physical mediums. Then, in the most disruptive fraction of years in our humanity, that model was violently turned upside down, giving rise to the age of digitalization, connectivity, mobility, and now – autonomicity.

I’m obsessed with this idea, and have made it my career’s ambition to be a first-mover, shaping how these emerging technologies transform the global landscape.

Prior to working at Hangar, Josh Meler was Chief Marketing Officer at an Austin, TX startup that helped create a new category of PaaS offerings for mobile apps.