The simplest definition of the internet is as the interconnection of a vast number of computers. This interconnection is based on all kinds of devices: cables, routers, dedicated computers, referred to as servers, or large numbers of these together as data centres. The internet becomes interactive through computer languages, the most common being HTML, created in 1992. Indeed, it was as a combination of machines and languages that the internet was invented in the late 1980s and early ’90s.

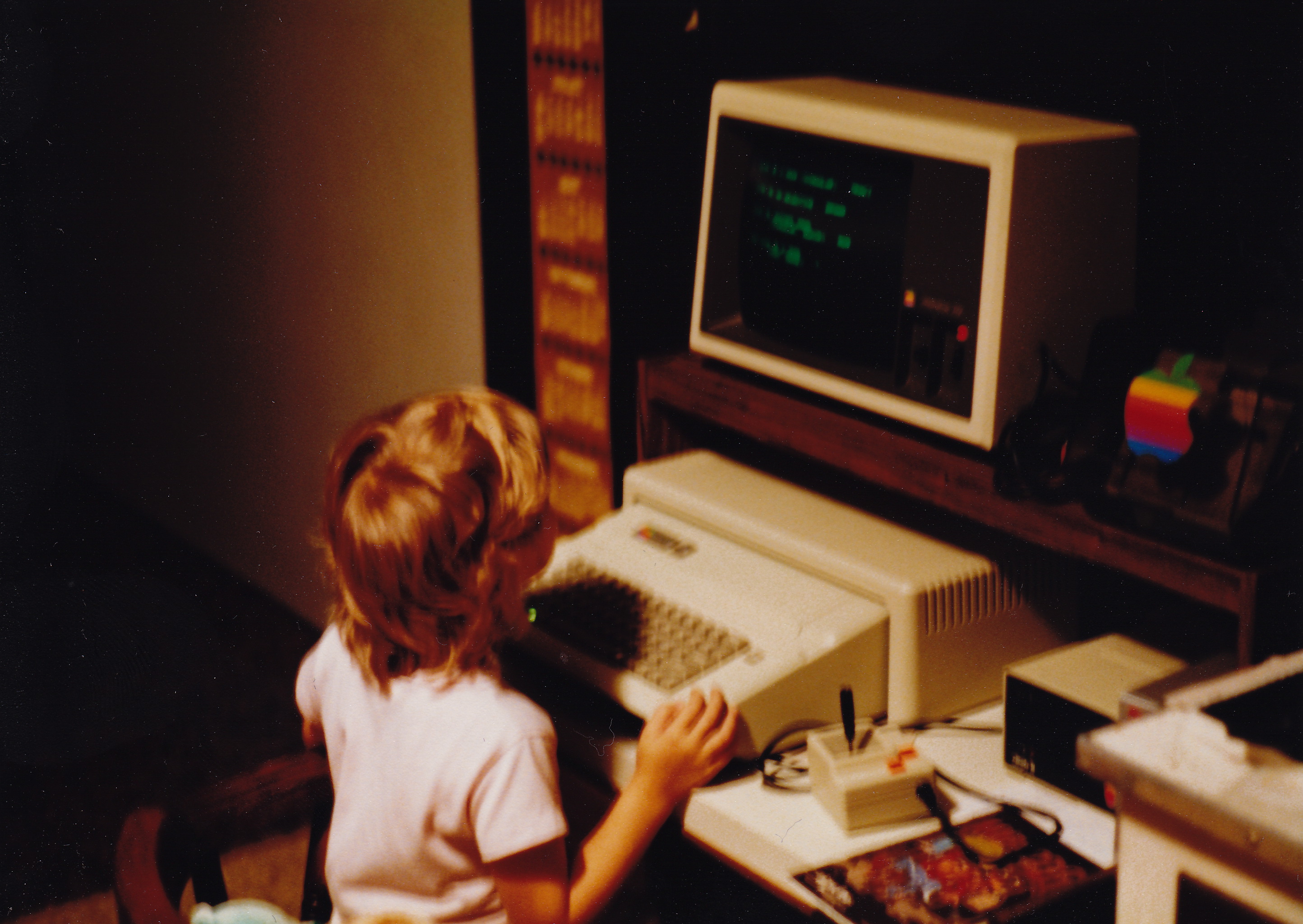

Without going into detail, it is instructive to look into the cultural contexts out of which the internet developed. The first was the US military, which was seeking a telecommunications system so decentralized that it would continue to function even in the event of Soviet nuclear strikes. The second was the scientific community, which instilled its own values into the system: cooperation, autonomy, consensus and non-commerciality. The third context was a disparate group of American entrepreneurs and technicians influenced by the post-hippie counter-culture of the West Coast. Radically individualistic and libertarian, this group was attracted by technology as a means of liberation on the margins of society. In the 1980s, the digital world was already marked by a tension between proprietary information technology – IBM was the first company to make a profit from this kind of technology with its PCs – and freeware and the licences that prevented it from being privatized. The latter were to open the way to the creative commons, an alternative to the usual forms of intellectual property that were held to be over-restrictive.

This composite genealogy was to provide the structure for the subsequent development of the internet between the poles of openness and control, liberty and domination, free-to-use and free enterprise. This brief chronology will illustrate how the internet has a dynamic history of power relationships that continues to characterize how we interact with digital technology. Without yielding to promises of commercial advantage or dystopian predictions about artificial intelligence and transhumanism, it will attempt to link three levels of development: technique, use and representation.

The dot.com economy (1992–2000)

The first half of the 1990s was dominated by researchers, technicians and artists. People found their way as best they could, from one link to the next, following the advice of manuals and other portals that were compiled for free and were more or less specialized. People used email to exchange website addresses. During those years, the concept that arose was fundamentally ambiguous. Cyberspace could be seen as being libertarian or liberal. Discourse was based on terms such as dematerialisation, reinvention of self, adaptation to change, the obsolescence of traditional media and of the state itself.

This period is still seen as foundational in the evolution of the internet. It was, nevertheless, short-lived. Between 1997 and 2001, a speculative bubble formed around companies that had developed new services and activities since the liberalization of the dot.com domain names in 1995. This was the period that saw a restructuring of the internet. Some sites began to amass very large concentrations of traffic, in particular certain portals and the earliest search engines (e.g. Altavista). Online shopping became established with Amazon, AOL and eBay. These companies broke all records when it came to capital investment, as did a few others operating in software (Netscape), micro-technology (Intel), communications (Alcatel, Lucent, Cisco) and content production (Vivendi). It was at this time that telecoms markets in OECD countries were deregulated, while national public telephone monopolies were either privatized or given independent status. Access to capital enabled them, along with their competitors, to develop and democratize high-speed networks (ADSL and cable) and mobile phones.

A lot of people made a lot of money thanks to the internet, sometimes with very small initial investment, something that whetted the appetites of entrepreneurs and nurtured ambitions among actors in financial markets and capital investment funds. This was the first internet bubble, which finally burst in March 2000 when web start-ups were no longer able to meet the expectations of their capital investors and creditors.

Some of the new services were soon relying on a little invention known as a cookie. This is nothing more than a simple text file left discreetly on your computer by a website. When you revisit the same site, the cookie updates itself and passes details to the site about, for example, your online purchases. Whilst the basic idea is to authenticate access to your account and facilitate your browsing on the site, for example by automatically completing online forms, a cookie is a very powerful and not very transparent tool. Not only does it provide web companies with information about you (personal details, credit card number, passwords) and your browsing habits (time and place of log-in, hardware used, other pages visited), but it can also be left on your machine by a website you have never visited (but which embeds advertising within a site that you do visit). It is this technique that ensures you are sent targeted advertising.
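To make the mechanism concrete, here is a minimal sketch in Python of how a cookie is issued, read back, and used by an advertiser to link visits across sites. It relies only on the standard library's `http.cookies` module; the cookie name `visitor_id`, the 30-day lifetime, the function names and the example page addresses are all illustrative assumptions, not a description of any real company's system.

```python
from http.cookies import SimpleCookie


def issue_cookie(visitor_id: str) -> str:
    """Build the Set-Cookie header value a site might send on a first visit."""
    cookie = SimpleCookie()
    cookie["visitor_id"] = visitor_id                     # hypothetical cookie name
    cookie["visitor_id"]["path"] = "/"
    cookie["visitor_id"]["max-age"] = 60 * 60 * 24 * 30   # assumed 30-day lifetime
    return cookie["visitor_id"].OutputString()


def read_cookie(cookie_header: str) -> dict[str, str]:
    """Parse the Cookie header the browser sends back on every later request."""
    cookie = SimpleCookie()
    cookie.load(cookie_header)
    return {name: morsel.value for name, morsel in cookie.items()}


def record_ad_request(profiles: dict[str, list[str]],
                      cookie_header: str,
                      embedding_page: str) -> None:
    """What a hypothetical ad server could do each time one of its adverts loads:
    file the page that embedded the advert under the visitor's identifier."""
    visitor = read_cookie(cookie_header).get("visitor_id")
    if visitor is not None:
        profiles.setdefault(visitor, []).append(embedding_page)


# The same identifier comes back from two unrelated sites that embed the advert,
# so the ad server ends up with a browsing history for that visitor.
example_profiles: dict[str, list[str]] = {}
record_ad_request(example_profiles, "visitor_id=abc123", "news.example/politics")
record_ad_request(example_profiles, "visitor_id=abc123", "shop.example/shoes")
print(issue_cookie("abc123"))
print(example_profiles)
```

The point of the sketch is that nothing in it is hidden or sophisticated: a short identifier, sent back automatically by the browser, is enough for a third party to assemble a profile.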

These ‘third-party cookies’, as they are known, transform your favourite sites into nothing less than hijackers of your connection time. They monetize your attention, either by leasing access to your session to some third party or by selling data collected about you to specialist intermediaries – all without you even noticing. They transform your face-to-face session with your machine into a commodity, causing your actions to be anything but private or discreet. Now no longer in any sense disinterested, the internet was considered as a means of selling an audience to advertisers.

Disillusion (2000–2007)

The decade that followed saw three crucial developments: the supremacy of Google, the appearance of big data and the return of the state. From 2000 onwards, Google became the dominant search engine. This brought a paradigm change: previously, search engines had worked on the basis of popularity, using audience measurement techniques similar to those of the traditional press. With Google, the basis became authority. The criterion, like that of citations in scientific literature, is the number of links that point to a website. That is the key to one of the most well-known algorithms: Google’s ‘PageRank’, which calculates the order in which search results are shown. Similar criteria are also considered, especially in registering and handling the billions of search requests received. Google was soon to diversify its services to mapping, email, advertising, blogs, diaries, translation and audience measurement. Google, with many others following its example, began to process enormous quantities of user data in order to personalize its pages and to target advertising.
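The idea of ranking by authority can be sketched in a few lines of Python. What follows is the simplified textbook formulation of PageRank (repeated redistribution of scores along links, with a damping factor), not Google's production system; the damping value of 0.85, the number of iterations and the four-page example web are assumptions chosen for illustration.

```python
def pagerank(links: dict[str, list[str]],
             damping: float = 0.85,
             iterations: int = 100) -> dict[str, float]:
    """Score pages by the links pointing at them (simplified textbook PageRank).

    `links` maps each page to the pages it links to.
    """
    pages = sorted(set(links) | {t for targets in links.values() for t in targets})
    n = len(pages)
    rank = {page: 1.0 / n for page in pages}          # start with equal scores

    for _ in range(iterations):
        # Every page keeps a small baseline score regardless of its links.
        new_rank = {page: (1.0 - damping) / n for page in pages}
        for page in pages:
            targets = links.get(page, [])
            if targets:
                # A page passes its score on, split equally among its outgoing links.
                share = damping * rank[page] / len(targets)
                for target in targets:
                    new_rank[target] += share
            else:
                # A page with no outgoing links spreads its score over all pages.
                share = damping * rank[page] / n
                for other in pages:
                    new_rank[other] += share
        rank = new_rank
    return rank


# Hypothetical miniature web: three pages link to "c", and "c" links back to "a".
example_web = {"a": ["c"], "b": ["c"], "d": ["c"], "c": ["a"]}
scores = pagerank(example_web)
for page, score in sorted(scores.items(), key=lambda item: -item[1]):
    print(page, round(score, 3))
```

In this toy web, page "c" comes out on top because three pages point to it, and "a" comes second because its single incoming link is from the highest-ranked page: a link counts for more when it comes from a page that is itself well cited.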

Around 2002, this development encouraged – and was encouraged by – a spectacular increase in information storage capacities and the power of processors, and by a reduction in the cost of both. The time had come when everything could be stored and processed. Google improved the quality of data in two crucial respects: diversity and free access. Henceforth, ‘big data’ was to be central to the functioning of the internet, a discreet shift that only came to public attention later.

The further important development was the arrival of governments on the scene, in the wake of 9/11. The Patriot Act of October 2001 gave US governments judicial freedoms and considerable budgets to monitor communications, the extent of which only emerged ten years later. Here too, big data is a central issue. While internet use continued to be democratized, big data technologies remained discreet, to say the least.

Keep smiling (2007–)

By the beginning of the 2000s, a new form of highly decentralized network interaction had appeared. Napster and exchange applications allowed peer-to-peer multimedia file-sharing. The idea was to escape the clutches of the market, the state and copyright. This technique very soon began to destabilize cultural industries and was in many cases banned (e.g. the HADOPI act in France). Banning had negligible effect and paved the way to an online pirate economy. Simultaneously, it encouraged new economic actors to transform file-sharing systems into business models – again, a mix of utopianism and enterprise.

As all the world knows, this gave birth to the social networks, first and foremost Facebook. From 2007 onwards, Facebook spread like wildfire beyond the US. At this point, a new generation of algorithms appeared, this time generated by reputation. Whatever anyone pays attention to acquires value, and this value in turn allows attention to be drawn. It is all calculated, still invisibly, to generate the greatest possible number of actions (views, clicks, likes, etc.), thus enabling the maximum possible amount of data to be captured. The example par excellence is the recommendation algorithm of Amazon, where if you purchase such-and-such a work, you will be recommended three or four others. If you have already consulted some other page immediately before, the recommendations will be even more explicitly tailored.
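A recommendation of the "customers who bought this also bought" kind can be approximated with nothing more than counting. The Python sketch below is a deliberately naive illustration under that assumption; it is not Amazon's actual algorithm, and the baskets of book titles and the function names are invented for the example.

```python
from collections import Counter
from itertools import combinations


def build_co_purchases(baskets: list[list[str]]) -> dict[str, Counter]:
    """Count how often each pair of items appears in the same basket."""
    co_purchases: dict[str, Counter] = {}
    for basket in baskets:
        for first, second in combinations(sorted(set(basket)), 2):
            co_purchases.setdefault(first, Counter())[second] += 1
            co_purchases.setdefault(second, Counter())[first] += 1
    return co_purchases


def recommend(co_purchases: dict[str, Counter], item: str, k: int = 3) -> list[str]:
    """Return up to k items most often bought together with `item`."""
    return [other for other, _ in co_purchases.get(item, Counter()).most_common(k)]


# Invented purchase histories, one list per customer.
example_baskets = [
    ["1984", "Brave New World", "Fahrenheit 451"],
    ["1984", "Brave New World"],
    ["1984", "Fahrenheit 451", "Neuromancer"],
    ["1984", "Brave New World", "Neuromancer"],
]
table = build_co_purchases(example_baskets)
print(recommend(table, "1984", k=1))
```

Every additional purchase, view or click adds a row to such a table, which is why each interaction is worth capturing.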

Social networks were the spearhead of a much wider development: Web 2.0. Techniques for editing HTML pages and for communication between sites now enabled interaction with online content. This was a move from essentially one-way flows of information to an internet conceived and used as a real space for social interaction. And, once again, each interaction would produce data.

This shift was supported by the wave of mobile devices that swept onto markets, including Apple’s iPhone 3G, launched in mid-2008. Such devices are online everywhere, at any time and facilitate much more interaction. The smartphone is actually just a small computer linked to the internet and integrated with a mobile phone, together with systems for photography and video, geolocation (GPS) and others. Lots of variants (such as iPods, tablets, etc.) soon appeared, but also an enormous range of ‘apps’: small software packages providing an online service. The result was a spectacular increase in usage and traffic and, consequently, in the volume and variety of data exchanged and captured. Today, over a billion smartphones are sold every year, while all the apps that can be installed on them are, to all intents and purposes, available from only two platforms (Apple’s iTunes Store and Google Play).

Since each one of us has several points of access to the internet, we are storing more and more of our data online rather than in the memory of our devices. I refer, of course, to the cloud, or rather the servers of these same multinationals, which now no longer have to go to the trouble of seeking your data on your hard drive. Now, data flows are monetized increasingly effectively by specialist, highly discreet intermediaries. For example, the home page of the Guardian’s website connects you to at least sixteen other sites or third-party servers – companies that will convert your innocent browsing into a marketable asset. The commercialization of culture that was deplored by the opponents of television advertising in the 1980s appears, in retrospect, as a very innocent warning shot.

The financial and economic crisis of 2008 did not significantly affect the development of the internet. At the beginning of the 2010s, the quantity and variety of content circulating was unequalled and the systems carrying it had unprecedented capacities (4G for mobile phones plus fibre-optic cabling), while the economic ecosystem linked to the net was concentrated around a few American multinationals – Google, Apple, Facebook, Amazon and Microsoft – operating almost like a cartel.

The platform economy created by applications of all kinds is making the impact of the internet on daily life ever more apparent. Airbnb, Uber, Deliveroo et al. all have economic impacts that are starting to have an effect on such parts of the market as housing and jobs. The internet is no longer a world apart, no longer merely virtual. Video games, learning software, e-readers and chatbots form part of the daily life of ever-increasing numbers of citizens. The data in circulation is no longer restricted to your identity or your log-on times: it also involves your health, career, purchasing power and what you consume, your travel, your interests, opinions and relationships. The emergent ‘Internet of Things’ is bringing a major expansion in the range of devices connected to the web: toys, clothing, ‘domotics’ (home automation), vehicles, drones, electricity meters, etc. Your ‘smart’ TV set is transmitting data about what you watch and, if it is fitted with a voice command, also conveying recordings of your voice – in order to improve voice-recognition software, of course.

We’re not very far from the ‘telescreens’ of George Orwell’s 1984. But it is not Big Brother who has forced omnipresent devices on you: it was you that bought the device. Nobody obliges you to read a particular newspaper online, but nor does anybody oblige that newspaper to tell you how many ‘partners’ it allows to turn your browsing time into a marketable asset. And it’s all done in a very friendly way, just like those television shows that sell your time to some brand or other. In short, it is Orwell’s nightmare, but it is also the nightmare of Guy Debord, sworn enemy of advertising and the ‘society of the spectacle’.

And let us not forget the final level, which is yet more worrying: the more or less intentional influencing of the way we see the world, of how we consume culture and of our political opinions. Since Trump and Brexit, western public opinion has begun to see that its relationship with reality, channelled by social media, is being manipulated by political forces, disinformation agencies and radical groups. Just as with 9/11 and the pirating of cultural products fifteen years ago, governments have become active again.

People may reassure themselves that the data harvested is so massive that no one is capable of processing it. The EU may have revised its regulatory framework on protection of personal data (the GDPR) and a computer-savvy user can always change the default settings on his applications. Meanwhile the utopians, libertarians and defenders of the creative commons are conquering new lands, with the collaborative encyclopaedia Wikipedia, the WikiLeaks site that serves as a loud-hailer and cover for whistle-blowers, the sub-cultures of makers and hackers of every kind, or even with Bitcoin and other decentralized cryptocurrencies.

The NSA leaks (2013)

Everything was going fine… until 6 June 2013, the day that the whistle-blower Edward Snowden began to disclose classified documents about the surveillance of electronic communications by the NSA and its transatlantic partners. Since the Patriot Act, it had been known that such activities went on and that they had already produced results. It was also known that it was not just data about terror suspects that were being collected, but about all of us. But what was striking was the servility of the internet giants – Google, Apple, Facebook and others – and the companies who own the infrastructures of the web and provide the access points to it. The most shocking example lay in the ‘backdoors’ – in other words, bits of program secretly installed within software packages to send out information about users to intelligence agencies, in real time and without any intermediary. The servility of the internet companies can be explained by the fact that they had always seen user data as raw material to be processed and exchanged, whether for profit or in return for governmental goodwill. The public rediscovered this at the beginning of 2018 with the revelations about how Facebook sold its databases to campaign marketing companies. During the US presidential campaign and Brexit referendum, micro-targeting appears to have indeed influenced the outcome of the vote.

Another lesson to be learned from the Snowden revelations concerns the methods of handling data collected. Whether you are an online multinational or an intelligence service, it is now possible to take immense quantities of data and process it in order to identify recurrences or ‘signatures’. This is what is known as ‘deep learning’ or semi-supervised machine learning – the diametrical opposite of probability theory, where the starting point is always a hypothesis. In other words, you start off by knowing what you are looking for. However, these ‘predictive algorithms’ analyse reality on the basis of crude data and create their own rules on the basis of whatever they discover.

This is a development that has appeared only this decade. It represents a change in the nature of the internet itself, because it contrives to increase the ways the internet can be used and the value that can be extracted from big data. In short, the internet has become organized into a vast machine for public and private surveillance and power over it is extremely concentrated; at the same time, changes in its technology provide powerful actors with tools to manage information that literally go beyond human understanding.

Despite all this, the web economy continues to thrive, posing serious questions about awareness raising. Fortunately, counter-proposals are on the increase. Admittedly, they are affected by the perennial tensions between utopianism and commerciality. Cooperative and non-commercial arguments are also beginning to use big data to reinvent themselves and engage politically. So far, however, there are no signs of a threshold effect. We might have to wait another thirty years for that.