Why chatbots still leave us cold

The results are in, and chatbots are still remarkably dissatisfying.

Researchers at Facebook and Microsoft, and a number of collaborating institutions, last week unveiled the formal write-up of something called the "Second Conversational Intelligence Challenge," a competition between chatbots that took place during the NeurIPS artificial intelligence conference in Montreal in December.

Although the competition found some better and some worse programs for chatbots, on the whole, the quality of dialog from these computers was just as lacking as any interaction you may have had with a chatbot on the Web.

Instances from the winning — let's emphasize they were winners — neural network approaches include such annoying goofs as inconsistencies in sequences of statements. For example, Lost in Conversation, the top team in the competition, engaged a human with a bot in a casual conversation about what they both like. The machine uttered the phrase "i love to paint." When the human responds "Awesome! What do you like to paint?" the machine responds not with something about painting, but with "i love to draw portraits of my favorite cars." (Let's call this doubly wrong: not only is drawing not painting, one doesn't generally draw or paint "portraits" of inanimate objects.)

Also: Facebook open sources tower of Babel, Klingon not supported

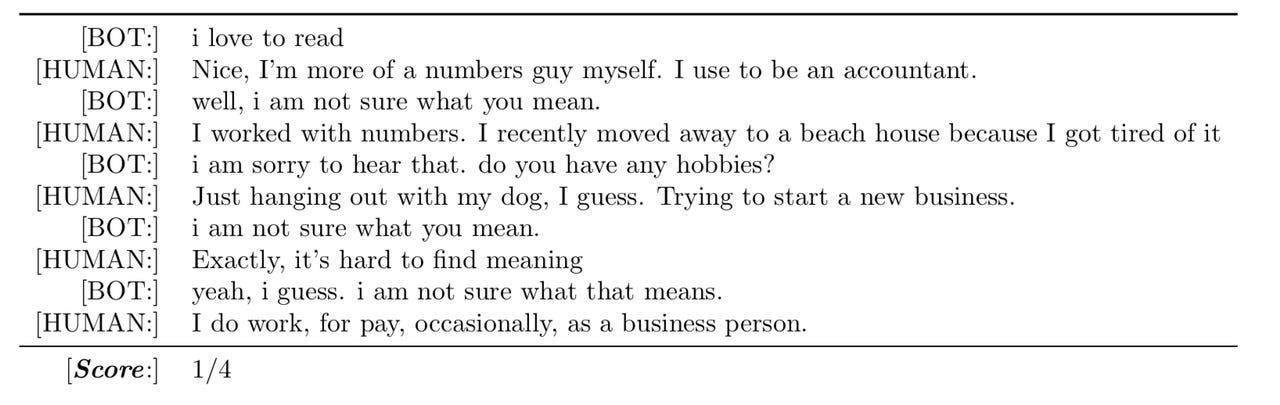

An example of the soul-crushing dialogue that can happen in a chit chat with a bot.

Other annoyances include mindless repetitions of phrases within the same utterances, such as "do you have any pets? do you have pets?" from the second-place team, Hugging Face. Hilarious examples include self-contradiction. The computer says, "Just got done reading a book," to which a human asks, "Which book?" to which the computer responds "I don't read much, i am more into reading."

Perhaps indicative of how loathsome chatbots are, humans who volunteered to test the things for free, by conversing on Facebook's Messenger app, mostly ended up tuning out the bots or engaging in "senseless" and even "offensive" conversations, the researchers write. Those free evaluations "in the wild" were such a mess they had to be completely eliminated from the evaluation of the bots.

Another group of humans were paid to test the machines on the Amazon Mechanical Turk crowdsourcing platform. They generally were more diligent in sticking with the task, no surprise, since they were being paid.

Also: Fairness in AI, StarCraft Edition

The authors, looking over the ratings given to the machines by Turk volunteers, note that even the top-performing neural networks like Lost in Translation and Hugging Face "suffered from errors involving repetition, consistency or being 'boring' at times." Another flaw was that the machines "asked too many questions."

"When the model asks too many questions," the authors write, "it can make the conversation feel disjointed, especially if the questions do not relate to the previous conversation."

The top competitors' neural networks "often failed to be self-consistent across a few dialogue turns," they note. "Even if they happen infrequently, these problems are particularly jarring for a human speaking partner when they do happen." The AI also "asked questions that are already answered. One model asks 'what do you do for a living?' even though the human earlier stated 'i work on computers' resulting in the human replying 'I just told you silly'."

Also: Google explores AI's mysterious polytope

The paper, "The Second Conversational Intelligence Challenge (ConvAI2)," is authored by Emily Dinan, Alexander Miller, Kurt Shuster, Jack Urbanek, Douwe Kiela, Arthur Szlam, Ryan Lowe, Joelle Pineau and Jason Weston of Facebook AI Research, along with Varvara Logacheva, Valentin Malykh and Mikhail Burtsev from the Moscow Institute of Physics and Technology; Iulian Serban of the University of Montreal; Shrimai Prabhumoye, Alan W Black, and Alexander Rudnicky of Carnegie Mellon; and Jason Williams of Microsoft. The paper is posted on the arXiv pre-print server.

The flaws in the chatbots come despite the fact the researchers took great lengths to improve upon the training and test framework in which the teams compete, relative to the previous competition, in 2017.

A snippet from the winning chatbot team, Lost in Translation. Far fewer goofs than others, but still not really sublime conversation.

This time around, the authors offered a benchmark suite of conversational data, published a year ago by Dinan, Urbanek, Szlam, Kiela, and Weston, along with Saizheng Zhang of Montreal's Mila institute for machine learning. That data set, called "Persona-Chat," consists of 16,064 instances of utterances by pairs of human speakers asked to chat with one another on Mechanical Turk. Another set of over 1,000 human utterances were kept in secret as a test set for the neural networks. The data set was provided to all the competing researchers, though not all of them used it.

Each human who helped crowdsource Persona-Chat was given a "profile" of who they're supposed to be — someone who likes to ski, say, or someone who recently got a cat — so that the human interlocutors play a role. Each of the two speakers try to keep their utterances consistent with that role as they engage in dialogue. Likewise, the profiles can be given to a neural network during training, so that sticking to personality is one of the embedded challenges of the competition.

As the authors describe the challenge, "The task aims to model normal conversation when two interlocutors first meet, and get to know each other.

"The task is technically challenging as it involves both asking and answering questions, and maintaining a consistent persona."

The different teams used a variety of approaches, but especially popular was the "Transformer," a modification of the typical "long short-term memory," or LSTM, neural network developed by Google's Ashish Vaswani and colleagues in 2017.

So why all the poor results?

Reviewing the shortcomings, it's clear some of the problem is the rather mechanical way in which the machines are trying to improve their score when being tested. For a neural network to represent a profile or persona, it seems the machine tries to produce the best score by repeating sentences, rather than creating genuinely engaging sentences. "We often observed models repeating the persona sentences almost verbatim," they write, "which might lead to a high persona detection score but a low engagingness score.

"Training models to use the persona to create engaging responses rather than simply copying it remains an open problem."

Must read

- 'AI is very, very stupid,' says Google's AI leader (CNET)

- How to get all of Google Assistant's new voices right now (CNET)

- Unified Google AI division a clear signal of AI's future (TechRepublic)

- Top 5: Things to know about AI (TechRepublic)

That goes back to the design and the intent of the test itself, they write. The tests may be too shallow to develop robust conversation skills. "Clearly many aspects of an intelligent agent are not evaluated by this task, such as the use of long-term memory or in-depth knowledge and deeper reasoning," the authors observe.

"For example, 'Game of Thrones' is mentioned, but a model imitating this conversation would not really be required to know anything more about the show, as in ConvAI2 speakers tend to shallowly discuss each other's interest without lingering on a topic for too long."

The authors suggest a lot of emerging technology in natural language processing may help with some of the shortcomings.

For example, these teams didn't have access to a language encoder-decoder neural network called "BERT" that was introduced by Google late last year. BERT can improve sentence representation.

Similarly, new directions in research might be a solution. For example, the Facebook AI authors late last year introduced something called "Dialogue Natural Language Inference," which trains a neural network to infer whether pairs of utterances "entail" or "contradict" one another or are neutral. That kind of approach may "fix the model," they suggest, by training on a very different kind of task.

Scary smart tech: 9 real times AI has given us the creeps

Previous and related coverage:

What is AI? Everything you need to know

An executive guide to artificial intelligence, from machine learning and general AI to neural networks.

What is deep learning? Everything you need to know

The lowdown on deep learning: from how it relates to the wider field of machine learning through to how to get started with it.

What is machine learning? Everything you need to know

This guide explains what machine learning is, how it is related to artificial intelligence, how it works and why it matters.

What is cloud computing? Everything you need to know about

An introduction to cloud computing right from the basics up to IaaS and PaaS, hybrid, public, and private cloud.