Last Monday evening, 12 November 2018, Google and a number of other services experienced a 74-minute outage. It’s not the first time this has happened, and while it may be tempting to assume that bad actors are at work, incidents like this mostly demonstrate how fragile the process of getting packets from one point on the Internet to another really is.

Our logs show that at 21:12 UTC on Monday, a Nigerian ISP, MainOne, accidentally misconfigured part of their network, causing a "route leak". This resulted in traffic for Google and a number of other networks being routed over unusual network paths. Incidents like this actually happen quite frequently, but in this case the traffic generated by Google users was so great that it overwhelmed the intermediary networks, leaving numerous services (predominantly Google) unreachable.

You might be surprised to learn that an error by an ISP somewhere in the world could result in Google and other services going offline. This blog post explains how that can happen and what the Internet community is doing to try to fix this fragility.

What Is A Route Leak, And How Does One Happen?

When traffic is routed outside of regular and optimal routing paths, this is known as a “route leak”. An explanation of how they happen requires a little bit more context.

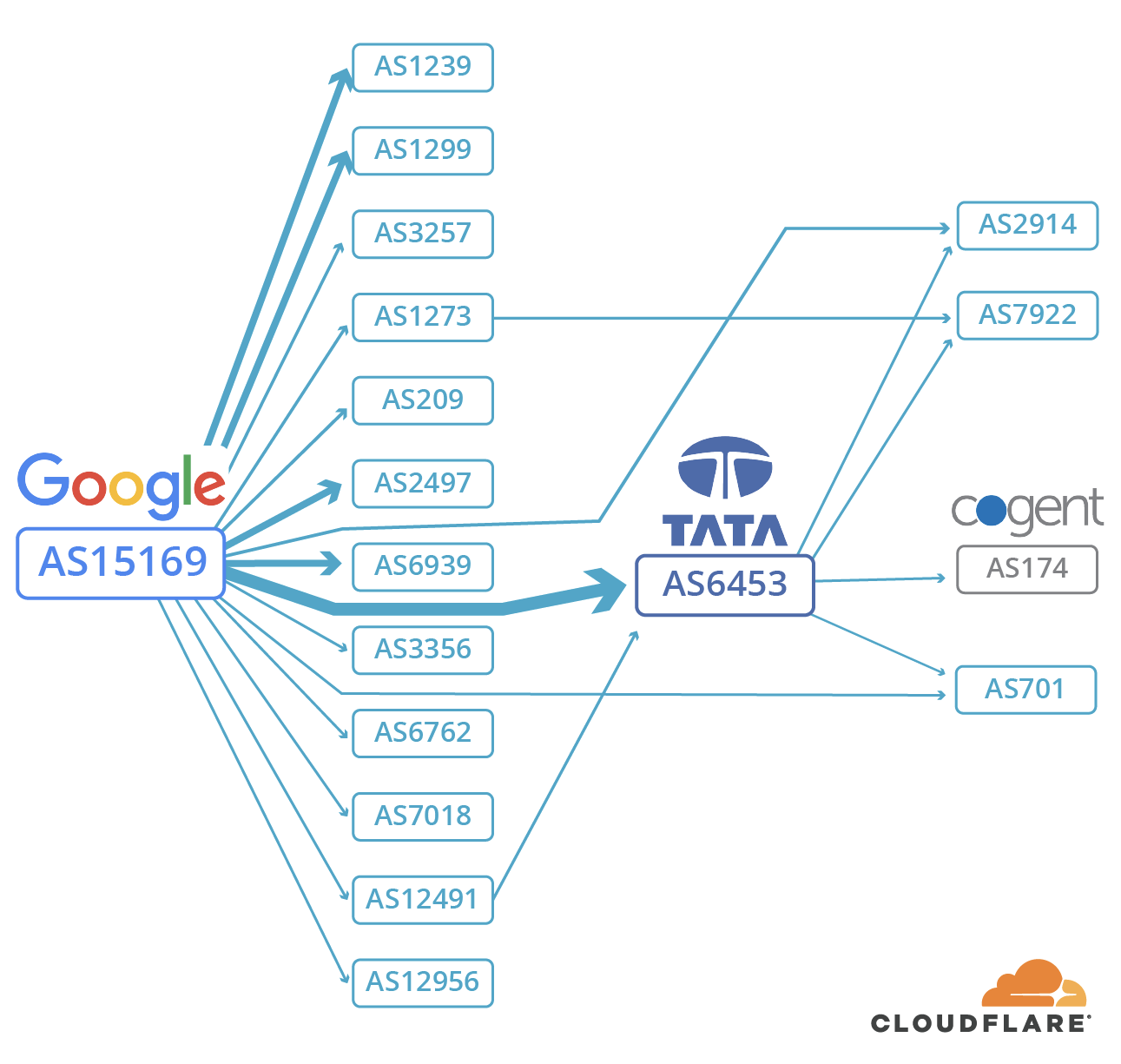

Every network and network provider on the Internet has its own Autonomous System (AS) number. This number is unique and identifies the part of the Internet that the organization controls. Of note for the following explanation, Google’s primary AS number is 15169. That's Google's corner of the Internet and where Google traffic should end up, by the fastest path.

A typical view of how Google/AS15169’s routes are propagated to Tier-1 networks.

As seen above, Google is directly connected to most of the Tier-1 networks (the largest networks, which link large swathes of the Internet). When everything is working as it should, Google’s AS Path, the route packets take from network to network to reach their destination, is actually very simple. For example, in the diagram above, if you were a customer of Cogent and you were trying to get to Google, the AS Path that you would see is “174 6453 15169”. That string of numbers is like a sequence of waypoints: start on AS 174 (Cogent), go to Tata (AS 6453), then go to Google (AS 15169). So, Cogent subscribers reach Google via Tata, a huge Tier-1 provider.

During the incident, MainOne misconfigured their routing, as reflected in the AS Path “20485 4809 37282 15169”. As a result of this misconfiguration, any networks that MainOne peered with (i.e. were directly connected to) potentially had their routes leaked through this erroneous path. For example, the Cogent customer in the paragraph above (AS 174) wouldn’t have gone via Tata (AS 6453) as they should have. Instead, they were routed first through TransTelecom (a Russian carrier, AS 20485), then to China Telecom CN2 (a cross-border Chinese carrier, AS 4809), then on to MainOne (the Nigerian ISP that misconfigured everything, AS 37282), and only then were they finally handed off to Google (AS 15169). In other words, a user in London could have had their traffic go from Russia to China to Nigeria, and only then reach Google.
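
To make the "sequence of waypoints" idea concrete, here is a minimal sketch that expands the two AS Paths quoted above into readable hops. The AS numbers and network names come from this post; the function name `describe_as_path` is purely illustrative.

```python
# AS numbers and names as given in this post.
AS_NAMES = {
    174: "Cogent",
    6453: "Tata",
    15169: "Google",
    20485: "TransTelecom",
    4809: "China Telecom CN2",
    37282: "MainOne",
}


def describe_as_path(as_path: str) -> str:
    """Expand a space-separated AS Path into a readable chain of hops."""
    hops = [int(asn) for asn in as_path.split()]
    return " -> ".join(f"{AS_NAMES.get(asn, 'unknown')} (AS{asn})" for asn in hops)


normal = describe_as_path("174 6453 15169")
leaked = describe_as_path("20485 4809 37282 15169")
print(normal)
print(leaked)
```

The last hop is Google in both cases; what changes is every network the traffic has to cross before getting there.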

But… Why Did This Impact So Many People?

The root cause of this was MainOne misconfiguring their routing. As mentioned earlier, incidents like this actually happen quite frequently. The impact of this misconfiguration should have been limited to MainOne and its customers.

However, what turned this from a relatively isolated incident into a much broader one is that CN2, China Telecom’s premium cross-border carrier, was not filtering the routes that MainOne provided to them. In other words, MainOne told CN2 that it had authority to route Google’s IP addresses. Most networks verify this, and if it is incorrect, filter it out. CN2 did not; it simply trusted MainOne. As a result, MainOne’s misconfiguration propagated to a substantially larger network. Compounding this, it is likely that the Russian network TransTelecom behaved towards CN2 as CN2 had behaved towards MainOne: they accepted the routing paths that CN2 gave them without any verification.
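
The kind of verification described above can be sketched as a simple allow-list check: a provider accepts an announcement from a customer only if the prefix falls inside address space registered for that customer. This is an illustration under stated assumptions, not how any particular carrier implements filtering. The table `CUSTOMER_ALLOWED` and the function `accept_announcement` are hypothetical, and the AS number and prefixes are reserved documentation values rather than real allocations.

```python
import ipaddress

# Hypothetical allow-list: prefixes each customer AS may announce.
# AS64500 and the prefixes are documentation values, not real allocations.
CUSTOMER_ALLOWED = {
    64500: [ipaddress.ip_network("192.0.2.0/24")],
}


def accept_announcement(customer_asn: int, prefix: str) -> bool:
    """Accept a prefix only if it lies within space registered for the customer."""
    announced = ipaddress.ip_network(prefix)
    allowed = CUSTOMER_ALLOWED.get(customer_asn, [])
    return any(
        announced.version == net.version and announced.subnet_of(net)
        for net in allowed
    )


print(accept_announcement(64500, "192.0.2.0/24"))     # the customer's own space
print(accept_announcement(64500, "198.51.100.0/24"))  # someone else's space
```

With a check like this in place, a customer that mistakenly announces another network's addresses has those announcements dropped at the first hop instead of passed along.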

This demonstrates how much trust is involved in the underlying connections that make up the Internet. It's a network of networks (an internet!) that works by cooperation between different entities (countries and companies).

This is how a routing mistake made in Nigeria then propagated through China and then through Russia. Given the amount of traffic involved, the networks were overwhelmed and Google was unreachable.

It is worth explicitly stating: the fact that Google traffic was routed through Russia and China before getting to Nigeria, and only then hitting the correct destination, made it appear to some people that the misconfiguration was nefarious. We do not believe this to be the case. Instead, this incident reflects a mistake that was not caught by appropriate network filtering. There was too much trust and not enough verification across a number of networks: this is a systemic problem that makes the Internet more vulnerable to mistakes than it should be.

How to Mitigate Route Leaks Like These

Along with Cloudflare’s internal systems, BGPMon.net and ThousandEyes detected the incident. BGPMon has several methods to detect abnormalities; in this case, they were able to detect that the providers in the paths to reach Google were irregular.
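
One way to picture "irregular providers in the path" is to compare the network sitting directly in front of the origin AS against a baseline of providers normally seen there. The sketch below is only an illustration of that idea and does not describe BGPMon's or Cloudflare's actual detection logic. The function names are hypothetical, and the baseline contains just Tata (AS 6453), taken from the example path earlier in this post.

```python
def origin_upstream(as_path: str, origin: int):
    """Return the AS directly before the origin, or None if the path does not end at it."""
    hops = [int(asn) for asn in as_path.split()]
    if not hops or hops[-1] != origin:
        return None
    # Walk backwards, skipping repeats of the origin (AS Path prepending).
    for asn in reversed(hops):
        if asn != origin:
            return asn
    return None


def is_irregular(as_path: str, origin: int, known_upstreams: set) -> bool:
    """Flag a path whose provider next to the origin is not in the baseline."""
    upstream = origin_upstream(as_path, origin)
    return upstream is not None and upstream not in known_upstreams


# Assumed baseline for Google (AS 15169): only Tata, from the example above.
BASELINE = {6453}

print(is_irregular("174 6453 15169", 15169, BASELINE))
print(is_irregular("20485 4809 37282 15169", 15169, BASELINE))
```

In the leaked path, MainOne (AS 37282) appears directly in front of Google, which is not in the baseline, so the path is flagged.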

Cloudflare’s systems immediately detected this and took auto-remediation action.

Thankfully our systems detected it and mitigated it! pic.twitter.com/qFiDkrn2Kd

— Jerome Fleury (@Jerome_UZ) November 13, 2018

Some more information about Cloudflare’s Auto Remediation system: https://blog.cloudflare.com/the-internet-is-hostile-building-a-more-resilient-network/

Looking Forward

Cloudflare is committed to working with others to drive more secure and stable routing. We recently wrote about RPKI and how we’ll start enforcing “Strict” RPKI validation, and we will continue to strive for secure Internet routing. We hope that all networks providing transit services can ensure proper filtering of their customers’ announcements, end hijacks and route leaks, and implement best practices like BCP-38.
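
For readers unfamiliar with RPKI, the core check it enables is route origin validation, as specified in RFC 6811: an announcement is compared against signed records (ROAs) stating which AS may originate a prefix and how specific the announcement may be. The sketch below follows that logic in simplified form and says nothing about how Cloudflare's own validation is built. The `Roa` class, the `validate_origin` function, and the sample record are hypothetical, using documentation prefixes and AS numbers.

```python
import ipaddress
from dataclasses import dataclass


@dataclass(frozen=True)
class Roa:
    prefix: str      # address space covered by the record
    max_length: int  # most specific prefix length allowed
    asn: int         # AS authorized to originate the prefix


def validate_origin(prefix: str, origin_asn: int, roas) -> str:
    """Classify an announcement as 'valid', 'invalid' or 'not-found'."""
    route = ipaddress.ip_network(prefix)
    covered = False
    for roa in roas:
        roa_net = ipaddress.ip_network(roa.prefix)
        if route.version != roa_net.version or not route.subnet_of(roa_net):
            continue  # this ROA does not cover the announced prefix
        covered = True
        if roa.asn == origin_asn and route.prefixlen <= roa.max_length:
            return "valid"
    # Covered by a ROA but matched by none: invalid. No covering ROA: not-found.
    return "invalid" if covered else "not-found"


# Hypothetical ROA using documentation values.
ROAS = [Roa("192.0.2.0/24", 24, 64500)]

print(validate_origin("192.0.2.0/24", 64500, ROAS))
print(validate_origin("192.0.2.0/24", 64501, ROAS))
print(validate_origin("198.51.100.0/24", 64500, ROAS))
```

Origin validation looks only at the AS that originates a prefix, not at the networks in between, so it is one layer that works alongside the customer filtering mentioned above rather than replacing it.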