Text by: Michael Seemann / Data Visualization by: Michael Kreil

[(original) German Version]

The Internet has always been my dream of freedom. By this I mean not only the freedom of communication and information, but also the hope for a new freedom of social relations. Despite all the social mobility of modern society, social relations are still somewhat constricting today. From kindergarten to school, from the club to the workplace, we are constantly fed through organizational forms that categorize, sort and thereby de-individualize us. From grassroots groups to citizenship, the whole of society is organized like a group game, and we are rarely allowed to choose our fellow players. It’s always like, „Find your place, settle down, be a part of us.“

The Internet seemed to me to be a way out. If every human being could relate directly to every other, as my naive-utopian thought went, then there would no longer be any need for communalized structures. Individuals could finally interact as peers and organize themselves. Communities would emerge as a result of individual relationships, rather than the other way around. Ideally, there would no longer be any structures at all beyond the customized, self-determined network of relations of the individual.1

The election of Donald Trump was only the latest incident to tear me rudely from my hopes. The Alt-Right movement – a union of right-wing radical hipsterdom and the nihilistic excesses of nerd culture – boasts that it „shitposted“ Trump into the White House. This refers to the massive support of the official election campaign by an internet-driven grassroots meme campaign. And even though you can argue that the influence of this movement on the election was not as great as the trolls would have you believe, the campaign clearly demonstrated the immense power of digital agitation.

But it wasn’t the discursive power of internet-driven campaigns that frightened me so badly this time. This had been common knowledge since the Arab Spring and Occupy Wall Street. It was the complete detachment from facts and reality unfolding within the Alt-Right which, driven by the many lies of Trump himself and his official campaign, has given rise to an uncanny parallel world. The conspiracy theorists and crackpots have left their online niches to rule the world.

In my search for an explanation for this phenomenon, I repeatedly came across the connection between identity and truth. People who believe that Hillary and Bill Clinton had a number of people murdered and that the Democratic Party was running a child sex trafficking ring in the basement of a pizza shop in Washington DC are not simply stupid or uneducated. They spread this message because it signals membership in their specific group. David Roberts coined the term „tribal epistemology“ for this phenomenon, and defines it as follows:

Information is evaluated based not on conformity to common standards of evidence or correspondence to a common understanding of the world, but on whether it supports the tribe’s values and goals and is vouchsafed by tribal leaders. “Good for our side” and “true” begin to blur into one.2

New social structures with similar tribal dynamics have also evolved in the German-speaking Internet. Members of these „digital tribes“ rarely know each other personally and often don’t even live in the same city or know each other’s real names. And yet they are closely connected online, communicating constantly with one another while splitting off from the rest of the public, both in terms of ideology and of network. They feel connected by a common theme, and by their rejection of the public debate which they consider to be false and „mainstream“.

It’s hardly surprising, then, that precisely these „digital tribes“ can be discovered as soon as you start researching the spread of fake news. Fake news is not, as is often assumed, the product of sinister manipulators trying to steer public opinion into a certain direction. Rather, it is the food for affirmation-hungry tribes. Demand creates supply, and not the other way around.

Since conducting the present study, I have become convinced that „digital tribalism“ is at the heart of the success of movements such as Pegida and the AfD party, as well as the Alt-Right and anti-liberal forces that have blossomed all over the world since 2016.

For the study at hand, we analysed hundreds of thousands of tweets over the course of many months, working through research question after research question, scouring heaps of literature, and developing and testing a whole range of theories. On the basis of Twitter data on fake news, we came across the phenomenon of digital tribalism, and took it from there.3 In this study, we show how fake news from the left and the right of the political spectrum is disseminated in practice, and how social structures on the Internet can reinforce the formation of hermetic groups. We also show what the concept of „filter bubbles“ can and cannot explain, and provide a whole new perspective on the right-wing Internet scene in Germany, which can help us understand how hate is developed, fortified, spread and organized on the web. However, we will not be able to answer all the questions that this phenomenon gives rise to, which is why this essay is also a call for further interdisciplinary research.

Blue Cloud, Red Knot

In early March 2017, there was some commotion on the German-language Twitter platform: the German authorities, it was alleged, had very quietly issued a travel warning for Sweden, but both the government and the media were hushing the matter up, since the warning had been prompted by an elevated terrorism threat. Many users agreed that the silence was politically motivated. The bigger picture is that Sweden, like Germany, had accepted a major contingent of Syrian refugees. Ever since, foreign media, and right-wing outlets in particular, have been claiming that the country is in the grip of a civil war. Reports about the terrorism alert being kept under wraps fed right into that belief.

For proof, many of these tweets did in fact refer to the section of the German Foreign Office website that includes the travel advisory for Sweden.4 Users who followed the link in early March did in fact find a level-3 (“elevated”) terrorism alert, which remains in place to this day. The website also notes the date of the most recent update: March 1, 2017. What it did not mention at the time was that the Swedish authorities had issued their revised terrorism alert a while back – and that it had been revised downwards rather than upwards, from level 4 (“high”) to level 3 (“elevated”).

After some time, the Foreign Office addressed the rumors via a clarification of facts on its website. Several media picked up on the story and the ensuing corrections. But the damage was done. The fake story had already reached thousands of people who came away feeling that their views had been corroborated: firstly, that conditions in Sweden resembled a civil war, and secondly, that the media and the political sphere were colluding to keep the public in the dark.

What happened in early March fits the pattern of what is known as fake news – reports that have virtually no basis in fact, but spread virally online due to their ostensibly explosive content.5 Especially in the right-wing sectors of the web, such reports are now “business as usual”.6

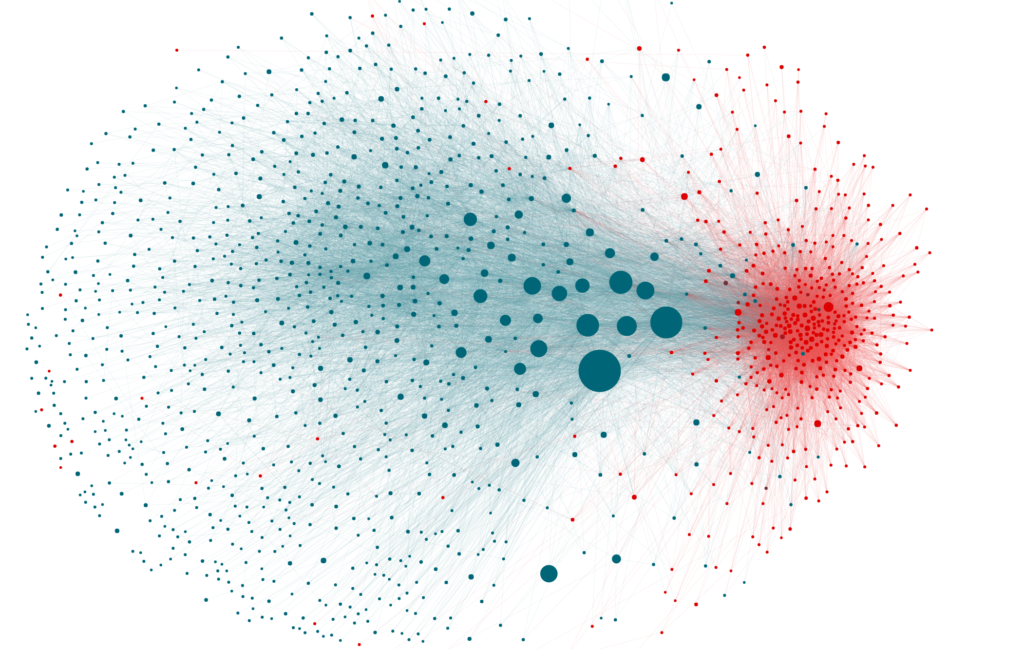

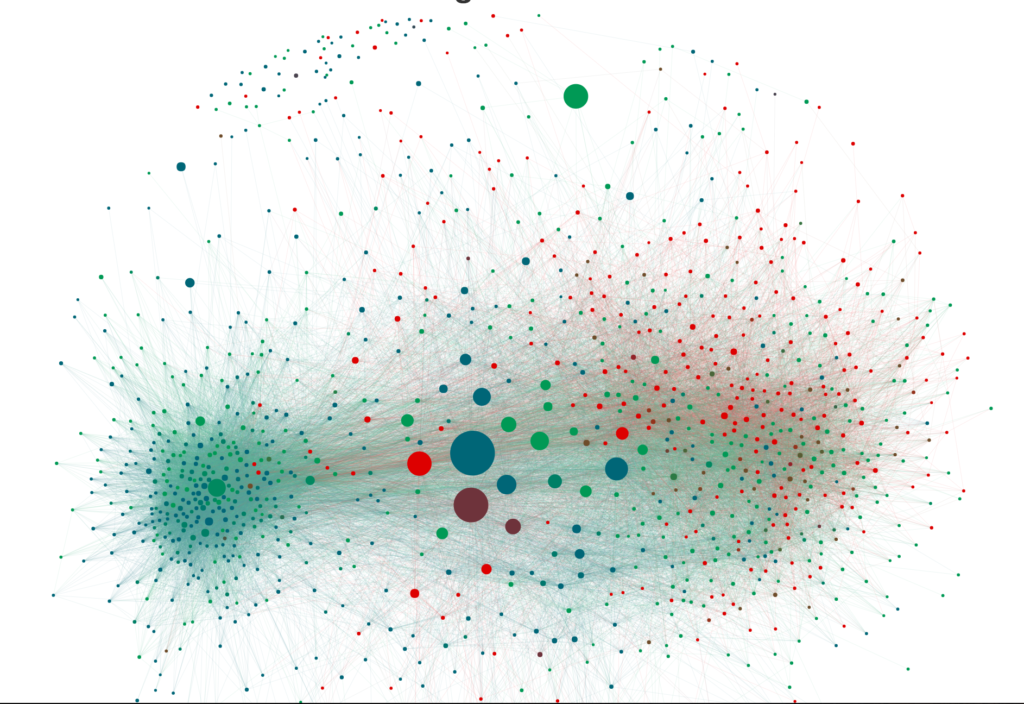

Data journalist Michael Kreil took a closer look at the case. He wanted to know how fake news spread, and whether corrections were an effective countermeasure. He collected the Twitter data of all accounts that had posted something on the issue, and flagged all tweets sharing the fake news as red and all those forwarding the correction as blue. He then compiled a graphic visualization of these accounts that illustrates the density of their respective networks. If two accounts follow each other and/or follow the same people, or are followed by the same people, they are represented by dots in greater proximity. In other words, the smaller the distance between two dots is, the more closely-knit the networking connections are between the accounts they refer to. The dot size corresponds to the account’s follower count.
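The proximity rule described above can be illustrated with a small sketch. The text does not say which layout algorithm or similarity measure was used for the graphic, so everything here is an assumption: the `closeness` score, its equal weighting of the three signals, and the toy accounts are hypothetical and only show how mutual follows, shared followed accounts, and shared followers could be folded into one number.

```python
from collections import defaultdict


def jaccard(a, b):
    """Share of common elements between two sets (0.0 if both are empty)."""
    union = a | b
    return len(a & b) / len(union) if union else 0.0


def build_followers(follows):
    """Invert a {account: set of followed accounts} mapping."""
    followers = defaultdict(set)
    for account, followed in follows.items():
        for target in followed:
            followers[target].add(account)
    return followers


def closeness(u, v, follows, followers):
    """Hypothetical score in [0, 3]; higher would mean dots drawn closer together."""
    u_follows, v_follows = follows.get(u, set()), follows.get(v, set())
    # Signal 1: the two accounts follow each other.
    mutual = 1.0 if v in u_follows and u in v_follows else 0.0
    # Signal 2: they follow the same people.
    same_followed = jaccard(u_follows, v_follows)
    # Signal 3: they are followed by the same people.
    same_followers = jaccard(followers.get(u, set()), followers.get(v, set()))
    return mutual + same_followed + same_followers


# Toy data, invented for illustration only.
follows = {
    "a": {"b", "x", "y"},
    "b": {"a", "x", "y"},
    "c": {"z"},
}
followers = build_followers(follows)
score_ab = closeness("a", "b", follows, followers)
score_ac = closeness("a", "c", follows, followers)
```

In this toy network, accounts "a" and "b" follow each other and share two followed accounts, so they score higher than "a" and "c", which share nothing. A layout algorithm would then place the first pair closer together.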

The result is striking: where we might expect to see one large network, two almost completely distinct networks appear. The disparity between the two is revealed both by the coloring and by the relative position of the accounts. On the left, we see a fairly diffuse blue cloud with frayed edges. Several large blue dots relatively close together in the center represent German mass media such as Spiegel Online, Zeit Online, or Tagesschau. The blue cloud encompasses all those accounts that reported or retweeted, which is to say, forwarded, the correction.

On the other side, we see a somewhat smaller and more compact red mass consisting of numerous closely-spaced dots. These are the accounts that disseminated the fake news story. They are not only closely interconnected, but also cut off from the network represented by the large blue cloud.

What is crucial here is the clear separation between the red and blue clusters. There is virtually no communication between the two. Every group keeps to itself, its members connecting only with those who already share the same viewpoint.

“That’s a filter bubble”, Michael Kreil said when he approached me, graph in hand, and asked whether I wanted to join him in investigating the phenomenon. Kreil is on the DataScience&Story team at Tagesspiegel, a daily newspaper published in Berlin, which puts him at the forefront of German data journalism. I accepted, even though I was skeptical of the filter bubble hypothesis.

At first glance, the hypothesis is plausible. A user is said to live in a filter bubble when his or her social media accounts no longer present any viewpoints that do not conform to his or her own. Eli Pariser coined the term in 2011, with a view to the algorithms used by Google and Facebook to personalize our search results and news feeds by pre-sorting search results and news items.7 Designed to gauge our personal preferences, these algorithms present us with a filtered version of reality. Filter bubbles exist on Twitter as well, since every user can create a customized small media network by following accounts that stand for a view of the world that interests them. Divergent opinions, conflicting worldviews, or simply different perspectives disappear from view.

This is why the filter bubble theory has frequently served as a convincing explanation in the debate about fake news. If we are only ever presented with points of view that confirm our existing opinions, rebuttals of those opinions might not even reach us any more. So filter bubbles turn into an echo chamber where all we hear is what we ourselves are shouting out into the world.

Verification

Before examining the filter bubble theory, however, we first tried to reproduce the results using a second example of fake news. This time, we found it in the mass media.

On February 5, 2017, the infamous German tabloid BILD reported on an alleged „sex mob“ of about 900 refugees who had allegedly harassed people on New Year’s Eve in Frankfurt’s Fressgass district. This was explosive news because similar riots had occurred in Cologne on New Year’s Eve the previous year. The BILD story quickly made the rounds, mainly because it gave the impression that the Frankfurt police force had kept the incident quiet for more than a month.

In fact, the BILD journalist had been told the story by a barkeeper who turned out to be a supporter of the right-wing AfD party, and BILD had printed it immediately without sufficient fact-checking. As it turned out, the police were unable to confirm any of this, and no other source could be found for the incident. Even so, other media outlets picked up on the story, though often with a certain caution. In the course of the scandal, it became clear that the barkeeper had made up the entire story, and BILD was forced to apologize publicly.

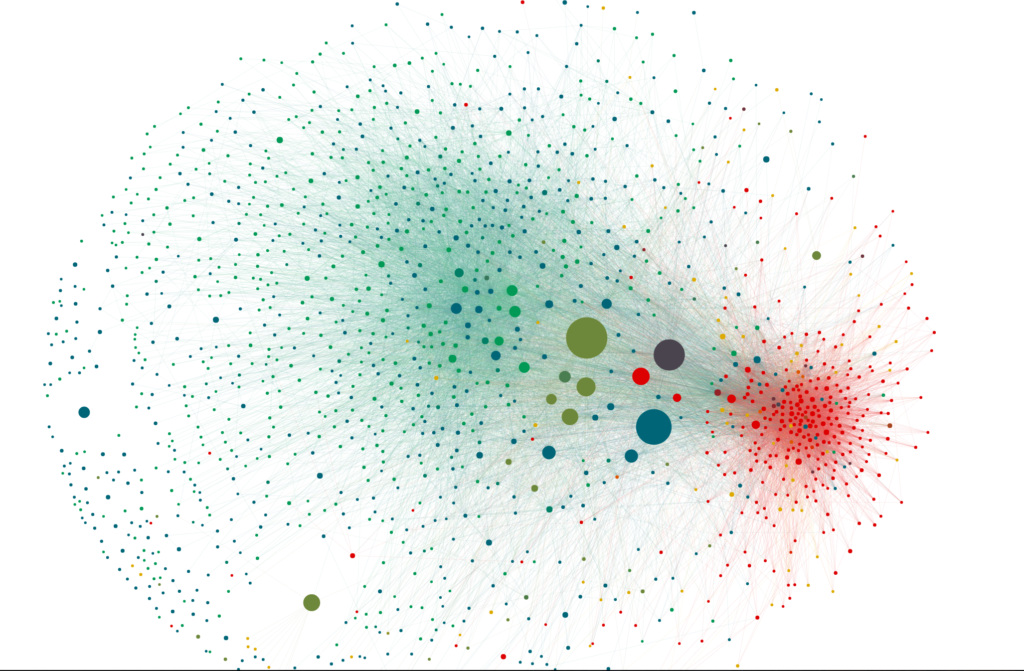

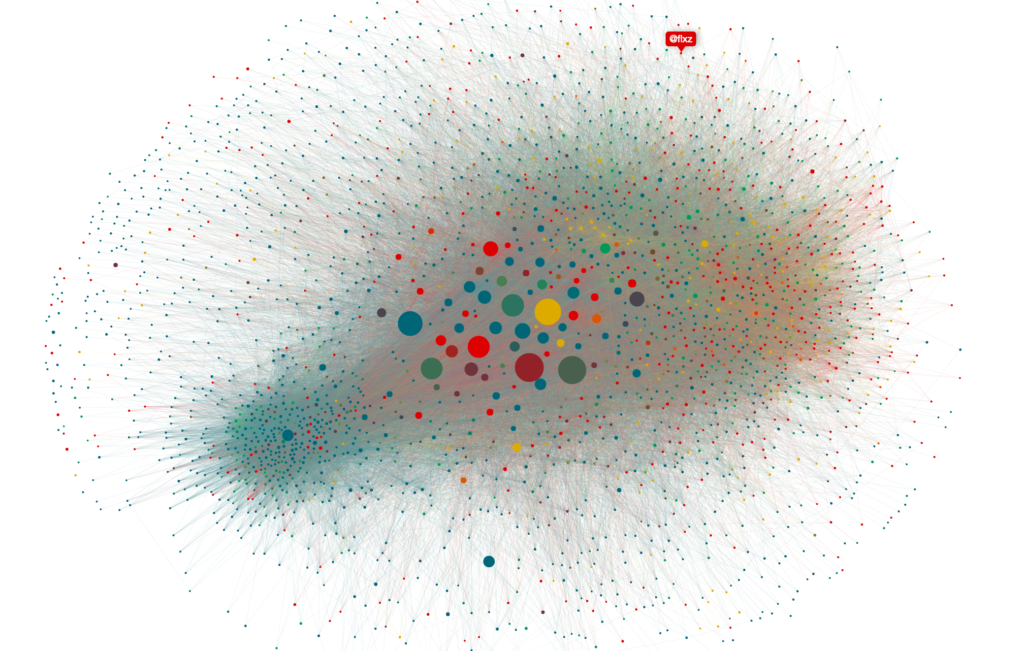

This time, we had to take a slightly different approach for evaluation, because this particular debate on Twitter had been far more complex, and so many reports couldn’t be clearly assigned to either of the two categories, „fake news“ or „correction“. We needed a third category in between. We collected all the articles on the topic in a spreadsheet and flagged them as either spreading the false report (red), or just passing it on in a distanced or indecisive manner (yellow). Phrases such as „According to reports in BILD…“, or indications that the police could not confirm the events, were sufficient for the label „indecisive“. Of course, we also collected articles disproving the fake news story (blue). We also assigned some of the tweets to a fourth category: Meta. The mistake the BILD newspaper made sparked a broader debate on how a controversial, but well-established media company could become the trigger point of a major fake news campaign. These meta-debate articles were colored in green.8

Despite these precautionary measures, it is obvious even at first glance that the results of our first analysis have been reproduced here. The cloud of corrections, superimposed with the meta-comments, is visible in blue and green, brightened up here and there by yellow specks of indecision. Most noticeably, the red cluster of fake news clearly stands out from the rest again, in terms of color and of connectivity. Our fake news bubble is obviously a stable, reproducible phenomenon.

The Filter Bubble Theory

So we were still dealing with the theory that we were seeing the manifestation of a filter bubble. To be honest, I was skeptical. The existence of a filter bubble is not what our examples prove: The filter bubble theory makes assertions about who sees which news, while our graph only visualizes who is disseminating which news. So to prove the existence of a filter bubble, we would have to find out who does or doesn’t read these news items.

This information, however, is also encoded in the Twitter data, and can simply be extracted. For any given Twitter account, we can see the other accounts it follows. In a second step, we can bring up all the tweets sent from those accounts. Once we have the tweets from all the accounts the original account follows, we can reconstruct the timeline of the latter. In other words, we can peer into the filter bubble of that particular account and recreate the worldview within it. In a third step, we can determine whether a particular piece of information penetrated that filter bubble or not.
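The three steps can be sketched in a few lines of code. This is a minimal illustration under stated assumptions, not the pipeline used for the study: the data structures (`follows`, `tweets_by_author`), the function names, and the example URL are hypothetical, and the sketch ignores retweets and any reordering of the timeline by the platform. It measures only whether a link could technically have appeared in a timeline, not whether anyone read it.

```python
def reconstruct_timeline(account, follows, tweets_by_author):
    """Steps 1 and 2: gather every tweet sent by the accounts `account` follows."""
    timeline = []
    for followed in follows.get(account, set()):
        timeline.extend(tweets_by_author.get(followed, []))
    return timeline


def was_exposed(account, follows, tweets_by_author, target_urls):
    """Step 3: did any tweet in the reconstructed timeline link to a target URL?"""
    for tweet in reconstruct_timeline(account, follows, tweets_by_author):
        if target_urls & set(tweet["urls"]):
            return True
    return False


def exposure_rate(accounts, follows, tweets_by_author, target_urls):
    """Share of accounts whose timeline contained at least one target link."""
    if not accounts:
        return 0.0
    exposed = sum(
        was_exposed(a, follows, tweets_by_author, target_urls) for a in accounts
    )
    return exposed / len(accounts)


# Toy data, invented for illustration only.
follows = {
    "spreader_1": {"news_outlet"},
    "spreader_2": {"friend"},
}
tweets_by_author = {
    "news_outlet": [{"urls": ["https://example.org/correction"]}],
    "friend": [{"urls": []}],
}
rate = exposure_rate(
    ["spreader_1", "spreader_2"],
    follows,
    tweets_by_author,
    {"https://example.org/correction"},
)
```

Here one of the two toy accounts follows an outlet that tweeted the correction, so the exposure rate is one half.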

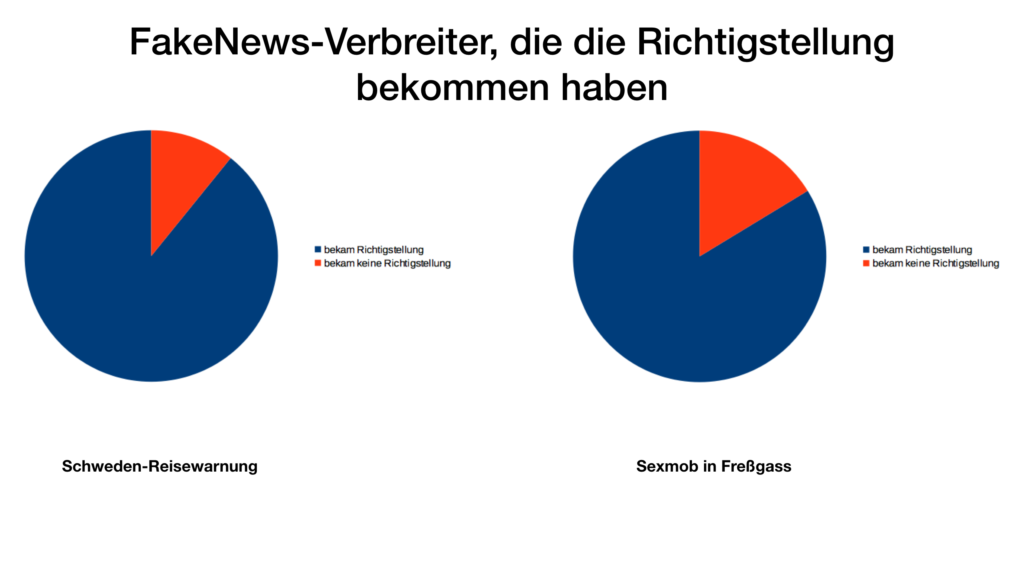

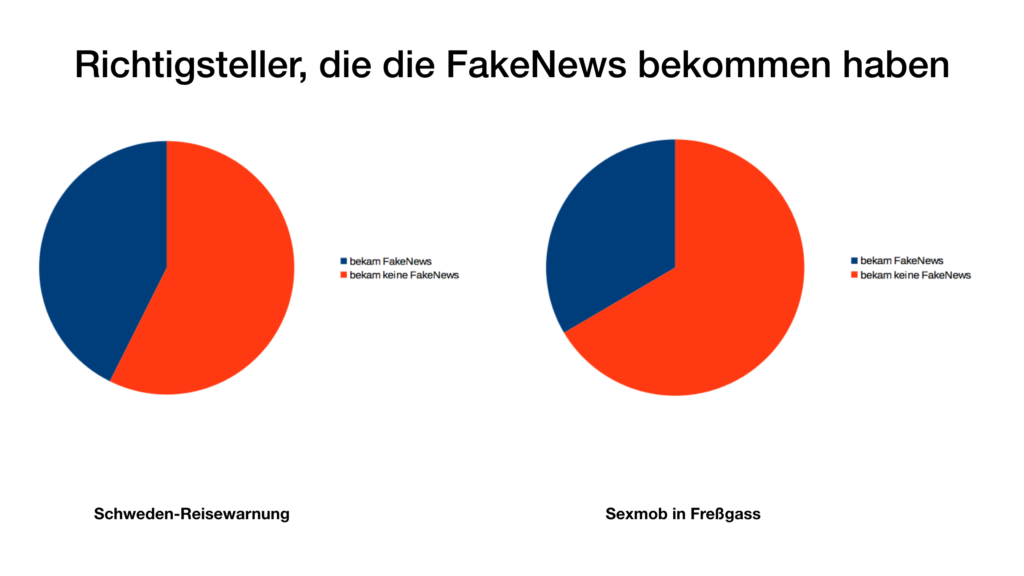

In this manner, we were able to retrieve the timelines of all accounts that had spread the fake news story, and scan them for links to the correction. The result is surprising: Almost all the disseminators of the false terrorism alert story – 89.2 percent, to be exact – had also had the correction in their timelines. We then repeated the test for the Fressgass story. In this second example, 83.7 percent of fake news distributors had at least technically been reached by the counterstatement.

This finding contradicts the filter bubble theory. It was obviously not for technical reasons that these accounts continued to spread the fake news story and not the subsequent corrections. At the very least, the filter bubbles of these fake news disseminators had been far from airtight.

Without expecting much, we ran a counter-test: What about those who had forwarded the correction – what did their timelines reveal? Had they actually been exposed to the initial fake news story they were so heroically debunking? We downloaded their timelines and looked into their filter bubbles. Once again, we were surprised by what we found: a mere 43 percent of the correction disseminators could have known about the fake news on Sweden. In the case of the Fressgass story, the figure is even lower, at 33 percent. So these results do indicate a filter bubble, albeit on the other side.

To sum up, the filter bubble effect insulating fake news disseminators against corrections is negligible, whereas the converse effect is much more noticeable.9 These findings might seem to turn the entire filter bubble discourse upside down. According to our examples, filter bubbles are not to blame for the unchecked proliferation of fake news. On the contrary, we have demonstrated that while a filter bubble can impede the dissemination of fake news, it did not shield users from being confronted with the correction.

Cognitive Dissonance

We are not dealing with a technological phenomenon. The reason why people whose accounts appear within the red area are spreading fake news is not a filter-induced lack of information or a technologically distorted view of the world. They receive the corrections, but do not forward them, so we must assume that their dissemination of fake news has nothing to do with whether a given piece of information is correct or not – and everything to do with whether it suits them.

Instead, this resembles a phenomenon Leon Festinger called „Cognitive Dissonance“ and investigated in the 1950s.10 Acting as his own guinea pig, Festinger joined a sect that claimed the end of the world was near, and repeatedly postponed the exact date. He wanted to know why the members of the sect were undeterred by the succession of false prophecies, and didn’t simply stop believing in their doomsday doctrine. His theory was that people tend to have a distorted perception of events depending on how much they clash with their existing worldview. When an event runs counter to our worldview, it generates the aforementioned cognitive dissonance: reality proves itself incompatible with our idea of it. Since the state of cognitive dissonance is so disagreeable, people try to avoid it intuitively by adopting a behavior psychologists also call confirmation bias – this means perceiving and taking seriously only such information that matches your worldview, while disregarding or squarely denying any other information.

In this sense, the theory of cognitive dissonance tells us that the red cloud likely represents a group of people whose specific worldview is confirmed by the fake news in question. To test this hypothesis, we extracted several hundred of the most recent tweets from our Twitter user pools, both from the fake news disseminators and from those who had forwarded the correction, and compared word frequencies amongst the user groups. If the theory was correct, we should be able to show that the fake news group was united by a shared, consistent worldview.

We subjected a total of 380,000 tweets to statistical analysis, examining the relative frequencies of words in both groups and compiling a word ranking. The expressions at the top of each list are the ones that appear more commonly in tweets from the one group and more rarely in the other’s.11
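One way to build such a ranking is sketched below. The exact scoring used in the study is not spelled out in this passage, so the approach here is an assumption: each word's relative frequency in one group is divided by its relative frequency in the other, with add-one smoothing so that words absent from one group do not cause a division by zero. The function names, the stopword handling, and the toy tweets are all hypothetical.

```python
import re
from collections import Counter

TOKEN = re.compile(r"\w+")


def word_counts(tweets):
    """Count lower-cased word tokens across a list of tweet texts."""
    counts = Counter(w for text in tweets for w in TOKEN.findall(text.lower()))
    return counts, sum(counts.values())


def distinctive_words(group_tweets, other_tweets, stopwords=frozenset(), top=16):
    """Rank words that are frequent in one group and rare in the other."""
    g_counts, g_total = word_counts(group_tweets)
    o_counts, o_total = word_counts(other_tweets)
    vocab_size = len(set(g_counts) | set(o_counts))
    scores = {}
    for word, count in g_counts.items():
        if word in stopwords:
            continue
        # Smoothed relative frequency in each group (assumed scoring scheme).
        g_rate = (count + 1) / (g_total + vocab_size)
        o_rate = (o_counts[word] + 1) / (o_total + vocab_size)
        scores[word] = g_rate / o_rate
    # Highest ratio first; ties broken alphabetically for stable output.
    return sorted(scores, key=lambda w: (-scores[w], w))[:top]


# Toy tweets, invented for illustration only.
group_a = ["zensur und politik", "zensur islam"]
group_b = ["nachrichten und politik", "nachrichten online"]
ranking = distinctive_words(group_a, group_b, stopwords={"und"})
```

In the toy data, "zensur" appears twice in the first group and never in the second, so it tops the ranking, while "politik", which both groups use equally often, comes last.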

After eliminating negligible words like “from”, “the”, etc., and prominent or frequently mentioned account names, the ranking for the fake news group shows a highly informative list of common words. In descending order, the sixteen most important terms were: “Islam”, “Deutschland”, “Migranten”, “Merkel”, “Maas”, “Politik”, “Freiheitskampf” (struggle for freedom), “Flüchtlinge” (refugees), “SPD” (the German Social Democratic Party), “NetzDG”12, “NPD” (the extreme-right National Party), “Antifa”, “Zensur” (censorship), “PEGIDA”13, “Syrer”, “Asylbewerber” (asylum seeker).

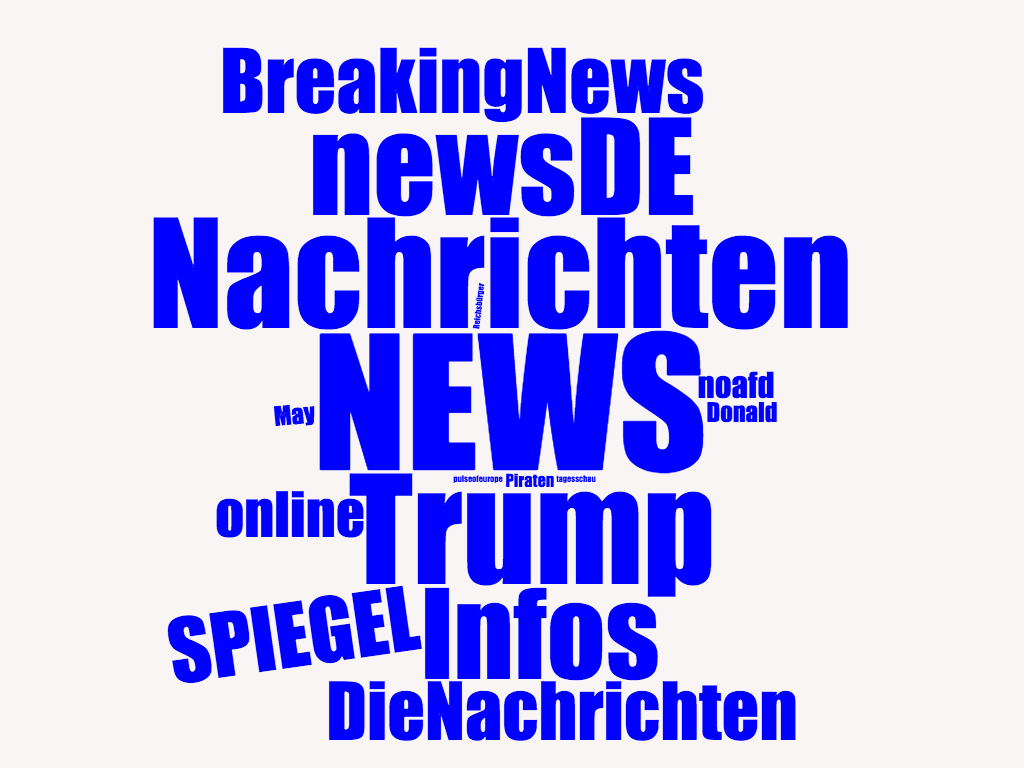

(The word size corresponds to the relative frequency of the terms.)

Certain thematic limitations become obvious at first glance. The narrative being told within this group is about migration, marked by a high frequency of terms like Islam, migrants, refugees, Pegida, Syrians. The terms “Merkel” and “Deutschland” are probably also associated with the “refugee crisis” narrative or with the issue of migration in general. A manual inspection of these tweets showed that the recurring topics are the dangers posed by Islam and crimes committed by individuals with a migrant background, and refugees in particular.

A second, less powerful narrative concerns the self-conception of the group: as victims of political persecution. The keywords “Maas”, “Freiheitskampf”, “SPD”, “NetzDG”, “Antifa”, and “Zensur” clearly refer to the narrative of victimization, in particular with regard to the introduction of the so-called “network enforcement act”, NetzDG for short, promoted by the German Federal Minister of Justice, Heiko Maas (SPD). This new law regulating speech on Facebook and Twitter is obviously regarded as a political attack on freedom of speech on the right.

The extent of thematic overlap amongst the spreaders of fake news is massive, and becomes even more apparent when we compare these terms to the most common terms the correction tweeters used:

“NEWS”, “Nachrichten” (news), “Trump”, “newsDE”, “Infos”, “BreakingNews”, “SPIEGEL”, “DieNachrichten”, “online”, “noafd”, “May” (as in Theresa May), “Donald”, “Piraten”, “tagesschau”, “pulseofeurope”, “Reichsbürger”.

First of all, what is noticeable is that the most important terms used by correctors are not politically loaded. Most of them refer to media brands and news coverage in general: NEWS, Nachrichten, newsDE, Infos, BreakingNews, SPIEGEL, DieNachrichten, tagesschau, Wochenblatt. Nine of the 16 terms are simply general references to news media.

The remaining terms, however, do show a slight tendency to address the right-wing spectrum politically. Donald Trump is such a big topic that both his first and his last name appear in the Top 16. In addition, hashtags like „noafd“ or the pro-European network „pulseofeurope“ seem to be quite popular, while the concept of „Reichsbürger“ (people who feel that Germany isn’t a legitimate nation state and see themselves as citizens of the German Reich) is also discussed.

We can draw three conclusions from this word frequency analysis:

- The fake news group is more limited thematically and more homogeneous politically than the corrections group.

- The fake news group is primarily focused on the negative aspects of migration and the refugee crisis. They also feel politically persecuted.

- The correctors have no unified political agenda, but show a slightly greater interest in right-wing movements.

All of this would seem to confirm the cognitive dissonance hypothesis. Our fake news example stories are reports of problems with refugees, which is the red group’s core topic exactly. Avoidance of cognitive dissonance could explain why a certain group might uncritically share fake news while not sharing the appropriate correction.

Digital Tribalism

When comparing the two groups in both examples, we already found three essential distinguishing features:

- The group of correctors is larger, more scattered/cloudy and includes the mass media, while the fake news group is more closely interwoven, smaller and more concentrated.

- The filter bubble of the corrector group is more impermeable to fake news than vice versa.

- The fake news group is more limited in topics than the users tweeting corrections.

In short, we are dealing with two completely different kinds of group. Whenever differences at the group level are so salient, we are well advised to look for an explanation that goes beyond individual psychology. Cognitive dissonance avoidance may well have a part in motivating the individual fake news disseminator, but seeing as we regard it as a conspicuous group-wide feature, the reasons will more likely be found in sociocultural factors. This again is a subject for further research.

In fact, there has been a growing tendency in research to embed the psychology of morals (and hence, politics) within a sociocultural context. This trend has been especially pronounced since the publication of Jonathan Haidt’s The Righteous Mind: Why Good People are Divided by Politics and Religion in 2012. Drawing on a wealth of research, Haidt showed that, firstly, we make moral and political decisions based on intuition rather than reasoning, and that, secondly, those intuitions are informed by social and cultural influences. Humans are naturally equipped with a moral framework, which is used to construct coherent ethics within specific cultures and subcultures. Culture and psychology “make each other up”, as the anthropologist and psychologist Richard Shweder put it.14

One of our innate moral-psychological characteristics is our predisposition for tribalism. We have a tendency to determine our positions as individuals in relation to specific reference groups. Our moral toolbox is designed to help us function within a defined group. When we feel we belong to a group, we intuitively exhibit altruistic and cooperative behaviors. With groups of strangers, by comparison, we often show the opposite behavior. We are less trusting and empathetic, and even inclined to hostility.

Haidt explains these human characteristics by way of an excursion into evolutionary biology. From a rational perspective, one might expect purely egoistic individuals to have the greatest survival advantage – in that case, altruism would seem to be an impediment.15 However, at a certain point in human evolution, Haidt argues, the selection process shifted from the individual to the group level. Ever since humanity went down the route of closer cooperation, sometime between 75,000 and 50,000 BCE, it has been the tribes, rather than the individuals, in evolutionary competition with one another.16 From this moment on, the most egoistic individual no longer automatically prevailed – henceforth it was cooperation that provided a distinct evolutionary edge.17

Or perhaps we should say, it was tribalism: The basic tribal configuration not only includes altruism and the ability to cooperate, but also the desire for clear boundaries, group egotism, and a strong sense of belonging and identity. These qualities often give rise to behaviors that, as members of an individualistic society, we believe we have given up, seeing as they often result in war, suffering, and hostility.18

However, for some time there have also been attempts to establish tribalism as a positive vision for the future. Back in the early 1990s, Michel Maffesoli introduced the concept of “Urban Tribalism”.19 The urban tribes he was referring to were not based on kinship, but more or less self-chosen; mainly the kind of micro-groups we would now call subcultures. From punk to activist circles, humans feel the need to be part of something greater, to align themselves with a community and its shared identity. In the long run, Maffesoli argued, this trend works against the mass society.

There are more radical visions as well. In his grand critique bearing the programmatic title Beyond Civilization, the writer Daniel Quinn appeals to his readers to abandon the amenities and institutions of civilization entirely and found new tribal communities.20 Activists organizing in so-called neo-tribes mainly reference Quinn on their search for ways out of the mass society, and believe the Internet might be the most important technology in enabling these new tribal cultures.21

In 2008, Seth Godin pointed out how well-suited the Internet was to the establishment of new tribes. Indeed, his book, boldly titled Tribes: We Need You to Lead Us, was intended as a how-to guide.22 His main piece of advice: The would-be leader of a tribe needs to be a “heretic”, someone willing to break with the mainstream, identify the weakest link in an established set of ideas, and attack it. With a little luck, his or her possibly quite daring proposition will attract followers, who may then connect and mobilize via the Internet. These tribes, Godin argues, are a potent source of identity; they unleash energies in their members that enable them to change the world. Many of Godin’s ideas are actually fairly good descriptions of contemporary online group dynamics, including the 2016 Trump campaign.

To sum up: Digital tribes have traded their emphasis on kinship for an even stronger focus on a shared topic. This is the tribe’s touchstone and shibboleth, a marker of its disapproval of the “mainstream”, by whose standards the tribe members are considered heretics. This sense of heresy produces strong internal solidarity and homogenization, and even more importantly, a strict boundary that separates the tribe from the outside world. This in turn triggers the tribalist foundations of our moral sentiments: all that matters now is “them against us”, and the narratives reflect this basic conflict. AfD, Pegida, the Alt-Right etc. see themselves as rebels, defying a mainstream that, in their view, has lost its way, has been corrupted or, at best, blinded by ideology. They feel they are victims of political persecution and oppression. Narratives like the “lying mainstream press” and the “political establishment” serve as boundary markers between two or more antagonistic tribes.23 Our visualization of disconnected clusters within the network structure, as well as the topical limitations revealed by our word frequency analysis, are empirical evidence of precisely this boundary.

Counter Check: Left-Wing Fake News

Our assumption so far is that the red cloud of fake news disseminators is a digital tribe, and that the differences setting it apart from the group of correction disseminators result from its specific tribal characteristics. These characteristics are: close-knit internal networks, in combination with self-segregation from the mainstream (the larger network); an intense focus on specific topics and issues; and, by consequence, a propensity to forward fake news that matches their topical preferences. Our tribe is manifestly right-leaning: the focus on the refugee issue is telling. Negative stories about refugees go largely or entirely unquestioned, and are forwarded uncritically, even when very little factual reporting is available and the story is implausible. Some users even forward stories they know to be untrue.

However, none of this shows the non-existence or impossibility of very diverse digital tribes within the German-speaking Twitterverse. In this sense, our identification of this particular tribe based on its circulation of fake news is inherently biased. The fake news events function as a kind of flashlight that lets us shine a light onto one part of the overall network, but only a rather limited part. Right-wing fake news reports let us detect right-wing tribes. Assuming that our theory is correct so far, we should similarly be able to discover left-wing tribes by flashing the light from the left, that is to say, by examining left-wing fake news reports, if they exist.

To cut a long story short: tracking down left-wing fake news reports was not easy, and certainly more difficult than finding their right-wing counterparts. This is interesting to note, especially since the last U.S. election campaign presented us with a very similar situation.24 One example that caught our attention early on was the so-called Khaled case, even though it is not an exact match for our definition of fake news.25

On January 13, 2015, the Eritrean refugee Khaled Idris was found stabbed to death in Dresden. This was during the peak of the Pegida demonstrations, which had been accompanied by acts of violence on several other occasions before. With the official investigation still ongoing and the authorities careful not to speculate, many Twitter users and primarily leftist media implied that the homicide was connected to the Pegida demonstrations. Some slammed the work of the investigators as scandalously ineffective (“blind on their right eye”), and many prematurely condemned the killing as a racist hate crime. Eventually the police determined that Idris had been killed by a fellow resident in his asylum seekers’ hostel.

We applied the same procedure as in the first example, again coding the fake news reports (or, let us say, incorrect speculations) in red and the corrections in blue. The meta-debate, which played an oversized role in this example, is green.

At first glance, the resulting visualization looks quite different from our first graph. Still, some structural features seem oddly familiar – only in this case, they seem to have been inverted. For instance, the division into two clusters that appear side by side, and the relative proportions of those clusters are instantly recognizable. This time, though, the spatial arrangement and distribution of colors is different.

Once again, we can distinguish two groups, represented by a cloud and a knot respectively. This time, however, the cloud appears on the right and the knot on the left.26 As before, one group is smaller, more closely linked, and more homogeneous (which, as per our theory, suggests tribal characteristics), while the other, represented by a larger, more dispersed cloud, includes the mass media. Again, the smaller group is far more homogeneous in its coloring. But instead of red, it is now predominantly green and blue. Now the red dots are part of the larger cloud, which isn’t homogeneously or even predominantly red here; it is as though the cloud mostly consists of corrections (blue) and the meta-debate (green), and just like the knot, merely has occasional sprinkles of red.

These findings did not match our expectations. We thought it would be possible to detect a leftist tribe, but this rather unambiguous answer to our question took us by surprise. We could not detect a leftist tribe similarly driven by fake news; a tribal structure like before could be identified, but this time it was populated by accounts that had forwarded the correction. The cloud does include fake news disseminators as well, but there is no self-segregation, so the disseminators of the fake news are still interspersed with the correctors and the meta-debaters.

In order to increase the robustness of our analysis, we also investigated a second example:

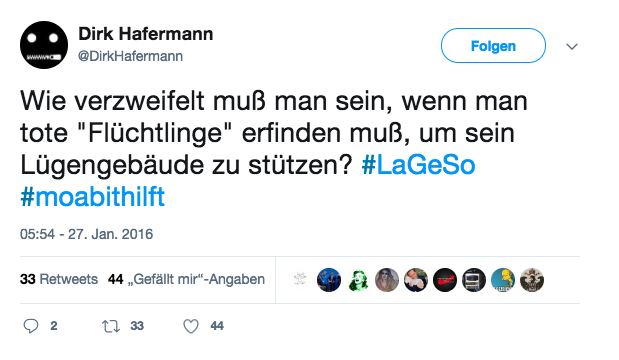

On January 27, 2016, a volunteer of the Berlin refugee initiative „Moabit Hilft“ published a Facebook post telling the story of a refugee who, after standing in line for days in front of the LAGeSo (Landesamt für Gesundheit und Soziales, the agency assigning refugees to shelters in Berlin), collapsed and later died in hospital. The news spread like wildfire, not least because conditions at the LAGeSo in Berlin had been known to be chaotic since mid-2015. Many social media accounts took up the case and denounced it as a scandal. The media also picked up on the events, some of them rather uncritically at first. Over time, however, when no one else confirmed the story and the author of the original Facebook post had not responded to inquiries, reporting became more and more sporadic. The same day, the news was also officially debunked by the police. In the following days, it turned out that the helper had made up the entire story, apparently while under the influence of alcohol.

We proceeded as we did in the Khaled case, with the difference that we used yellow as a fourth color to distinguish impartial reports from more uncritical fake news disseminators.

The example confirms our findings from the Khaled case. Once again, the spreaders of fake news are dispersed far and wide – there is no closely-connected group. Again, a segregated group that is particularly eager to spread the correction is recognizable. This supports our thesis that this is exactly the same group as the one that was so susceptible to right-wing fake news in the previous examples: our right-wing digital tribe.

To substantiate this conjecture, we „manually“ sifted through our pool of tweets and randomly tested individual tweets from the tribal group.

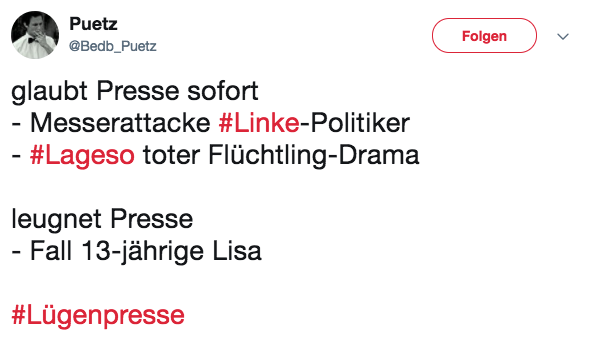

To put it mildly, if we had introduced a color for malice and sarcasm, this cluster would stand out even more from the rest of the network. Even if the tweets include corrections or meta-debate, the gloating joy about the uncovering of this fake news story is unmistakable. Here are some examples from the LAGeSo case:

(Translation:

Tweet 1: What the press believes immediately: / – Stabbing of left party politician / – Dead refugee at #lageso / What the press denies: / – Case of the thirteen-year-old Lisa / #lyingpress

Tweet 2: How desperate does one have to be to invent a dead refugee to prop up this web of lies? #lageso #moabithilft

Tweet 3: Let’s see when people start calling the dead #lageso refugee an art form to raise awareness for the suffering. Then everything will be alright again.

Tweet 4: Do-gooders cried a river of tears. For nothing. The “dead refugee” is a hoax. #lageso #berlin)

These tweets also reveal a deep mistrust in the mass media, which is expressed in the term “Lügenpresse” (“lying press”). By exposing left-wing fake news, the tribe seems to reinforce one of its most deeply-rooted beliefs: that the media are suppressing the truth about the refugees, and that stories of racist violence are invented or exaggerated.

To sum up our findings: Based on leftist fake news about refugees, we were unable to detect a leftist equivalent of the right-wing tribe. On the contrary, it turns out that disseminators of leftist fake news are highly integrated into the mainstream.27 The latter (represented by the cloud) plays an ambivalent role when it comes to leftist fake news, as it includes the fake news tweets, but also the corrections and meta-debate. Everything comes together in this great speckled cloud. By contrast, the right-wing tribe is recognizable as such even in the context of leftist fake news. Again, it appears as a segregated cluster that is noticeably homogeneous in color, though this time on the side of the correction disseminators. (It’s worth mentioning that most of the tweets forwarding the correction were mockeries.) But that does not mean that leftist tribes do not exist at all – they might be visible when investigating topics other than migration and refugees. Nor does it mean that there isn’t a multiplicity of other tribes, perhaps focused on issues that are devoid of political significance or not associated with a political faction. The latter in fact is quite probable.28

Tribal Epistemology

Some findings of our study of leftist fake news are unexpected, but that only makes them even more compelling as confirmation of our hypothesis concerning the right-wing tribe. The latter tribe is real, and it is hardly surprising that it is involved in the dissemination of all those news reports concerning refugees, be it in the form of fake news or of subsequent corrections.29

Yet the theoretical framework describing tribalism that we have outlined so far is still too broad to grasp the specificity of the phenomenon considered here. People like to band together, people like to draw lines to exclude others – this is hardly a novel insight. In principle, we might suspect that tribalism is the foundation of every informal or formally organized community. Hackers, Pirates, “Weird Twitter”, the net community, Telekommunisten, “Siff-Twitter”, 4chan, preppers, furries, cosplayers, gamers, bronies, etc: all of them digital tribes? Maybe. But when we make that claim without carefully examining the communities in question, we risk broadening the concept to such an extent that it no longer adequately captures our observations.30

What sets our phenomenon apart is a specific relation to reality that is also visibly reflected in the network structure. Worldview and group affiliation seem to coincide. This is the defining characteristic of tribal epistemology.

In recent years, a growing body of research has further inspected the connection between group identity and the psychology of perception. Dan M. Kahan and his team at the Cultural Cognition Project have made important contributions in this field.31 In a range of experimental studies, they demonstrated how strongly political affiliation influences people’s assessment of arguments and facts.

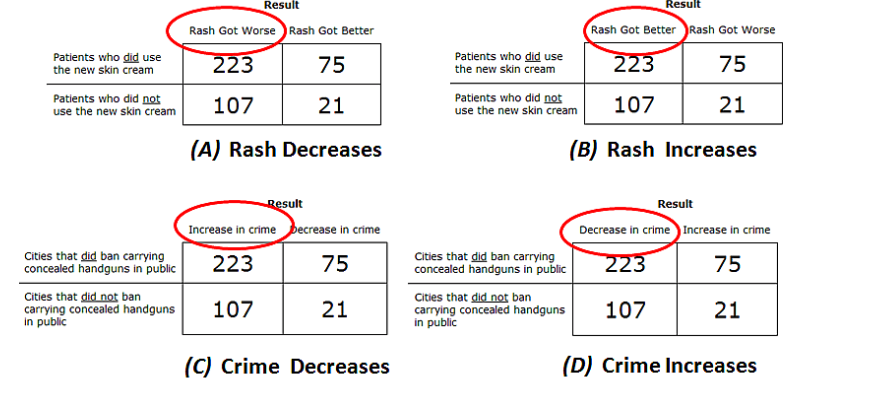

In one study, Kahan presented four versions of a problem-solving task to a representative group of test subjects.32 Two of the four versions were about the results of a fictitious study of a medical skin cream; the subjects were asked to tell whether the cream was effective or not, based on the data provided. In the first version, the data indicated that the new cream helped reduce rash in patients; in the second version, it showed that the medication did more harm than good. In the two other versions of the task, the same numbers were used, but this time to refer not to the effectiveness of a skin cream, but to the effects of a political decision to ban the carrying of concealed handguns in public. In one version, the data indicated that banning handguns from public settings had tended to decrease crime rates; in the other, it suggested the opposite.
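What makes a task of this kind demanding can be shown with a small worked example. It is only a sketch: the counts below are hypothetical and chosen for illustration, not taken from the study, and it assumes the data are presented as a two-by-two table of outcomes. The point is that the correct reading compares rates between the two groups rather than raw counts.

```python
def improvement_rate(improved, worsened):
    """Fraction of a group whose condition got better."""
    return improved / (improved + worsened)


# Hypothetical counts, for illustration only.
treated = {"improved": 200, "worsened": 80}     # patients who used the cream
untreated = {"improved": 100, "worsened": 20}   # patients who did not

rate_treated = improvement_rate(**treated)
rate_untreated = improvement_rate(**untreated)

# The tempting shortcut: 200 improved with the cream versus only 100 without.
naive_verdict = "helps" if treated["improved"] > untreated["improved"] else "does not help"

# The correct reading: compare the share of improvements in each group.
verdict = "helps" if rate_treated > rate_untreated else "does not help"

print(f"treated: {rate_treated:.3f}, untreated: {rate_untreated:.3f}")
print(f"naive verdict: {naive_verdict}, rate-based verdict: {verdict}")
```

With these made-up numbers the raw counts suggest that the cream works, while the rates show that untreated patients improved more often. Relabeling the rows from cream to handgun ban leaves the arithmetic unchanged.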

Before being given the problem, all subjects were given a range of tests to measure their quantitative thinking skills (numeracy) as well as their political attitudes. It turned out that subjects’ numeracy was a good predictor of their ability to solve the task correctly when they were asked to interpret the data gauging the efficacy of a skin cream. When the same numbers were said to relate to gun control, political attitude trumped numeracy. So far, so unsurprising.

More strikingly, with a polarizing political issue at stake, numeracy actually had a negative effect. On the gun-control question, superior quantitative thinking skills in partisans were correlated with misinterpretation of the data. In Kahan’s interpretation, when it comes to ideologically contentious issues, individuals do not use their cognitive competences to reconcile their own attitudes with the world of fact, but instead maintain their position even when confronted with evidence that contradicts it.

The conclusion Kahan and his colleagues draw from their study is disturbing: culture trumps facts. People are intuitively more interested in staying true to their identity-based roles than in forming an accurate picture of the world, and so they employ their cognitive skills to that end. Kahan’s term for the phenomenon is “identity-protective cognition”: Individuals utilize their intellectual abilities to explain away anything that threatens their identities.33 It is a behavior that becomes conspicuous only when they engage with polarizing issues or positions that solicit strong—negative or positive—identification. Kahan was able to reproduce his findings with problems that touched on issues such as climate change and nuclear waste disposal.

Based on his research, Kahan has outlined his own theory of fake news. He is skeptical of the prevailing narrative that motivated parties such as campaign teams, the Russian government, or enthusiastic supporters of candidates plant fake news in order to manipulate the public. Rather, he argues that there is a “motivated public” that has high demand for news reports that corroborate its viewpoints, and that this demand is greater than the supply.34 Fake news, as it were, is simply filling a market gap. Information is a resource in the production not so much of knowledge as of identity, and its utility for this purpose is independent of whether it is correct or incorrect.

The right-wing tribe in our study is one such “motivated public.” Its members’ assessment of a factual claim hinges on its usefulness as a signal of allegiance to the tribe and rejection of the mainstream, much more so than on truthfulness or even plausibility. Our findings therefore do not support the notion that new information might prompt the tribe’s members to change their minds or mitigate their radicalism. In this climate, corrections to the fake news story may have had an opportunity to be noticed, but they were not accepted and certainly not shared any further.

The Rise of Tribal Institutions in the USA

In his text on tribal epistemology, David Roberts makes another interesting observation, which can also be applied to Germany. While the mass media, science and others regard themselves as non-partisan, this claim is denied by the tribal right. On the contrary, the right assumes that these institutions are following a secret or not-so-secret liberal/left-wing agenda and colluding with the political establishment. In consequence, not only the opposing party, but the entire political, scientific and media system is rejected as corrupt.35

As I’ve said before, these conspiracy theories are false at face value, but relate to a certain truth.36 There is in fact a certain liberal basic consensus in politics, science and media that many citizens are sceptical of.37

However, instead of reforming the institutions or opposing their bias, new communication structures such as the Internet (but not exclusively) have made it possible to establish an alternative media landscape and new institutions at low cost. This is exactly what has been happening in the USA since the 1990s. A parallel right-wing media landscape with considerable reach and high mobilization potential has formed. The crucial point is that, since this alternative media landscape does not recognise the traditional mainstream media’s claim to non-partisanship, it doesn’t even attempt to reach this ideal in the first place. We can also see similar developments in Germany, but they are far less advanced.

In the USA, the secession of alternative tribal institutions began with local talk radios radicalizing more and more towards the right. Rush Limbaugh, Sean Hannity and Glenn Beck were the early provocateurs of this flourishing scene. Since the mid-1990s, the new TV station Fox News has hired some of these „angry white men“ and set out to carry right-wing radical rhetoric into the mainstream, becoming the media anchor point for the Tea Party movement in the process.38 But during the pre-election campaign of 2015/16, Fox News itself was overtaken by a new player on the right: the Breitbart News website, which had zeroed in on supporting Donald Trump early on.

Not surprisingly, this division in the media landscape can be traced in social media data. In a highly acclaimed Harvard University study, researchers around Yochai Benkler evaluated 1.25 million messages that had been shared by a total of 25,000 sources on Twitter and Facebook during the U.S. election campaign (from April 1, 2015 to the ballot).39

Explanation: The size of a node corresponds to how often the content of a Twitter account was shared. The position of the nodes in relation to one another shows how often they were shared by the same people at the same time. The colors indicate whether they are mostly shared by people who routinely retweet either Clinton (blue) or Trump (red), or both/neither (green).
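The three quantities in this explanation can be sketched in a few lines of code. This is a rough illustration only, not the method of the Harvard study: the function name, the input format and the toy data are hypothetical, each user's leaning is assumed to be known in advance, and the time dimension ("at the same time") is ignored.

```python
from collections import Counter, defaultdict
from itertools import combinations


def describe_sources(shares, leaning):
    """shares: list of (user, source) pairs; leaning: user -> 'clinton', 'trump' or 'mixed'."""
    # Node size: how often each source was shared.
    size = Counter(source for _, source in shares)

    sources_by_user = defaultdict(set)
    for user, source in shares:
        sources_by_user[user].add(source)

    # Proximity: number of users who shared both sources of a pair.
    co_shared = Counter()
    for sources in sources_by_user.values():
        for pair in combinations(sorted(sources), 2):
            co_shared[pair] += 1

    # Color: the most common leaning among the share events of a source.
    votes = defaultdict(Counter)
    for user, source in shares:
        votes[source][leaning.get(user, "mixed")] += 1
    palette = {"clinton": "blue", "trump": "red", "mixed": "green"}
    color = {src: palette[count.most_common(1)[0][0]] for src, count in votes.items()}
    return size, co_shared, color


# Hypothetical toy data.
shares = [
    ("u1", "outletA"), ("u1", "outletB"),
    ("u2", "outletA"), ("u2", "outletB"),
    ("u3", "outletA"), ("u3", "outletC"),
    ("u4", "outletC"),
]
leaning = {"u1": "clinton", "u2": "clinton", "u3": "trump", "u4": "trump"}

size, co_shared, color = describe_sources(shares, leaning)
print(size, co_shared, color)
```

A layout algorithm would then place strongly co-shared sources close together, which is what makes clusters visible in a graph like this one.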

Even though the metrics work quite differently in both cases, their analysis is strikingly similar to ours. Networking density and polarization are as clearly reflected in the Harvard study as in ours, albeit somewhat differently. Almost all traditional media are on the left/blue side, whereas there is hardly any medium older than 20 years in red. The most important players are not even 10 years old.

The authors of the study conclude, much as we do, that this asymmetry cannot have a technical cause. If it were technical, new, polarizing media would have evolved on both sides of the spectrum, and left-wing media would play just as important a role on the left as Breitbart News does on the right.

However, there are also clear differences to our results: the right side, despite its relative novelty and radicalism, is more or less on a par with the traditional media on the left (the left side of the graph, not necessarily in terms of politics). So it is less a polarization between the extreme left and the extreme right, than between the moderate left and the extreme right.

Since the left-hand side of the graph basically reflects what the public image has represented up to now – the traditional mass media at the center with a swarm of smaller media surrounding it, such as blogs and successful Twitter accounts, it may be wrong to speak of division and polarization altogether. This should more correctly be called a „split-off“, because something new is being created here in contrast to the old. The right-wing media and their public spheres have emerged beyond the established public sphere to oppose it. They have split off not because of the Internet or because of filter bubbles, but because they wanted to.

The authors of the study conclude, as we do, that this separation must have cultural and/or ideological reasons. They analyse the topics of the right and reveal a similar focus on migration, but also on the supposed corruption of Hillary Clinton and her support in the media. Here, too, fake news and politically misleading news are a huge part of the day-to-day news cycle, and are among the most widespread messages of these outlets.

We have to be cautious of comparing these two analyses too closely, because the political and media landscapes in Germany and the US are too different. Nevertheless, we strongly suspect that we are dealing with structurally similar phenomena.

An overview of the similarities between the US right-wing spin-off media group and our fake news tribe:

- Separation from the established public.

- Traditional mass media remain on the „other“ side.

- Focus on migration and at the same time, their own victimization.

- Increased affinity to fake news.

- Relative novelty of the news channels.

We can assume that our fake news Twitter accounts are part of a similar split-off group as the one observed in the Harvard study. One could speculate that the United States has merely gone through the same processes of secession earlier than we have, and that both studies show virtually the same phenomenon at different stages. The theory would be: A parallel society can emerge out of one tribe if it creates a media ecosystem and institutions of its own, and last but not least, a truth of its own.

What we have seen in the United States could be called the coup of a super-tribe that has grown into a parallel society. Our small fake news tribe is far from there. However, it cannot be ruled out that this tribe will continue to grow and prosper, and will eventually be just as disruptive as its American counterparts.

In order for that to happen, however, it would have to develop structures that go well beyond the individual network structure of associated Twitter accounts. This can already be observed in some cases. Blogs like “Politisch Inkorrekt”, “Achgut” or “Tichys Einblick” (Tichy’s Inside View), and of course, the numerous successful right-wing Facebook pages and groups surrounding the AfD party and Pegida in particular, can be seen as „tribal institutions“. These are still far from having the same range and are often not as radical as their American counterparts, but this may merely be a matter of time.

Conclusion

The dream of the freedom of networks was a naive one. Even if all our social constraints are removed, our presupposed patterns of socialisation become all the more evident. Humans have a hard-wired tendency to gather into groups they identify themselves with, and to separate themselves from others as a group. Yes, we enjoy more and greater freedoms in our socialisation than ever before, but this does not lead to more individualism – quite the contrary, in many cases it paradoxically leads to an even stronger affinity for groups. The tribal instinct can develop without constraint and becomes all the more dominant. And the longer I think about it, the more I wonder whether all the strangely limiting categories and artificial group structures of modern society are a peacemaking mechanism for taming the tribal side in us that is all too human. Or whether they were. The Internet is far from finished with deconstructing modern society.40

We learned a lot about the new right. It is not just one side of the political spectrum, but a rift, the dividing off of a new political space beyond the traditional continuum. The Internet is not to blame for this split-off, but it has made these kinds of developments possible and therefore more likely. Free from the constraints and the normalization dynamics of the traditional continuum, „politically incorrect“ parallel realities are formed that no longer feel the need to align themselves with social conventions or even factuality.

In The Righteous Mind, Jonathan Haidt writes that people need no more than one single argument to justify a belief or disbelief. When I am compelled (but do not want) to believe something, a single counterargument is enough to make me disregard a thousand arguments. 99 out of 100 climate scientists say that climate change is real? The one who says otherwise is enough for me to reject their view. Or I may want to hold on to a belief that runs counter to all available evidence: Even if all my objections to the official version of the events of 9/11 are refuted, I will always find an argument that lets me cling to my conviction that the attacks were an inside job.

This single argument is the reason why the right-wing tribe is immune to fake news corrections even if exposed to them. Its members always have one more argument to explain why they stand by their narrative, and their tribe. That is exactly what the phrase “lying mainstream press” was invented for. It does not actually imply a sweeping rejection of everything the mass media report, but justifies crediting only those reports that fit one’s own worldview and discounting the rest as inconsequential.41

If we follow the tribalist view of the media landscape (your media vs. our media), the traditional mass media, with their commitment to accuracy, balance, and neutrality, will always benefit the right wing about half the time, while right-wing media pay exclusively into their own accounts. Having faith in non-partisanship and acting accordingly turns out to be the traditional mass media’s decisive strategic disadvantage.

The right will undoubtedly respond that the tribalist tendencies and structures which are uncovered in this text equally apply to the left. But there is no evidence for this assertion. In any case, equating the groups observed is not an option, seeing as the data is unambiguous. This does not mean, however, that there are no tribalist affects on the left, or that they could not still form in response to the right. On the left, there are belief systems with a similarly strong identity-forming effect, and if Dan Kahan’s theory of „identity-protective cognition“ is correct, similar effects should be observable on the left with the appropriate topics.

This essay is not a complete research paper, and can only be a starting point for additional research. Many questions remain unanswered, and some of the assumptions we have made have not been sufficiently proven yet. It’s clear, however, that we have discovered something that, on the one hand, has a high degree of significance for the current political situation in Germany. On the other hand, and this is my assessment, it also says something fundamental about politics and society in the age of digital networking.

All politics becomes identity politics, as far as polarizing issues are concerned. Identity politics can be described as highly motivating, uncompromising and rarely or never fact-based.

A few research questions remain unanswered:

- Can we really prove that this permanently resurfacing tribe on the right is structurally identical (and at least largely account-identical) with all the phenomena we observe? We’ve got some good leads, but hard evidence is still missing. We would have to measure the entire group and then show that the fake news accounts are a subset of that group.

- In our research, we compared two special groups: right-wing fake news distributors and those who spread the corrections to fake news. The differences are significant, but the comparison is problematic: the group of correctors was also selected, and is therefore not representative. Also, the groups differ in size. Therefore it would be more meaningful to compare the fake news group with a randomized control group of the same size.

- Facebook. Facebook is a far more influential player in the German political debate than Twitter. Can we find the same or similar patterns on Facebook? I would assume so, but it hasn’t been looked into yet.

- To justify the term „digital tribe“, further tribes should be identified and researched. This requires a digital ethnology. Are there other tribes in the German-speaking world with other agendas, ideologies or narratives displaying a similar level of separation and perhaps radicalization? Possible candidates are the digital Men’s Rights movement, “Sifftwitter” and various groups of conspiracy theorists. Also: How do digital tribes differ from others? Which types of tribes can reasonably be distinguished, etc.? Or is tribalism more a spectrum on which to locate different groups; are there different „degrees“ of tribalism, and which metrics would apply in that case?
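The first two questions in this list lend themselves to a small sketch: checking how far the fake news accounts fall inside a separately measured tribe, and drawing a randomized control group of the same size. The function names, account names and the fixed random seed below are hypothetical and serve only as an illustration; nothing here describes an analysis that has already been carried out.

```python
import random


def overlap_report(fake_news_accounts, tribe_accounts):
    """How far the fake news accounts fall inside a separately measured tribe."""
    fake, tribe = set(fake_news_accounts), set(tribe_accounts)
    shared = fake & tribe
    return {
        "is_subset": fake <= tribe,
        "share_inside_tribe": len(shared) / len(fake) if fake else 0.0,
    }


def control_group(all_accounts, excluded, size, seed=0):
    """Random sample of accounts outside the studied group.

    Raises ValueError if fewer than `size` accounts remain after exclusion.
    """
    pool = sorted(set(all_accounts) - set(excluded))
    return random.Random(seed).sample(pool, size)


# Hypothetical toy data.
fake_news_group = ["a", "b", "c"]
measured_tribe = ["a", "b", "x"]
everyone = list("abcdefgh") + ["x"]

report = overlap_report(fake_news_group, measured_tribe)
controls = control_group(everyone, fake_news_group, size=len(fake_news_group))
print(report, controls)
```

Comparing the fake news group with such a control group, rather than with the self-selected group of correctors, would address the representativeness problem raised above.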

I know there are already a few advances in digital ethnology, but I feel that an interdisciplinary approach is needed when it comes to digital tribes. Data analysts, network researchers, psychologists, sociologists, ethnologists, programmers, and maybe even linguists, will have to work together to get to the bottom of this phenomenon.

Another, less scientific question is where all this will end. Is digital tribalism a sustainable trend and will it, as it has happened in the United States, continue to put pressure on the institutions of modern society, and maybe even destroy them in the long term?

So what can we do about it?

Can you immunize yourself against tribalist reflexes? Is it possible to warn against them, even to renounce them socially? Or do we have to live with these reflexes and find new ways of channeling them peacefully?

Haidt’s answer is twofold. He compares our moral decision-making to an elephant and its rider: The elephant intuitively does whatever it feels like at any moment, while the rider merely supplies retrospective rationalizations and justifications for where the elephant has taken him. A successful strategy of persuasion, then, does not consist in confronting the rider with prudent arguments, but in making sure that one retains the elephant’s goodwill (for instance by establishing relationships of personal trust that will then allow a non-confrontational mode of argumentation, which can help individuals break free of the identity trap).

The other half of Haidt’s answer is actually about the power of institutions. The best institutions, he writes, stage a competition between different people and their worldviews, so as to counterbalance the limitations of any one individual’s judgment. Science, for instance, is organized in such a way as to encourage scientists to question one another’s insights. The fact that a member of the scientific community will always encounter someone who will review his or her perspective on the world acts as containment against the risk that he or she will become overly attached to any personal misperceptions.

Our discovery of digital tribalism, however, points to the disintegration of precisely these checks and balances, and the increased strength of unchecked tribal thinking. I am inclined to think that the erosion of this strategy is precisely the problem we are struggling with today.

Paul Graham also made a suggestion on how to deal with this phenomenon on a personal level, but without reference to research. In a short essay entitled „Keep Your Identity Small“ from 2009, he advises exactly that: not to identify too much with topics, debates, or factions within these debates.42 The fewer labels you attach to yourself, the less you allow identity politics to tarnish your judgement.

However, this advice is difficult to generalise because there are also legitimate kinds of identity politics. Identity politics is always justified and even necessary when it has to be enforced against the group think of the majority. Often, the majority does not consider the demands of minorities on its own. Examples are homosexual and transsexual rights, or the problems caused by institutional racism. In these cases, those affected simply do not have the choice of not identifying with the cause. They literally are the cause.

A societal solution to the problem would presumably need to rebuild faith in supra-tribal and nonpartisan institutions, and it is doubtful whether the traditional institutions are still capable of inspiring such faith. People’s trust in them was always fragile at heart and would probably have fallen apart long ago given the right circumstances. In Germany, this opportunity arose with the arrival of the Internet, which dramatically lowered the costs of establishing alternative media structures. New institutions aiming to reunify society within a shared discursive framework will need to take into account the unprecedented agility of communication made possible by digital technology, and even to devise ways of harnessing it.

Of course, another response to the tribalism on the right would be to become tribalist in turn. A divisive “us against them” attitude has always been part of the important anti-fascist struggle, and an ultimately necessary component of the Antifa political folklore. However, I suspect that an exclusive focus on the right-wing digital tribe might inadvertently encourage the other side—which is to say, large segments of mainstream society—to tribalize itself, drawing cohesion and identification from the repudiation of the “enemy.” In doing so, society would be doing the right-wing tribe a huge favor, turning its conspiracy theory (“They’re all in cahoots with each other”) into a self-fulfilling prophecy.

The problem of digital tribalism is unlikely to go away anytime soon. It will continue to have a transformative effect on our debates and, by consequence, our political scenes.

Footnotes:

- Or at least „networked individualism“, as Felix Stalder – somewhat less naively – has suggested, according to which „… people in western societies (…) define their identity less and less via the family, the workplace or other stable communities, but increasingly via their personal social networks, i. e. through the collective formations in which they are active as individuals and in which they are perceived as singular persons.“ Stalder, Felix: Kultur der Digitalität, p. 144. ↩

- Roberts, David: Donald Trump and the rise of tribal epistemology, https://www.vox.com/policy-and-politics/2017/3/22/14762030/donald-trump-tribal-epistemology (2017). ↩

- We have chosen Twitter for our analysis because it is easy to process automatically due to its comparatively open API. We are aware that Facebook is more relevant, especially in Germany and especially in right-wing circles. However, we assume that the same phenomena are present there, so that these findings from Twitter could also be applied to Facebook. ↩

- Website of the German Foreign Office, travel and safety advisory for Sweden: Auswärtiges Amt: Schweden: Reise- und Sicherheitshinweise, http://www.auswaertiges-amt.de/sid_39E6971E3FA86BB25CA25DE698189AFB/DE/Laenderinformationen/00-SiHi/Nodes/SchwedenSicherheit_node.html (2017). ↩

- For a closer examination of the term “fake news”, consider (in German): Seemann, Michael: Das Regime der demokratischen Wahrheit II – Die Deregulierung des Wahrheitsmarktes. http://www.ctrl-verlust.net/das-regime-der-demokratischen-wahrheit-teil-ii-die-deregulierung-des-wahrheitsmarktes/ (2017). ↩

- The German project ‘Hoaxmap’ has been tracking a lot of rumors concerning refugees for a while: http://hoaxmap.org/. ↩

- See Pariser, Eli: The Filter Bubble – How the New Personalized Web Is Changing What We Read and How We Think (2011). ↩

- However, the data basis of this example is problematic in several respects.

– The original article in BILD newspaper has been deleted, as have the @BILD_de tweet and all of its retweets. It’s no longer possible to reconstruct the articles and references that have been deleted in the meantime.

– Some of the articles have been changed over time. For example, Spiegel Online’s article was initially much more sensational, and we would probably have categorized that version as clearly fake news. At some point, when more and more inconsistencies came to light, it must have been amended and defused. We don’t know which other articles this has happened to. ↩

- Eli Pariser, who coined the term “filter bubble”, recently said in an interview regarding the situation in the USA: „The filter bubble explains a lot about how liberals didn’t see Trump coming, but not very much about how he won the election.“ https://backchannel.com/eli-pariser-predicted-the-future-now-he-cant-escape-it-f230e8299906 (2017). The problem, according to Pariser, is that the left loses sight of the right, not the other way round. ↩

- See Festinger, Leon: A Theory of Cognitive Dissonance (1957). ↩

- A special vocabulary (or slang) probably also distinguishes a digital tribe. Relative word frequencies have also been used to identify homogeneous groups in social networks. Cf. Bryden, John / Funk, Sebastian / Jansen, Vincent A. A.: Word usage mirrors community structure in the online social network Twitter, https://epjdatascience.springeropen.com/articles/10.1140/epjds15 (2012). ↩

- Short for Netzwerkdurchsetzungsgesetz, a controversial law designed to prevent online hate speech and fake news that went into effect on October 1, 2017. ↩

- Patriotic Europeans Against the Islamisation of the West – an extreme right-wing movement founded in Dresden, Germany in 2014. ↩

- Richard Shweder, quoted in Jonathan Haidt, The Righteous Mind: Why Good People Are Divided by Politics and Religion (New York: Pantheon Books, 2012), 115. ↩

- The thesis of Homo oeconomicus in economics, for instance, has always rested on that assumption. ↩

- In this context, John Miller and Simon DeDeo’s research is also compelling. They developed an evolutionary game theory computer simulation in which agents have memory so that they can integrate other agents’ behavior in the past into their own decision-making process. Knowledge is inherited over generations. It turned out that the agents began to see recurring patterns in the behavior of other agents, and shifted their confidence to the homogeneity of these patterns. These evolved „tribes“ with homogeneous behavioural patterns quickly cooperated to become the most successful populations. However, if small behavioural changes were established over many generations through mutations, a kind of genocide occurred. Entire populations were wiped out, and a period of instability and mistrust followed. Tribalism seems to be an evolutionary strategy. Cf. DeDeo, Simon: Is Tribalism a Natural Malfunction? http://nautil.us/issue/52/the-hive/is-tribalism-a-natural-malfunction (2017). ↩

- The thesis of group selection (or „multi-level selection“) was already brought into play by Darwin himself, but it is still controversial among biologists. Jonathan Haidt makes good arguments as to why it makes a lot of sense, especially from a psychological point of view. Cf. Haidt, Jonathan: The Righteous Mind – Why Good People are Divided by Politics and Religion, 210 ff. ↩

- Cf. Greene, Joshua: Moral Tribes – Emotion, Reason, and the Gap Between Us and Them (2013), p. 63. ↩

- Maffesoli, Michel: The Time of the Tribes – The Decline of Individualism in Mass Society (1993). ↩

- Quinn, Daniel: Beyond Civilization – Humanity’s Next Great Adventure (1999). ↩

- See NEOTRIBES http://www.neotribes.co/en. ↩

- Godin, Seth: Tribes – We Need You to Lead Us (2008). ↩

- For example, „Lügenpresse“ appears 226 times in our analysis of the fake news spreaders’ tweets, vs. 149 for the correctors. „Altparteien“ even appears 235 times vs. 24. ↩

- “We’ve tried to do similar things to liberals. It just has never worked, it never takes off. You’ll get debunked within the first two comments and then the whole thing just kind of fizzles out.” That’s what Jestin Coler told NPR. He is a professional fake-news entrepreneur who made a lot of money during the US election campaign by spreading untruths. His target audience is Trump supporters; liberals are not as easy to ensnare, he claims. Cf. Sydell, Laura: We Tracked Down A Fake-News Creator In The Suburbs. Here’s What We Learned. http://www.npr.org/sections/alltechconsidered/2016/11/23/503146770/npr-finds-the-head-of-a-covert-fake-news-operation-in-the-suburbs (2016). ↩

- The difference from fake news lies mainly in the fact that these were not claims made in knowing disregard of the facts, but rather assumptions that were mostly recognizable as such. ↩

- The orientation of the graph is based on where the distribution of fake news is centered. ↩

- It remains unclear, however, whether it may be possible to provide a digital tribe with leftist fake news on other topics. For example, there is widespread use of false quotations or photo montages of Donald Trump. However, such examples have attracted particular attention in the English-speaking world, and it would be hard to achieve a clean research design for the German-language Twittersphere. An interesting example to investigate from the German election campaign would be the fake news (which was probably meant as a satirical hoax) that Alexander Gauland, head of the right-wing AfD, expressed his admiration for Hitler. Cf. Schmehl, Karsten: DIE PARTEI legt AfD-Spitzenkandidat Gauland dieses Hitler-Zitat in den Mund, aber es ist frei erfunden, https://www.buzzfeed.com/karstenschmehl/satire-und-luegen-sind-nicht-das-gleiche (2017). ↩

- There are quite a lot of texts on the tribalist political situation in the USA, in which authors also assume tribalist tendencies in certain left-wing circles. See Sullivan, Andrew: America Wasn’t Built for Humans. http://nymag.com/daily/intelligencer/2017/09/can-democracy-survive-tribalism.html (2017) and Alexander, Scott: I Can Tolerate Anything Except The Outgroup http://slatestarcodex.com/2014/09/30/i-can-tolerate-anything-except-the-outgroup/ (2014). ↩

- This is further evidenced by the fact that similar phenomena were already detectable in other events. See, for example, the tweet evaluations of the Munich rampage last year: von Nordheim, Gerret: Popper’s nightmare, http://de.ejo-online.eu/digitales/poppers-alptraum (2016). ↩

- A candidate for a tribe that has already been investigated is „Sifftwitter“. Luca Hammer has looked at the network structures of this group, and his findings seem to be at least compatible with our investigations. See Walter, René: Sifftwitter – Dancing around the data fire with the trolls. http://www.nerdcore.de/2017/05/09/sifftwitter-mit-den-trollen-ums-datenfeuer-tanzen/ (2017). ↩

- Cultural Cognition Project (Yale Law School), http://www.culturalcognition.net/. ↩

- Dan M. Kahan, “Motivated Numeracy and Enlightened Self-Government,” Yale Law School, Public Law Working Paper, no. 307, September 8, 2013, https://papers.ssrn.com/sol3/papers.cfm?abstract_id=2319992, accessed November 7, 2017. ↩

- Dan M. Kahan, “Misconceptions, Misinformation, and the Logic of Identity-Protective Cognition,” Cultural Cognition Project Working Paper Series, no. 164, May 24, 2017, https://papers.ssrn.com/sol3/papers.cfm?abstract_id=2973067, accessed November 7, 2017. ↩

- Which in turn proves the studies which classify fake news as a commercial phenomenon are right. See e. g. Allcott/Gentzkow: Social Media and Fake News in the 2016 Election, https://www.aeaweb.org/articles?id=10.1257/jep.31.2.211 (2017). ↩

- Roberts, David: Donald Trump and the Rise of Tribal Epistemology, https://www.vox.com/policy-and-politics/2017/3/22/14762030/donald-trump-tribal-epistemology (2017). ↩

- Seemann, Michael: The Global Class – Another World is Possible, but this time, it’s a threat, http://mspr0.de/?p=4712 (2016). ↩

- Recently Richard Gutjahr called for reflection on this bias. Our data bear out that media representatives do in fact have a filter bubble that screens out right-wing thinking. http://www.gutjahr.biz/2017/05/filterblase/ (2017). ↩

- In fact, Seth Godin refers to the example of Fox News in his 2008 book „Tribes“. Fox News has managed to create a tribe for itself. Cf. Godin, Seth: Tribes – We Need You to Lead Us, 2008, p. 48. ↩

- Berkman Center: Partisanship, Propaganda, and Disinformation: Online Media and the 2016 U.S. Presidential Election, https://cyber.harvard.edu/publications/2017/08/mediacloud (2017). ↩

- In this context, we should also look at the appalling events accompanying the introduction of the Internet to the general public in developing countries. On the connection between anti-Muslim resentment in Burma, fake news, and the incredibly rapid popularization of the Internet, see for example Frenkel, Sheera: This Is What Happens When Millions Of People Suddenly Get The Internet, https://www.buzzfeed.com/sheerafrenkel/fake-news-spreads-trump-around-the-world?utm_term=.loeoq9kBX#.qiVgaEX7r (2017). On how rumors on the Internet could have led to a genocide in South Sudan, see Patinkin, Jason: How To Use Facebook And Fake News To Get People To Murder Each Other, https://www.buzzfeed.com/jasonpatinkin/how-to-get-people-to-murder-each-other-through-fake-news-and?utm_term=.ktQP8vaJN#.jh8Dd6Xyj (2017). ↩

- Cf. Lobo, Sascha: Die Filterblase sind wir selbst, http://www.spiegel.de/netzwelt/web/facebook-und-die-filterblase-kolumne-von-sascha-lobo-a-1145866.html (2017). ↩

- Graham, Paul: Keep Your Identity Small, http://www.paulgraham.com/identity.html (2009). ↩
