There are two endpoints in any network connection, and you have to focus on both the server adapter and the switch to get the best and most balanced performance out of the network and the proper return on what amounts to a substantial investment in a cluster.

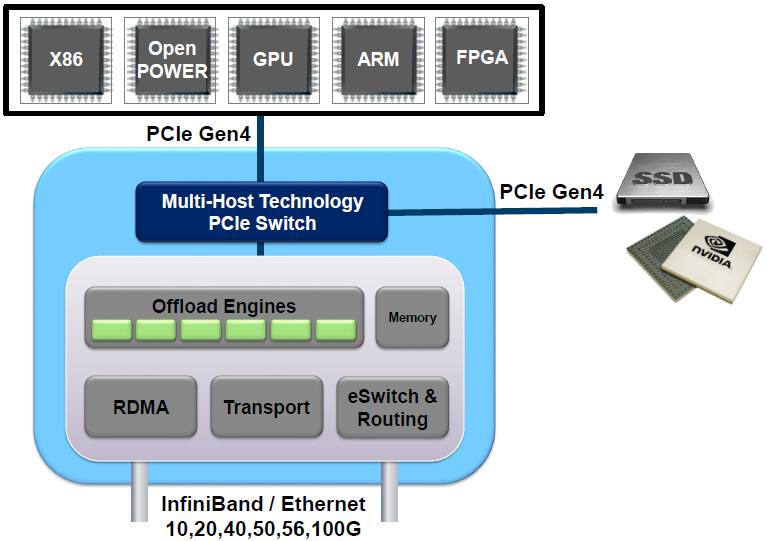

With the upcoming ConnectX-5 server adapters, Mellanox Technologies is continuing its drive to offload more and more of the network processing in a server node to its adapter cards. It is also rolling out significant new functionality such as background checkpointing and switchless networking, and of course there are the usual improvements in message rates and latency through the card that end users expect from a new generation of server adapters.

The ConnectX-5 adapter ASIC is designed to support 100 Gb/sec bandwidth running either the Ethernet or InfiniBand protocol, and has been co-designed to work well with the Spectrum Ethernet and SwitchIB-2 InfiniBand switches that Mellanox currently sells. The ConnectX-5 chip at the heart of the new adapters has an end-to-end latency of 600 nanoseconds, which measures the time it takes data to go from the main memory of one node in the cluster, through its adapter and out over the network wire, and then into the adapter and main memory of the other server node.

The prior generation of ConnectX-4 adapters, which also supported 100 Gb/sec ports, had an end-to-end latency of 700 nanoseconds, so this is a 14 percent improvement in latency. Such improvements are harder and harder to come by, and as Gilad Shainer, vice president of marketing at Mellanox, has told The Next Platform time and again, making a faster and faster network interface card has diminishing returns. In the case of the ConnectX-5, each NIC has a latency of 100 nanoseconds, so the adapters at the two ends of a connection account for only 200 nanoseconds of the 600 nanoseconds; the other parts of the network stack account for more of the overall latency, and this is where you want to focus. Offloading functions from the CPU can cut that latency on specific workloads, which is why Mellanox is a big believer in offload.
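The latency arithmetic above can be checked with a few lines of Python. This is a sketch using the vendor figures quoted in this article; treating the 100 nanosecond NIC figure as applying to each of the two adapters on a path is our reading of those numbers, not a Mellanox statement.

```python
# Latency budget for one end-to-end transfer, using the figures quoted above.
# Assumption (ours): the 100 ns NIC latency applies to each of the two
# adapters on the path, one at the sender and one at the receiver.

def percent_change(old: float, new: float) -> float:
    """Signed percentage change from old to new."""
    return (new - old) / old * 100.0

CX4_END_TO_END_NS = 700   # ConnectX-4 end-to-end latency
CX5_END_TO_END_NS = 600   # ConnectX-5 end-to-end latency
NIC_LATENCY_NS = 100      # per-adapter latency quoted for ConnectX-5
NICS_PER_PATH = 2         # sending adapter + receiving adapter

# A drop in latency is a negative change, so flip the sign to report a gain.
improvement = -percent_change(CX4_END_TO_END_NS, CX5_END_TO_END_NS)
nic_share_ns = NIC_LATENCY_NS * NICS_PER_PATH
rest_of_stack_ns = CX5_END_TO_END_NS - nic_share_ns

print(f"latency improvement: {improvement:.1f}%")
print(f"adapters: {nic_share_ns} ns, rest of path: {rest_of_stack_ns} ns")
```

On these numbers the two adapters account for a third of the path, leaving 400 nanoseconds in the rest of the stack, which is the part offload targets.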

For instance, with the combination of the ConnectX-4 adapters and the original Switch-IB InfiniBand switches, something on the order of 30 percent of the MPI cluster memory stack could be offloaded from the CPU to the network infrastructure, and with the combination of the ConnectX-5 adapters and the SwitchIB-2 switches, that percentage is somewhere around 60 percent. As we have previously reported, the SwitchIB-2 switches incorporate MPI collective operations that logically should be in the switches and in fact run better there, and more MPI collective and tag matching algorithms are offloaded to the ConnectX-5 cards as well. Shainer says that in the fullness of time, all MPI operations could be offloaded to the switches and adapters, freeing up even more CPU for actual simulation and modeling.
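To make "tag matching" concrete, here is a minimal software model of the matching rules the MPI standard defines for point-to-point messages, which is the work a NIC takes over when it offloads tag matching. It is a sketch only: the class and function names are hypothetical, and it says nothing about how the ConnectX-5 hardware actually implements matching.

```python
# Minimal software model of MPI-style tag matching (hypothetical names; not
# Mellanox's hardware design). A posted receive matches an incoming message
# when communicator, source, and tag agree; ANY_SOURCE / ANY_TAG are wildcards.
# Posted receives are searched in posting order, and messages that arrive
# before a matching receive wait in an "unexpected" queue in arrival order.
from dataclasses import dataclass
from typing import Optional

ANY_SOURCE = -1  # stand-in for MPI_ANY_SOURCE
ANY_TAG = -1     # stand-in for MPI_ANY_TAG

@dataclass
class Envelope:
    comm: int
    source: int
    tag: int

@dataclass
class PostedRecv:
    comm: int
    source: int
    tag: int
    buffer: list

def matches(recv: PostedRecv, env: Envelope) -> bool:
    """True if the receive accepts a message with this envelope."""
    return (recv.comm == env.comm
            and recv.source in (ANY_SOURCE, env.source)
            and recv.tag in (ANY_TAG, env.tag))

class Matcher:
    def __init__(self) -> None:
        self.posted: list[PostedRecv] = []                    # receives awaiting a message
        self.unexpected: list[tuple[Envelope, object]] = []   # messages awaiting a receive

    def arrive(self, env: Envelope, payload: object) -> Optional[PostedRecv]:
        """Handle an incoming message; return the receive it completed, if any."""
        for i, recv in enumerate(self.posted):
            if matches(recv, env):
                self.posted.pop(i)          # earliest-posted match wins
                recv.buffer.append(payload)
                return recv
        self.unexpected.append((env, payload))
        return None

    def post(self, recv: PostedRecv) -> bool:
        """Post a receive; return True if a queued unexpected message satisfied it."""
        for i, (env, payload) in enumerate(self.unexpected):
            if matches(recv, env):
                self.unexpected.pop(i)      # earliest-arrived match wins
                recv.buffer.append(payload)
                return True
        self.posted.append(recv)
        return False

if __name__ == "__main__":
    m = Matcher()
    r = PostedRecv(comm=0, source=ANY_SOURCE, tag=7, buffer=[])
    m.post(r)                                              # nothing has arrived yet
    m.arrive(Envelope(comm=0, source=3, tag=5), "early")   # tag mismatch: queued
    m.arrive(Envelope(comm=0, source=3, tag=7), "hello")   # completes r
    print(r.buffer, len(m.unexpected))
```

Every message and every receive walks one of these queues, which is why doing the search in the adapter rather than on the host CPU can pay off at high message rates.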

The message rate on the ConnectX-5 adapters is also 33 percent higher, at 200 million messages per second, than the 150 million messages per second that the ConnectX-4 adapter cards delivered. This increase in messaging rate is important because it now more or less matches the 195 million messages per second that a 100 Gb/sec port on the SwitchIB-2 delivers. (The SwitchIB-2 ASIC can handle 36 ports running at EDR speeds with an aggregate bandwidth of 7.2 Tb/sec, a 90 nanosecond latency, and a throughput of 7.02 billion messages per second.) According to data from Intel presented at SC15 last fall, the raw feeds and speeds of its Omni-Path 100 switch show a port-to-port latency of between 100 nanoseconds and 110 nanoseconds, and on MPI workloads using the Ohio State Micro Benchmarks, the “Prairie River” switch ASIC can run at a peak of 195 million messages per second per port; the “Wolf Creek” Omni-Path adapter card was said to handle 160 million messages per second on the MPI test. In a more recent set of tests cited by Intel, which we covered previously, the MPI latency on an Omni-Path network was 930 nanoseconds and the message rate was 89 million messages per second. Those Intel tests also showed EDR InfiniBand handling fewer messages (only 69.8 million messages per second) and having a higher latency (1.03 microseconds).
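The switch figures in parentheses hang together arithmetically. The sketch below assumes the 7.2 Tb/sec aggregate counts both directions of each port, a common vendor convention, and that the aggregate message rate is simply the per-port rate times 36 ports.

```python
# Back-of-the-envelope check of the SwitchIB-2 and adapter figures above.
# Assumptions (ours): aggregate bandwidth counts both directions of every
# port, and the switch message rate is the per-port rate times 36.
PORTS = 36
PORT_GBPS = 100                    # EDR InfiniBand, per direction
PORT_MSG_RATE = 195e6              # messages/sec per switch port
CX4_MSG_RATE, CX5_MSG_RATE = 150e6, 200e6

aggregate_tbps = PORTS * PORT_GBPS * 2 / 1000   # both directions, in Tb/sec
switch_msg_rate = PORTS * PORT_MSG_RATE
adapter_gain_pct = (CX5_MSG_RATE - CX4_MSG_RATE) / CX4_MSG_RATE * 100

print(f"aggregate bandwidth: {aggregate_tbps:.1f} Tb/sec")
print(f"switch message rate: {switch_msg_rate / 1e9:.2f} billion/sec")
print(f"ConnectX-5 vs ConnectX-4 message rate: +{adapter_gain_pct:.0f}%")
```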

This shows why everyone needs to benchmark actual clusters, on machines with as many nodes as possible, and then have an accurate way of predicting how network performance scales as a system is built out.

There are a number of interesting features on the new ConnectX-5 adapters that go beyond the improved feeds and speeds. The ability to checkpoint interim application state in the background is potentially a game changer. With normal checkpointing, you have to stop the application across all of the nodes at once, save the state of memory and the application to persistent storage, and then resume processing. Precisely how this background checkpointing works is unclear, and we will be investigating it as the ConnectX-5 adapters come to market in the third quarter of this year. As with the prior ConnectX-4 cards, the ConnectX-5 adapter includes an on-chip eSwitch PCI-Express switch, and with the update to the ASIC it now also supports a switchless topology that allows four nodes to be linked in a ring using just the two ports on each adapter, without a switch at all.

This switchless interconnect would allow for multiple rings of baby clusters to be interlinked, and Shainer says Mellanox is not exactly sure how customers might use this functionality. “We are not just doing things because we can, but at the same time, we are not deciding ahead of time what they should do,” he says. For starters, end users who want to build baby clusters on the cheap where latency between the nodes is not a big issue can now do so. Small rings could be interconnected with switches if that made sense, perhaps for distributed storage clusters. There are lots of possibilities.
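The ring itself is easy to model. The sketch below, with hypothetical helper names, treats each node's two adapter ports as links to its left and right neighbors and counts hops with a breadth-first search; it models topology only, not how the adapters forward traffic between their ports.

```python
# Topology model of a switchless ring: each node's two adapter ports link it
# to its left and right neighbors, so traffic for a non-adjacent node passes
# through intermediate nodes. Helper names are hypothetical; port-to-port
# forwarding inside the adapter is not modeled.
from collections import deque

def ring_links(n: int) -> dict[int, set[int]]:
    """Neighbor sets for an n-node ring (two ports per node, n >= 3)."""
    return {i: {(i - 1) % n, (i + 1) % n} for i in range(n)}

def hops(links: dict[int, set[int]], src: int, dst: int) -> int:
    """Shortest hop count between two nodes, via breadth-first search."""
    seen = {src}
    frontier = deque([(src, 0)])
    while frontier:
        node, dist = frontier.popleft()
        if node == dst:
            return dist
        for nxt in links[node]:
            if nxt not in seen:
                seen.add(nxt)
                frontier.append((nxt, dist + 1))
    raise ValueError("nodes are not connected")

links = ring_links(4)
for dst in range(1, 4):
    print(f"node 0 -> node {dst}: {hops(links, 0, dst)} hop(s)")
```

For four nodes the worst case is two hops; in general an n-node ring needs up to n // 2 hops, which is one reason such switchless rings suit small clusters where inter-node latency is not the main concern.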

The ConnectX-5 adapters also support NVM-Express over Fabrics, which extends the NVM-Express protocol beyond the PCI-Express bus and is being used to accelerate links between servers and clusters of storage servers that use NVM-Express to connect flash (and soon other persistent storage like 3D XPoint) to the storage server in a low latency, high bandwidth fashion. The ConnectX-5 adapters will support current PCI-Express 3.0 and future PCI-Express 4.0 peripheral slots in systems, so they are future-proof. IBM is expected to have PCI-Express 4.0 support with the Power9 processor next year, and thus far it does not look like the future “Skylake” Xeon v5 processors from Intel will support PCI-Express 4.0. But we think this could change.
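Why PCI-Express 4.0 matters for a two-port card comes down to slot bandwidth. This is our own back-of-the-envelope sketch, not a Mellanox figure: it assumes an x16 slot and counts only the 128b/130b line encoding, ignoring packet overhead that lowers usable throughput further.

```python
# Rough per-direction bandwidth of a PCI-Express x16 slot versus a two-port
# 100 Gb/sec adapter. Assumptions (ours): x16 link; only 128b/130b line
# encoding is counted, so real usable throughput is somewhat lower.
LANES = 16
ENCODING = 128 / 130                      # PCIe 3.0 and 4.0 line-code efficiency
GT_PER_SEC = {"PCIe 3.0": 8, "PCIe 4.0": 16}
ADAPTER_GBPS = 2 * 100                    # both ports running at 100 Gb/sec

def slot_gbps(gen: str) -> float:
    """Per-direction bandwidth of an x16 slot in Gb/sec after encoding."""
    return GT_PER_SEC[gen] * LANES * ENCODING

for gen in GT_PER_SEC:
    bw = slot_gbps(gen)
    verdict = "enough" if bw >= ADAPTER_GBPS else "not enough"
    print(f"{gen} x16: {bw:.0f} Gb/sec per direction, {verdict} for {ADAPTER_GBPS} Gb/sec")
```

By this rough measure a PCI-Express 3.0 x16 slot, at about 126 Gb/sec per direction, cannot keep both 100 Gb/sec ports saturated at once, while a PCI-Express 4.0 x16 slot, at about 252 Gb/sec, can.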

The ConnectX-5 adapters top out at 100 Gb/sec and will come in variants with one or two ports. Moving up to 200 Gb/sec speeds with HDR InfiniBand in 2017 and with a next-generation Spectrum Ethernet switch perhaps in 2018 will require a new ASIC in the adapter cards, says Shainer. (Presumably it will be called ConnectX-6.) The ConnectX-5 adapters will be priced about the same as the ConnectX-4 adapters they replace in the Mellanox line. The top-end ConnectX-5 adapter should cost around $1,600, Shainer estimates, a premium of roughly 18 percent over the $1,355 street price of a two-port ConnectX-4 EN adapter.
