Earlier this summer, three history of economics journals were suppressed from the 2017 edition of the Journal Citation Reports (JCR), the annual metrics source that reports Journal Impact Factors along with other citation metrics.

The three journals were the Journal of the History of Economic Thought (JHET), The European Journal of the History of Economic Thought (EJHET) and the History of Economic Ideas (HEI).

The grounds for suppressing all three journals were “citation stacking,” a pattern wherein a large number of citations from one or more journals affects the performance and ranking of others. In some instances, I have referred to this pattern as a “citation cartel.” Clarivate Analytics, the publisher of the JCR, has defined its methods for detecting and evaluating citation stacking.

Shortly after the JCR release, Nicola Giocoli, Editor of HEI, posted an open letter dated June 21, 2018 to Tom Ciavarella, Manager of Publisher Relations for Clarivate, arguing that the anomalous citation pattern was caused by a single annual review of the literature. This type of review, Giocoli argued, was “a serious piece of research that we commission to junior scholars in the field (usually, scholars who just finished their PhD) to let them recognize and assess the research trends in the discipline.” HEI had published a similar review in 2016 and was planning to publish others in 2018 and 2019.

While I am not privy to Ciavarella’s response to the appeal, Clarivate did not reverse its editorial decision. Exasperated, Giocoli lashed out in a second open letter, dated July 3, 2018:

“By a single stroke, namely, by excluding one single item published in HEI, you could indeed publish a very meaningful 2017 metric for the JHET (and the EJHET, too!). I cannot believe your team is unable to (manually?) do this very simple operation and cancel all its references from your metrics for any journal the authors may have quoted. It is just one paper!”

Following a similar communications approach, seven presidents of history of economics societies, together with eight journal editors, published another open letter appealing Clarivate’s decision and arguing that its methods for detecting citation distortion were biased against small fields:

“In many areas of the natural sciences, or in economics, for example, none of those initiatives would cause any citation distortions because of their broad net and higher level of citations.”

The problematic paper, “From Antiquity to Modern Macro: An Overview of Contemporary Scholarship in the History of Economic Thought Journals, 2015-2016,” was published in 2017 and includes 212 references. Like its predecessor (“Annual Survey of Ideas in History of Economic Thought Journals, 2014-2015”), the review is restricted entirely to papers that count toward Journal Impact Factor (JIF) calculations. While this is enough to raise some eyebrows, it was not the factor that resulted in three journal suspensions.

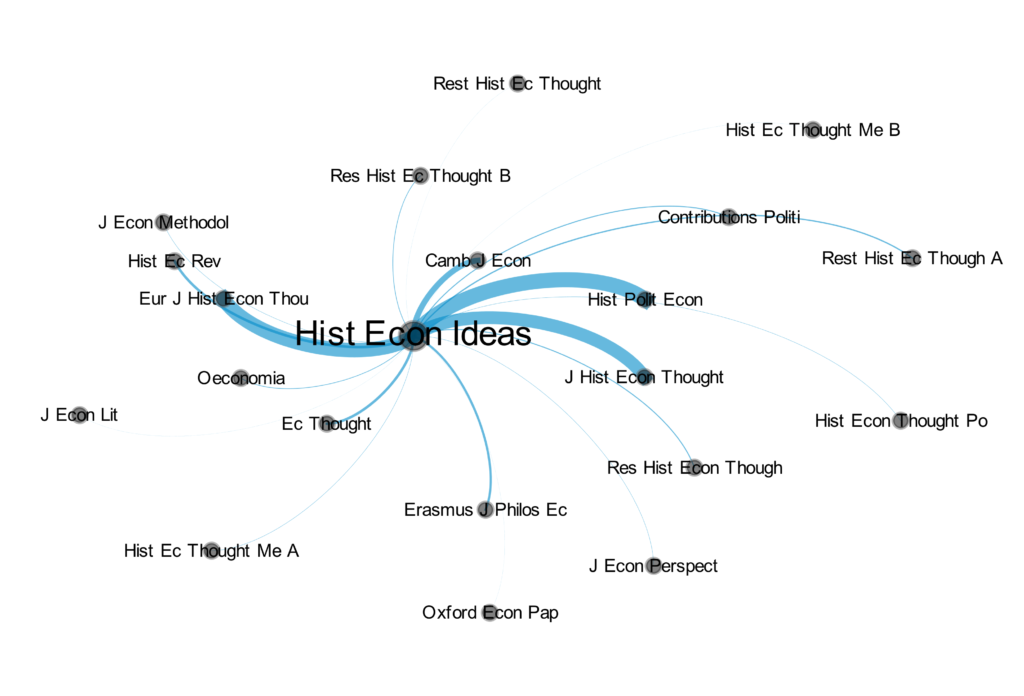

Of the 212 references in this review, 41 were directed to papers published in JHET and 46 to EJHET, but just one to HEI itself. The remaining 124 citations referenced other journals.

According to the Web of Science, there were a total of 81 citations made in 2017 from indexed sources to JHET, 66 (81%) of which were directed to papers published in the previous two years. Between 2015 and 2016, JHET published 49 citable items. If we use these numbers to calculate JHET’s 2017 Impact Factor, we arrive at a score of 1.347 (66/49). If we remove the 41 references from HEI’s literature survey, JHET’s score would be just 0.510 (25/49). For context, JHET’s 2016 JIF score was 0.490. For EJHET, we calculate a 2017 JIF score of 1.200 (90/75) including citations from the review and 0.587 (44/75) excluding the review. The latter figure is similar to the JIF score EJHET received in 2016 (0.325).
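The arithmetic above can be reproduced with a short sketch. It assumes only the simple two-year ratio used in this post (citations to items from the previous two years, divided by citable items from those years); the function name and data layout are hypothetical and are not part of any Clarivate tool.

```python
def jif_with_and_without(citations, from_review, citable_items):
    """Return (score including the review, score excluding it), rounded to 3 places.

    citations      -- JIF-directed citations received in the JCR year
    from_review    -- how many of those came from the single HEI survey
    citable_items  -- citable items published in the previous two years
    """
    with_review = round(citations / citable_items, 3)
    without_review = round((citations - from_review) / citable_items, 3)
    return with_review, without_review


# Figures quoted in the paragraph above (2017 citations, 2015-2016 items).
journals = {
    "JHET": {"citations": 66, "from_review": 41, "citable_items": 49},
    "EJHET": {"citations": 90, "from_review": 46, "citable_items": 75},
}

for name, counts in journals.items():
    with_review, without_review = jif_with_and_without(**counts)
    print(f"{name}: {with_review:.3f} with the review, {without_review:.3f} without")
```

In both cases a single paper accounts for more than half of the numerator, which is why removing it cuts the score by more than half.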

Conspicuously, one journal that avoided suppression was the History of Political Economy (HPC), which received 48 JIF-directed citations from the literature review, more than half of the 92 citations that counted towards its JIF score in 2017. With these citations, HPC received a 2017 JIF score of 1.415 (92/65); without them, just 0.677.

There is no doubt that the literature survey from the HEI was responsible for greatly inflating the scores and rankings of three journals. This led to suppression of two of the three citation recipients along with HEI itself. Apart from the obvious fact that the literature search conveniently focused on papers published in the previous two years (as did an earlier HEI review), there are no other suspicious circumstances surrounding the paper: There was just one self-citation to HEI itself; all three journals were published by different organizations and the authors did not concurrently serve as editors of the recipient journals. I could not find any conflicts of interest among the authors, journals, and publishers. The only apparent fault of the suppressed journals was that they suffered from a general lack of citation interest.

There are many fields that suffer from the same ailment. Consider Library Science.

The library journal Interlending & Document Supply (I&DS) was suppressed for high levels of self-citation. In 2014, 73% of all self-citations were directed at its JIF. When reinstated in the JCR in 2015, JIF-directed self-citation rates grew to 96%. Most of the citations came from its editor, Mike McGrath, who routinely published surveys on related library topics. While McGrath was comprehensive in reviewing the relevant literature, I&DS generally receives very few citations. Its 2015 JIF was defined with just 24 citations in the numerator, 18 of which came from McGrath’s reviews.

The Law Library Journal (LLJ) was also suspended from the JCR from 2011 through 2013. In 2013, 90% of the citations defining its Impact Factor were self-citations. When LLJ was reinstated in the JCR in 2014, self-citations accounted for 64% of its score. In real terms, this was just 10 citations out of a total of 28.

In contrast, consider the following review paper, “Highlights of the Year in JACC 2013,” published in February 2014 by the Editor-in-Chief and associate editors of the Journal of the American College of Cardiology (JACC). This review cites 267 papers, 261 of which were JIF-directed self-citations. In 2014, JACC received 14,539 JIF-directed citations, meaning this one review contributed less than 2% of JACC’s IF score: huge in absolute terms but minimal in relative terms.
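To make the absolute-versus-relative contrast concrete, the sketch below computes what share of each journal’s JIF numerator came from a single source, using only counts quoted in this post. The helper name and the labels are hypothetical, and the calculation is a plain proportion, not Clarivate’s actual suppression test.

```python
def share_of_numerator(from_source, total_citations):
    """Fraction of a journal's JIF-directed citations that came from one source."""
    return from_source / total_citations


# (label, citations from the single source, total JIF-directed citations)
cases = [
    ("JHET 2017, HEI survey", 41, 66),
    ("EJHET 2017, HEI survey", 46, 90),
    ("HPC 2017, HEI survey", 48, 92),
    ("I&DS 2015, editor's reviews", 18, 24),
    ("JACC 2014, one editorial review", 261, 14539),
]

# Sort by absolute count so the mismatch with the relative share is visible.
for label, from_source, total in sorted(cases, key=lambda c: c[1], reverse=True):
    share = share_of_numerator(from_source, total)
    print(f"{label}: {from_source} citations, {share:.1%} of the numerator")
```

JACC tops the list in absolute citations yet sits at the bottom in proportional terms, while the small-field journals show the reverse.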

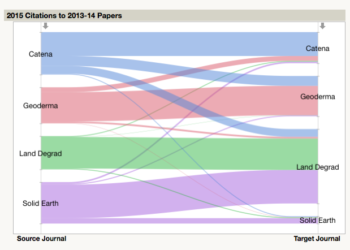

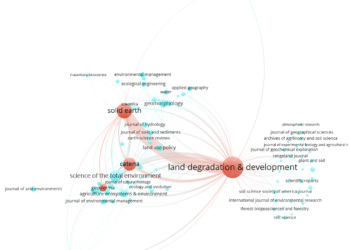

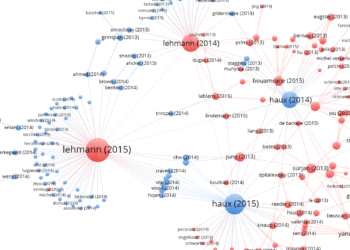

Also consider Land Degradation & Development (LDD), a journal whose society determined that its editor had abused his position to coerce authors to cite LDD and his own publications. The journal went from rags to citation riches within a few years, quintupling its Impact Factor within four years and moving from 10th place to 1st place within its field.

While both JACC and LDD are examples of citation behaviors that artificially load hundreds of citations onto the performance scale, neither practice was sufficient to tip Clarivate into taking action to suppress either journal. Reverse-engineering JCR’s suppression decisions illustrates that decisions are based on high proportional thresholds.

Clarivate relies upon several criteria when determining when titles should be suppressed and “does not assume motive on behalf of any party.” To Clarivate, this is purely an exercise in measurement. Nevertheless, the very methods for defining citation distortion make the JCR highly sensitive to tiny aberrations when they affect specialist journals in small fields and largely insensitive to big distortions when they affect large, highly cited journals.

It may be time to review Clarivate’s grounds for suppression and decide whether its scales require rebalancing.

Editor’s note: Tom Ciavarella was originally identified as the Manager of Public Relations for Clarivate. He is in fact the Manager of Publisher Relations for Clarivate and the post has been updated to correct this.

Discussion

5 Thoughts on "Tipping the Scales: Is Impact Factor Suppression Biased Against Small Fields?"

An important piece which demonstrates how algorithms, even if developed for the best of motivations, can be harmful.

Dear Phil Davis, your post is really illuminating about what is at stake. Not only did the HEI editor, presidents of societies, and editors of other history of economics (not economic history) journals write letters to Clarivate, but so did the editors of JHET and EJHET (available here: https://historyofeconomics.org/jhet-and-ejhet-editors-appeal-against-impact-factor-suppression/). All letters point to the small community / small citation network in history of economics. While Clarivate has answered HEI, it has not answered the joint JHET-EJHET letter (sent on July 2nd, 2018) or the subsequent letter by presidents of societies and other journal editors (sent on July 30). In the acknowledgment letter Clarivate sent to the president of the History of Economics Society (HES), it promised an answer by mid-August. Clarivate, a private company running the JIF statistics, causes harm not only by following algorithms that, even if developed for the best of motivations (as Charles Oppenheim said above), are very sensitive to outliers (such as one single literature review published in HEI in 2017), but also by not taking full account of its responsibilities to handle alleged problems transparently and to respond promptly to substantiated calls to reverse its decision.

Excluding reviews and literature surveys from the JIF or having separate values for primary citation rate JIF and secondary literature citation rate JIF would go far toward eliminating the ability to manipulate JIF (intentionally or algorithmically) with self-cited review articles.

To clarify, what’s proposed here wouldn’t exclude citations to reviews and surveys from counting in the JIF; one just wouldn’t count any citations made by review and survey articles. So you’d still have motivation for publishing a good review article (helpful to the field) but no motivation to load up that article with lots of citations to your own journal.

Dear Dr. Davis, thank you for your post.

May I add what follows for TSK readers’ further information?

Here at HEI we offered Clarivate our most complete collaboration to avoid similar incidents in the future, while retaining our (sacrosanct) freedom to publish what we deem scientifically worth publishing.

Our proposals included tagging our surveys (starting from the title itself), in order to allow Clarivate’s algorithm to identify and exclude them from IF metrics, or even devising a new method to write references in those surveys, in order to guarantee that Clarivate’s algorithm would NOT count them, while still providing readers with enough information to identify the sources.

Despite publicly admitting our good faith, Clarivate claimed to be unable to handle journals or essays individually and thus refused to collaborate in any way. They told us that it was simply up to HEI to decide whether to stop publishing those surveys altogether or, at most, instruct our authors to make a maximum number of references (!) to the most recent (2 years) articles in the same few journals. Needless to say, both suggestions sounded outrageous to us.

Nicola Giocoli

(Editor, History of Economic Ideas)