In 2012, Google made a breakthrough: It trained its AI to recognize cats in YouTube videos. Google’s neural network, software that uses statistics to approximate how the brain learns, taught itself to detect the shapes of cats and humans with more than 70% accuracy. That was a 70% improvement over any other machine-learning system at the time.

Five years later, a contest Google is sponsoring speaks volumes about the field’s advancement. Instead of finding cats, researchers will be required to train an AI to identify more than 5,000 different species of plants and animals. The contest, called iNat, will open in June and conclude in July.

“Over the last five years it’s been pretty incredible, the progress of deep [neural] nets,” says Grant Van Horn, lead competition organizer and graduate student at the California Institute of Technology. “I think bigger and more complex datasets are the way to make sure we keep making progress.”

To get an idea of what competitors will face, iNat organizers trained their own network on the data and turned in an impressive performance. Using open-source neural networks from Google, the team achieved up to 60% accuracy when given one chance to predict the answer, and more than 80% when given five chances. (For the AI-knowledgeable: these results come from Google’s Inception network and were measured against the validation set.) Benchmarks like the famous ImageNet competition improve by a few percentage points each year, and some argue that simple competitions can’t measure the true “intelligence” of an algorithm. But the competitions do indicate overall trends.
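The “one chance” and “five chances” figures correspond to what researchers call top-1 and top-5 accuracy: a prediction counts as correct if the true species is the model’s single best guess, or anywhere among its five best guesses. The snippet below is a minimal sketch of that metric, assuming NumPy and a model that outputs one score per species; the function name `top_k_accuracy` and the toy scores are hypothetical and are not iNat’s actual evaluation code.

```python
import numpy as np


def top_k_accuracy(scores: np.ndarray, labels: np.ndarray, k: int) -> float:
    """Fraction of examples whose true label is among the k highest-scoring classes.

    scores: (n_examples, n_classes) array of model scores or probabilities.
    labels: (n_examples,) array of integer class indices (the true species).
    """
    # Column indices of the k largest scores in each row; order within the top k is irrelevant.
    top_k = np.argsort(scores, axis=1)[:, -k:]
    # An example is a hit if its true label appears anywhere in its top-k guesses.
    hits = (top_k == labels[:, None]).any(axis=1)
    return float(hits.mean())


# Hypothetical toy data: 3 photos, 4 candidate species.
scores = np.array([
    [0.1, 0.6, 0.2, 0.1],  # true species 1: best guess is correct
    [0.5, 0.1, 0.3, 0.1],  # true species 2: only the second-best guess is correct
    [0.4, 0.3, 0.2, 0.1],  # true species 3: ranked last
])
labels = np.array([1, 2, 3])

print(top_k_accuracy(scores, labels, k=1))  # one chance
print(top_k_accuracy(scores, labels, k=2))  # two chances
```

Giving the model more guesses can only keep or raise the score, which is why the five-guess figure in the paragraph above is higher than the one-guess figure.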

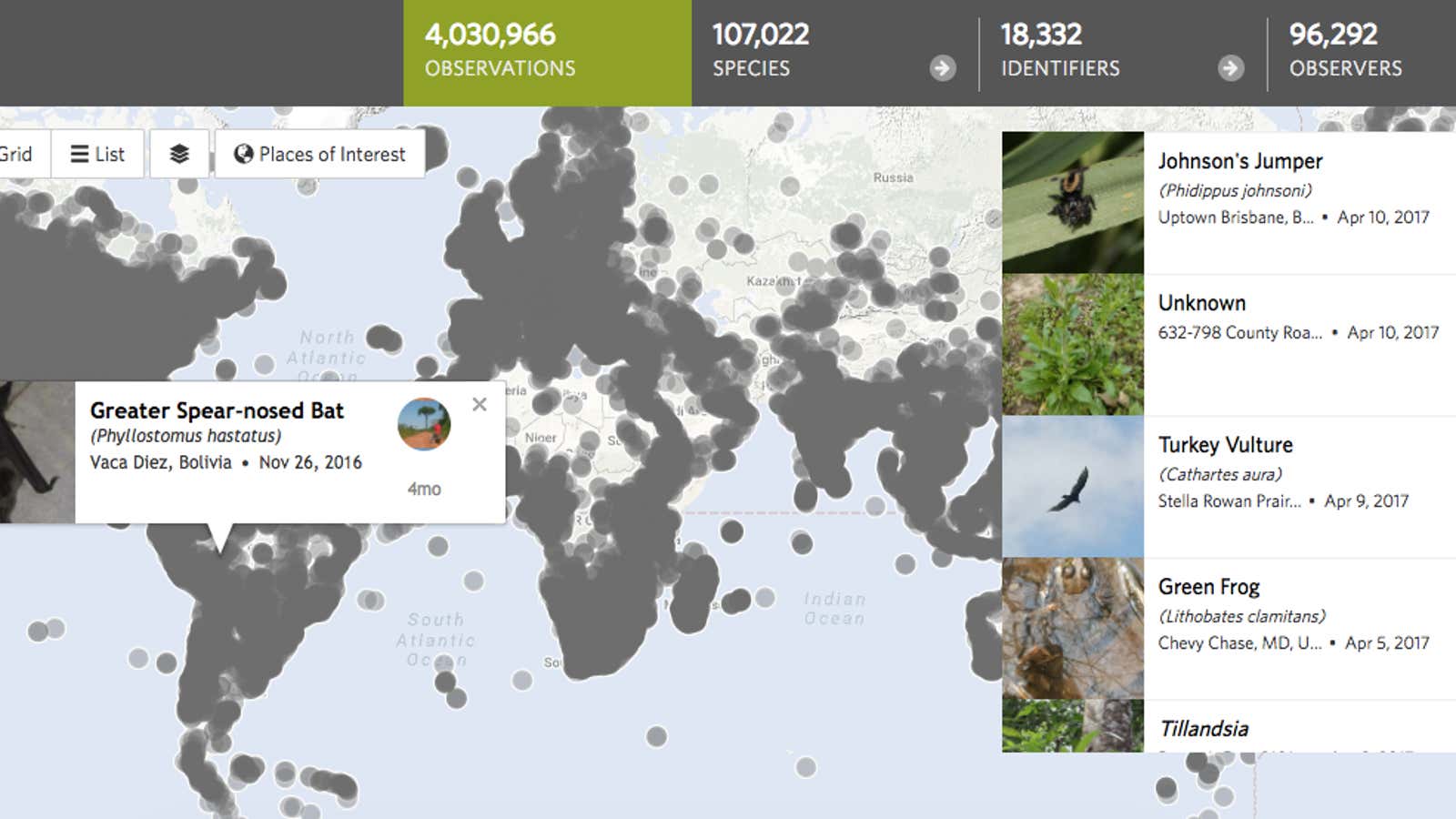

Van Horn says this latest Google competition differs from ImageNet, which asks algorithms to identify a wide variety of objects like cars, houses, and boats, because iNat requires AI to examine the “nitty-gritty details” that separate one species from another. This field is called fine-grained image classification. The data is provided by iNaturalist.org, a website where nature enthusiasts upload pictures and work together as a community to identify species correctly. The dataset consists of more than 575,000 images for training, plus nearly 100,000 images to validate what the AI has actually learned.

On a scale from general image recognition (ImageNet) to specific (facial recognition, where most faces look broadly the same and only slight variations matter), iNat lies somewhere in the middle, Van Horn says.

Artificial intelligence research has progressed more in the last five years than in the previous 50, in part because so much more data is available for training AI. Much of that progress can be seen in products from Google, Amazon, and Facebook: your photos can be tagged automatically, your email app knows how you like to respond, and a new smart speaker can use AI to recognize what you’re saying.

Van Horn, who has specialized in building AI that tells bird species apart, says the iNat competition illustrates how AI is beginning to help people learn about the world around them, rather than just helping them organize their photos. iNat may build the software into an app that could help people identify plants and animals just by taking a picture.

“You start building algorithms that can actually answer the questions that people have,” Van Horn says. “Like what bird am I actually looking at? I know it’s a bird. Please computer, don’t just tell me it’s a bird.”