Summary: Autonomous Vehicles (AVs) are supposed to be just around the corner, but the anecdotal evidence is that their claims to safety are way out ahead of reality. The solution may lie in a shared tier of on-board telematics, the part that supports SLAM (simultaneous localization and mapping), with some of that data shared car-to-car.

According to many in the autonomous vehicle (AV) industry we’re supposed to see self-driving cars on the road as early as 2018. At the outside that’s only 18 or 20 months from now. These won’t necessarily be level 5 AVs (no steering wheel, no pedals, works in all environments all the time) but to get in the game these need to be at least level 3 (autonomous in most but not all situations with driver standing by to take control). If you’re wondering where we are currently, I’m told by industry experts that we’re at about 2 ½ with Tesla in the lead and Tesla’s Model 3 the most likely candidate for a level 3 vehicle.

But if you listen to the experts working on the systems and read the press about on-the-road incidents, this timeline seems very optimistic. The problem, curiously enough, may lie in the telematics: the signals sent from the car to home base, exactly who owns them, and for what purposes.

The headline of this article is meant to remind us of how imperfect this technology is today. It’s not widely reported (for obvious reasons) but for example recently a Google AV on a test run merging on the freeway moved directly into the side of a public transit bus because it was programmed to believe the bus would let it merge. That’s right. It thought like a human being, but not like a human being in a bad mood.

Also, if you look back at the March 20 Bloomberg Businessweek article on the brouhaha between Google and Uber, you’ll see that when Uber dispatched 16 self-driving cars in a December test in San Francisco (without seeking California DMV approval), the first thing one did was run a red light (even with a safety driver on board).

So what’s this got to do with telematics? At the March Strata+Hadoop conference in San Jose, Ms. Jay White Bear, a lead IBM AV data scientist, gave us a master class in SLAM (simultaneous localization and mapping) and just why the telematics are important.

Telematics, the signal from the car to home base, are designed to do at least three broad categories of things.

- Confirm and continue to improve the algorithms allowing the AV to function. This is clearly the magic under the hood and every OEM would want this to be proprietary.

- Maintain a detailed record of any irregular incidents (aka accidents). There isn’t universal agreement on this yet, but several states that have put forth regs on AVs have required that at least the few seconds before and after an accident be publicly available to resolve insurance and legal disputes.

- Update geographic maps and particularly changes and anomalies that crop up all the time because of construction, weather, or the trash cans your neighbor left out in the street. It’s this category of telematics that we want to focus on.

When you think about AVs, the very word ‘autonomous’ leads you to believe that each car is an independent actor capable of safe independent operation. Well, safe operation is the goal, but the cars may need to be a little less independent than we were thinking. The issue is car-to-car communication, which brings us back to SLAM.

Ms. White Bear says the key challenges in SLAM are these:

- That the computer vision has correctly identified the images it sees. Is that a pedestrian, a post, or a tumbleweed?

- Collision avoidance. More generally, the problem of a non-static environment and how to interpret the path and proximity of moving objects in order to avoid collision. By the way, Ms. White Bear says sheets of glass like store windows still give Lidar a problem.

- Piecing those image elements together. That is, in a set of video frames, does the object identified in frame 1 correspond to the same object in frame 2, when any apparent difference could be caused by the movement of the vehicle, the movement of the object, differences in elapsed time between frames, or even jitters in the camera?

- Updating the map. This is a key piece called loop closure, meaning recognizing a previously visited location and updating the reference map to account for any changes, like that huge new pothole or your neighbor’s trash cans.
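To make the "piecing together" challenge a little more concrete, here is a minimal Python sketch of one common way to decide whether an object in frame 1 is the same object in frame 2: greedy matching on bounding-box overlap (intersection-over-union). This is my own illustration, not the method Ms. White Bear described. The box format, the function names `iou` and `associate`, and the 0.3 threshold are all assumptions chosen for the example.

```python
from typing import Dict, List, Tuple

# Assumed box format: (x_min, y_min, x_max, y_max) in pixels.
Box = Tuple[float, float, float, float]


def iou(a: Box, b: Box) -> float:
    """Intersection-over-union of two axis-aligned boxes."""
    overlap_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    overlap_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = overlap_w * overlap_h
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def associate(prev: List[Box], curr: List[Box],
              min_iou: float = 0.3) -> Dict[int, int]:
    """Greedily pair boxes across two frames, best overlap first.

    Returns {index_in_prev: index_in_curr}. Boxes left unmatched are
    treated as objects that disappeared or newly appeared.
    """
    scored = sorted(
        ((iou(p, c), i, j)
         for i, p in enumerate(prev)
         for j, c in enumerate(curr)),
        reverse=True,
    )
    matches: Dict[int, int] = {}
    used_curr = set()
    for score, i, j in scored:
        if score < min_iou:
            break  # everything after this overlaps too little to trust
        if i in matches or j in used_curr:
            continue  # each box may be matched at most once
        matches[i] = j
        used_curr.add(j)
    return matches


# Hypothetical detections: two objects shift slightly, one new object appears.
frame1 = [(0, 0, 10, 10), (50, 50, 60, 60)]
frame2 = [(52, 51, 62, 61), (1, 0, 11, 10), (200, 200, 210, 210)]
print(associate(frame1, frame2))  # {0: 1, 1: 0}
```

A production system would also have to compensate for the vehicle's own motion and camera jitter before comparing boxes, which is exactly the difficulty the bullet above points to; this sketch ignores that.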

It’s the second element, collision avoidance, and the last one, updating the map, that are our focus.

Right now there are essentially no vehicles (except test AVs and a handful of Teslas) sending these types of comprehensive telematics back to the mothership and essentially no vehicles communicating cooperatively with each other. When AVs finally hit the road there will be some robust telematics sent home, but these will definitely not, as of now, be shared with other OEMs.

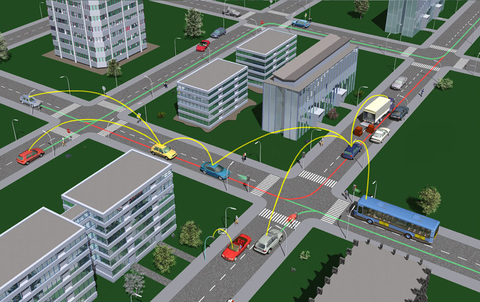

- AV-to-AV communication that would resolve the car-bus collision scenario by allowing the two AVs to negotiate paths in real time that avoid conflicts. Yes it will be years before there are enough AVs on the road to make this a significant factor but it would certainly give us peace of mind that at least in some cases our AV will make the right decision.

- Common mapping updates. Asking an AV to store and/or transmit every bit of data about the terrain it’s covering is probably more data than we can handle. Oh, and yes, the load of AV telematics on our wireless communication grid is a separate problem that needs to be worked out. But we could ask our AV to make note of anything new about a location it has visited before and transmit only the changes. This might exclude pedestrians but would intentionally include the new pothole and the trash cans. It would also account for the endless stream of road repairs, detours, and weather-related incidents like flooding.
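The "transmit only the changes" idea in the mapping bullet can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the map is modeled as a dictionary from a grid cell to a feature label, the labels and the `TRANSIENT` set are invented for the example, and the function assumes the vehicle has just re-covered the same stretch of road that the reference map describes. None of this comes from Ms. White Bear's talk or any OEM's actual format.

```python
from typing import Dict, Tuple

# Assumed representation: a map is {grid_cell: feature_label}.
Cell = Tuple[int, int]
FeatureMap = Dict[Cell, str]

# Hypothetical labels for things that move and should not be written to the map.
TRANSIENT = {"pedestrian", "vehicle"}


def map_delta(reference: FeatureMap,
              observed: FeatureMap) -> Dict[str, FeatureMap]:
    """Return only what changed on a revisited stretch of road.

    'added' holds static features that are new or relabeled;
    'removed' holds reference features whose cell is now empty.
    A cell currently occupied by a transient object is treated as
    occluded, so nothing is reported as removed there.
    """
    added = {
        cell: label
        for cell, label in observed.items()
        if label not in TRANSIENT and reference.get(cell) != label
    }
    removed = {
        cell: label
        for cell, label in reference.items()
        if cell not in observed
    }
    return {"added": added, "removed": removed}


# Hypothetical revisit: the cone is gone, a trash can is new, a pedestrian is ignored.
reference = {(0, 0): "pothole", (1, 0): "cone"}
observed = {(0, 0): "pothole", (2, 3): "trash_can", (4, 4): "pedestrian"}
print(map_delta(reference, observed))
# {'added': {(2, 3): 'trash_can'}, 'removed': {(1, 0): 'cone'}}
```

The payload sent over the air is just the two small dictionaries rather than the full scan, which is the bandwidth argument made above.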

So we come to the central question: who owns the telematics, or at least who owns which parts? The AV industry exemplifies the many ways that a fast-changing, high-value industry can get out ahead of government rulemaking. We certainly don’t want a patchwork of state-by-state rules, nor some heavy-handed approach that actually impedes progress. But Ms. White Bear and IBM seem to be onto something here. Shouldn’t we be advocating for a tier of shared telematics to address at least these two obvious issues?

This is clearly an area that the OEMs are not currently addressing, or even interested in cooperating on. There’s a separate issue also. There are a number of stealth startups out there just waiting to provide third-party services to the AV crowd. I’ve talked to some of these folks, and their biggest problem is getting access to the telematics that will trigger their service, whatever that service might be. Their alternative would be to provide their AV customers with yet another telematics capture device, akin to what a few insurance companies are providing today to measure driving habits or duration. This layer of cost and complexity is holding back startups that might be able to use the shared data stream Ms. White Bear proposes. I imagine that IBM would be happy to position itself to manage this data stream. But leaving this profit motive aside, it makes a lot of sense.

About the author: Bill Vorhies is Editorial Director for Data Science Central and has practiced as a data scientist and commercial predictive modeler since 2001. He can be reached at: