‘Artificial Intelligence’ Has Become Meaningless

It’s often just a fancy name for a computer program.

In science fiction, the promise or threat of artificial intelligence is tied to humans’ relationship to conscious machines. Whether it’s Terminators or Cylons or servants like the “Star Trek” computer or the Star Wars droids, machines warrant the name AI when they become sentient—or at least self-aware enough to act with expertise, not to mention volition and surprise.

What to make, then, of the explosion of supposed AI in media, industry, and technology? In some cases, the AI designation might be warranted, even if with some aspiration. Autonomous vehicles, for example, don’t quite measure up to R2-D2 (or HAL), but they do deploy a combination of sensors, data, and computation to perform the complex work of driving. But in most cases, the systems making claims to artificial intelligence aren’t sentient, self-aware, volitional, or even surprising. They’re just software.

* * *

Deflationary examples of AI are everywhere. Google funds a system to identify toxic comments online, a machine learning algorithm called Perspective. But it turns out that simple typos can fool it. Artificial intelligence is cited as a barrier to strengthen an American border wall, but the “barrier” turns out to be little more than sensor networks and automated kiosks with potentially dubious built-in profiling. Similarly, a “Tennis Club AI” turns out to be just a better line sensor using off-the-shelf computer vision. Facebook announces an AI to detect suicidal thoughts posted to its platform, but closer inspection reveals that the “AI detection” in question is little more than a pattern-matching filter that flags posts for human community managers.

AI’s miracles are celebrated outside the tech sector, too. Coca-Cola reportedly wants to use “AI bots” to “crank out ads” instead of humans. What that means remains mysterious. Similar efforts to generate AI music or to compose AI news stories seem promising at first blush. But then, AI editors trawling Wikipedia to correct typos and links end up stuck in infinite loops with one another. And according to human-bot interaction consultancy Botanalytics (no, really), 40 percent of interlocutors give up on conversational bots after one interaction. Maybe that’s because bots are mostly glorified phone trees, or else clever, automated Mad Libs.

AI has also become a fashion for corporate strategy. The Bloomberg Intelligence economist Michael McDonough tracked mentions of “artificial intelligence” in earnings call transcripts, noting a huge uptick in the last two years. Companies boast about undefined AI acquisitions. The 2017 Deloitte Global Human Capital Trends report claims that AI has “revolutionized” the way people work and live, but never cites specifics. Nevertheless, coverage of the report concludes that artificial intelligence is forcing corporate leaders to “reconsider some of their core structures.”

And both press and popular discourse sometimes inflate simple features into AI miracles. Last month, for example, Twitter announced service updates to help protect users from low-quality and abusive tweets. The changes amounted to simple refinements to hide posts from blocked, muted, and new accounts, along with other, undescribed content filters. Nevertheless, some takes on these changes, which amount to little more than additional clauses in database queries, conclude that Twitter is “constantly working on making its AI smarter.”
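To make the “additional clauses” point concrete, here is a minimal sketch of what such a filter might look like. It is not Twitter’s actual code: the `Tweet` record, the `visible_tweets` function, and the seven-day threshold for a “new” account are all hypothetical names and values chosen for illustration.

```python
from dataclasses import dataclass


@dataclass
class Tweet:
    # Hypothetical record; the field names are illustrative, not Twitter's schema.
    author: str
    text: str
    author_account_age_days: int


def visible_tweets(tweets, blocked, muted, min_account_age_days=7):
    """Return the tweets that pass three plain filter clauses."""
    return [
        t for t in tweets
        if t.author not in blocked                              # hide blocked accounts
        and t.author not in muted                               # hide muted accounts
        and t.author_account_age_days >= min_account_age_days   # hide brand-new accounts
    ]


timeline = [
    Tweet("alice", "hello", 400),
    Tweet("troll42", "abuse", 900),
    Tweet("newbie", "first post", 1),
    Tweet("bob", "lunch", 30),
]

shown = visible_tweets(timeline, blocked={"troll42"}, muted={"bob"})
print([t.author for t in shown])  # ['alice']
```

Nothing here learns, adapts, or surprises. Each condition is an ordinary rule that a person wrote down, which is the sense in which such a feature is “just software.”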

* * *

I asked my Georgia Tech colleague, the artificial intelligence researcher Charles Isbell, to weigh in on what “artificial intelligence” should mean. His first answer: “Making computers act like they do in the movies.” That might sound glib, but it underscores AI’s intrinsic relationship to theories of cognition and sentience. Commander Data poses questions about what qualities and capacities make a being conscious and moral—as do self-driving cars. A content filter that hides social media posts from accounts without profile pictures? Not so much. That’s just software.

Isbell suggests two features necessary before a system deserves the name AI. First, it must learn over time in response to changes in its environment. Fictional robots and cyborgs do this invisibly, by the magic of narrative abstraction. But even a simple machine-learning system like Netflix’s dynamic optimizer, which attempts to improve the quality of compressed video, takes data gathered initially from human viewers and uses it to train an algorithm to make future choices about video transmission.

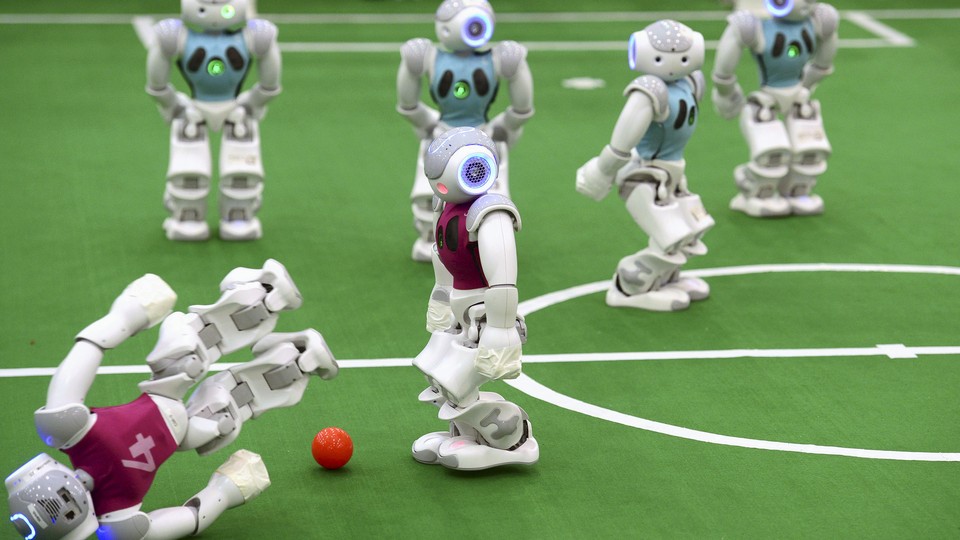

Isbell’s second feature of true AI: what it learns to do should be interesting enough that it takes humans some effort to learn. It’s a distinction that separates artificial intelligence from mere computational automation. A robot that replaces human workers to assemble automobiles isn’t an artificial intelligence, so much as a machine programmed to automate repetitive work. For Isbell, “true” AI requires that the computer program or machine exhibit self-governance, surprise, and novelty.

Griping about AI’s deflated aspirations might seem unimportant. If sensor-driven, data-backed machine learning systems are poised to grow, perhaps people would do well to track the evolution of those technologies. But previous experience suggests that computation’s ascendency demands scrutiny. I’ve previously argued that the word “algorithm” has become a cultural fetish, the secular, technical equivalent of invoking God. To use the term indiscriminately exalts ordinary (and flawed) software services as false idols. AI is no different. As the bot author Allison Parrish puts it, “whenever someone says ‘AI’ what they’re really talking about is ‘a computer program someone wrote.’”

Writing at the MIT Technology Review, the Stanford computer scientist Jerry Kaplan makes a similar argument: AI is a fable “cobbled together from a grab bag of disparate tools and techniques.” The AI research community seems to agree, calling their discipline “fragmented and largely uncoordinated.” Given the incoherence of AI in practice, Kaplan suggests “anthropic computing” as an alternative—programs meant to behave like or interact with human beings. For Kaplan, the mythical nature of AI, including the baggage of its adoption in novels, film, and television, makes the term a bogeyman to abandon more than a future to desire.

* * *

Kaplan keeps good company. When the mathematician Alan Turing accidentally invented the idea of machine intelligence almost 70 years ago, he proposed that machines would be intelligent when they could trick people into thinking they were human. At the time, in 1950, the idea seemed unlikely; even though Turing’s thought experiment wasn’t limited to computers, the machines still took up entire rooms just to perform relatively simple calculations.

But today, computers trick people all the time. Not by successfully posing as humans, but by convincing them that they are sufficient alternatives to other tools of human effort. Twitter and Facebook and Google aren’t “better” town halls, neighborhood centers, libraries, or newspapers—they are different ones, run by computers, for better and for worse. The implications of these and other services must be addressed by understanding them as particular implementations of software in corporations, not as totems of otherworldly AI.

On that front, Kaplan could be right: abandoning the term might be the best way to exorcise its demonic grip on contemporary culture. But Isbell’s more traditional take—that AI is machinery that learns and then acts on that learning—also has merit. By protecting the exalted status of its science-fictional orthodoxy, AI can remind creators and users of an essential truth: today’s computer systems are nothing special. They are apparatuses made by people, running software made by people, full of the feats and flaws of both.