Take a look at this function:

    for (j = 0; j < n; j++) {
      for (i = 0; i < m; i++) {
        float total(0);
        for (l = 0; l < k; l++) {
          const size_t a_index = ((i * a_i_stride) + (l * a_l_stride));
          const float a_value = a[a_index];
          const size_t b_index = ((j * b_j_stride) + (l * b_l_stride));
          const float b_value = b[b_index];
          total += (a_value * b_value);
        }
        const size_t c_index = ((i * c_i_stride) + (j * c_j_stride));
        c[c_index] = total;
      }
    }

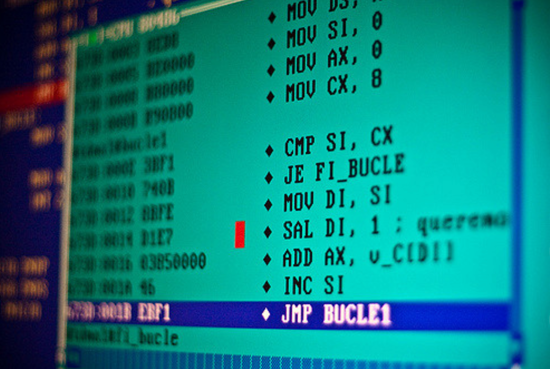

For something so simple, it turns out it’s amazingly hard for compilers to speed up without a lot of human intervention. This is the heart of the GEMM matrix multiply function, which powers deep learning, and every fast implementation I know has come from old-school assembler jockeys hand-tweaking instructions!

When I first started looking at the engineering side of neural networks, I assumed that I’d be following the path I’d taken for the rest of my career and getting most of my performance wins from improving the algorithms, writing clean code, and generally getting out of the way so the compiler could do its job of optimizing it. Instead I spend a large amount of my time worrying about instruction dependencies and all the other hardware details that we were supposed to be able to escape in the 21st century. Why is this?

Matrix multiplies are a hard case for modern compilers to handle. The inputs used for neural networks mean that one function call may require millions of operations to complete, which magnifies the latency impact of any small changes to the code. The access patterns are entirely predictable for a long period, but not purely linear, so the naive loop above makes poor use of cache lines. There are lots of choices about how to accumulate intermediate results and reuse memory reads, and the best choice depends on the sizes of the matrices involved.
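One common way to reuse memory reads is to work through the matrices in small tiles, so each block of inputs is used many times while it is still in cache. The sketch below is only an illustration of that idea, not how any particular library does it. The name `gemm_tiled`, the `tile` parameter, and the assumption of densely packed row-major matrices (rather than the general strides in the loop above) are all made up for the example.

```cpp
#include <algorithm>
#include <cstddef>

// Hypothetical sketch: C (m x n) = A (m x k) * B (k x n).
// Assumes all three matrices are row-major and densely packed,
// which is a simplification of the strided indexing in the post.
void gemm_tiled(const float* a, const float* b, float* c,
                std::size_t m, std::size_t n, std::size_t k,
                std::size_t tile = 32) {
  // Partial sums are accumulated into c across l-tiles, so start from zero.
  std::fill(c, c + m * n, 0.0f);

  for (std::size_t i0 = 0; i0 < m; i0 += tile) {
    const std::size_t i1 = std::min(i0 + tile, m);
    for (std::size_t j0 = 0; j0 < n; j0 += tile) {
      const std::size_t j1 = std::min(j0 + tile, n);
      for (std::size_t l0 = 0; l0 < k; l0 += tile) {
        const std::size_t l1 = std::min(l0 + tile, k);

        // Within one tile the same small blocks of a and b are reused
        // for every (i, j) pair before moving on.
        for (std::size_t i = i0; i < i1; ++i) {
          for (std::size_t j = j0; j < j1; ++j) {
            float total = c[i * n + j];
            for (std::size_t l = l0; l < l1; ++l) {
              total += a[i * k + l] * b[l * n + j];
            }
            c[i * n + j] = total;
          }
        }
      }
    }
  }
}
```

The best tile size depends on the cache sizes of the target CPU and on the matrix shapes, which is exactly the kind of choice that ends up being tuned by hand.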

All this means that an endangered species, hand-coding assembler experts, write all of the best implementations. GotoBLAS (which evolved into OpenBLAS) showed how much speed could be gained on Intel CPUs. Eigen has had a lot of work put into it to run well on both x86 and ARM with float, and gemmlowp is optimized for eight-bit on ARM. Even if you’re running on a GPU, Scott Gray (formerly at Nervana, now at OpenAI) has shown how much faster hand-coded solutions can be.

This is important because it means that there’s a lot of work involved in getting good performance from new platforms, and there’s often a big gap between existing highly-optimized solutions and those ported from other architectures. This is visible, for example, with gemmlowp on x86, where the hand optimization is still a work in progress, so its speed lags behind float alternatives right now.

It’s also exciting, because the real-world performance of even the most optimized libraries lags behind the theoretical limits of the hardware, so there are still opportunities to squeeze more speed out of them with some clever hacking. There are also exciting developments in fundamentally different approaches to the problem like the Winograd algorithm. The good news is that if you’re an old-school assembler hacker there’s still an important place for you in the brave new world of deep learning, so I hope we can pull you in!

Sounds like I could put my long-dormant Forth skills to work!

Assume the title here is tongue-in-cheek – everyone now knows compilers are way way better than human-hand-coded assembly. In the case of VLIW DSPs, which are the most power efficient micro-architecture today for GEMM acceleration, hand-coded assembler would utterly fail.

The TensorFlow HVX integration for a VLIW DSP uses hand-coded assembler extensively and was contributed by the manufacturer of the device:

https://source.codeaurora.org/quic/hexagon_nn/nnlib/tree/hexagon/asm_src

How wrong you are. Compilers are steadily getting better, but fail spectacularly at optimizing certain algorithms. A good example – try using your best auto-vectorizing compiler and let it try to create SIMD for a loop which has a conditional statement in the middle. Conditionals can be handled with SIMD instructions which create bit masks from the true/false results, but compilers never learned that trick. Also, VLIW machines (e.g. Movidius Myriad2) don’t have the same resources devoted to their tools due to the narrow audience. I can beat the compiler handily at writing VLIW assembly language EVERY SINGLE TIME.
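To make the mask trick mentioned above concrete, here is a minimal scalar sketch in portable C++ of what a SIMD compare-and-blend does in each lane: compute both outcomes, build an all-ones or all-zeros mask from the comparison, and select with bitwise operations instead of a branch. The function name `scale_above_threshold` and its parameters are hypothetical, and the code assumes `float` is 32 bits wide. Whether a given compiler vectorizes this form or the branching form depends on the compiler and its flags.

```cpp
#include <cstddef>
#include <cstdint>
#include <cstring>

static_assert(sizeof(float) == sizeof(std::uint32_t),
              "this sketch assumes 32-bit floats");

// Hypothetical example: out[i] = (x[i] > threshold) ? x[i] * scale : x[i],
// written without a branch in the loop body.
void scale_above_threshold(const float* x, float* out, std::size_t count,
                           float threshold, float scale) {
  for (std::size_t i = 0; i < count; ++i) {
    const float if_true = x[i] * scale;
    const float if_false = x[i];

    // All ones when the comparison holds, all zeros otherwise. A SIMD
    // compare instruction produces this kind of mask for every lane.
    const std::uint32_t mask =
        0u - static_cast<std::uint32_t>(x[i] > threshold);

    // Reinterpret the float bits as integers so they can be masked.
    std::uint32_t true_bits;
    std::uint32_t false_bits;
    std::memcpy(&true_bits, &if_true, sizeof(true_bits));
    std::memcpy(&false_bits, &if_false, sizeof(false_bits));

    // Blend: keep true_bits where the mask is set, false_bits elsewhere.
    const std::uint32_t result_bits =
        (true_bits & mask) | (false_bits & ~mask);
    std::memcpy(&out[i], &result_bits, sizeof(result_bits));
  }
}
```

A hand-written SIMD version would apply the same compare, mask, and blend steps to several elements at once using the target's vector instructions.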