AMD's FreeSync 2 displays will require low latency and HDR in 2017

Premium quality displays with HDR, LFC, and low latency will be certified by AMD.

For the past few years, there's been an ongoing war between AMD and Nvidia. No, not that war—the GPU battle goes back much further. Instead, I'm talking about the debate over G-Sync and FreeSync and the various merits of each technology. Here's the basic summary of the current technologies:

G-Sync:

- First variable refresh rate technology to market

- Requires Nvidia G-Sync module (scaler) on desktops (but not laptops)

- Nvidia works with partners to 'certify' products

- This means the displays have to meet certain standards that Nvidia sets

- Only works with Nvidia GPUs

FreeSync:

- Uses DisplayPort adaptive refresh open standard

- AMD charges no royalties or other fees

- Standard industry scalers support FreeSync

- AMD does not certify any products

- Currently only supported by AMD GPUs (but in theory could work with other GPUs)

The result of the above situation is that there are a lot more FreeSync displays on the market than there are G-Sync displays—AMD says there were 121 as of December 2016 compared to 18 G-Sync displays—and most of the FreeSync displays cost less than equivalent G-Sync offerings. But that doesn't really tell the whole story, as many of the FreeSync displays are clearly lower quality than the competing G-Sync monitors.

Like it or not, Nvidia's certification of all G-Sync displays has generally resulted in better quality, including reasonable refresh rate ranges and good performance. To be clear, there are also great FreeSync displays, but they tend to cost nearly as much as the G-Sync equivalents. Nvidia has always claimed that the higher price of G-Sync has more to do with the necessary quality of other elements than with royalties, but there's no denying the fact that G-Sync displays are expensive.

Into this two-way battle, AMD is now launching a third alternative: Radeon FreeSync 2. (The Radeon branding is becoming common these days, e.g. Radeon Instinct and Radeon Pro WX.) This will build on the current FreeSync platform, but along with new features and requirements AMD will also be certifying the displays in some fashion. Put another way, all FreeSync 2 displays are also FreeSync displays, but not all FreeSync displays will qualify to be marketed as FreeSync 2 displays.

So what makes FreeSync 2 better? It takes the best FreeSync elements, some of which are optional, and makes them mandatory.


Low Framerate Compensation (LFC) in FreeSync requires that the maximum supported variable refresh rate be at least 2.5 times the minimum supported refresh rate—so if the minimum is 30Hz, the maximum needs to be at least 75Hz, or 40-100Hz would also work. Many FreeSync displays meet the LFC requirement, but a few have more limited ranges (e.g., 48-75Hz, or 30-60Hz) and do not work with LFC. FreeSync 2 requires LFC support.
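To make the arithmetic concrete, here's a minimal sketch of the range check described above. The function name and structure are purely illustrative (this is not an AMD API), and the only rule it encodes is the 2.5x ratio quoted in this article.

```python
# Illustrative only: encodes the "max >= 2.5 x min" rule quoted above.
# supports_lfc is a hypothetical helper, not part of any AMD software.
LFC_MIN_RATIO = 2.5


def supports_lfc(min_hz: float, max_hz: float, ratio: float = LFC_MIN_RATIO) -> bool:
    """Return True if a variable refresh range is wide enough for LFC."""
    if min_hz <= 0 or max_hz < min_hz:
        raise ValueError("expected 0 < min_hz <= max_hz")
    return max_hz >= ratio * min_hz


if __name__ == "__main__":
    # The four ranges mentioned in the text.
    for lo, hi in [(30, 75), (40, 100), (48, 75), (30, 60)]:
        verdict = "LFC capable" if supports_lfc(lo, hi) else "range too narrow for LFC"
        print(f"{lo}-{hi}Hz: {verdict}")
```

Running it against the ranges above flags 30-75Hz and 40-100Hz as wide enough, while 48-75Hz and 30-60Hz fall short.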

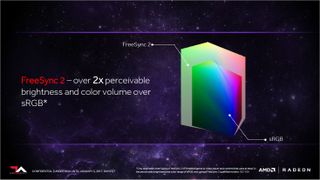

FreeSync 2 also mandates that the display be HDR capable, which will immediately eliminate the vast majority of existing display panels. HDR is the future of high quality video content, and there's broad support from video, photo, and technology companies including AMD and Nvidia. Making it mandatory for FreeSync 2 should help spur the market presence of HDR content, but it will inevitably mean the displays cost more than non-HDR models.

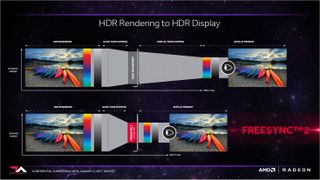

Along with HDR support, FreeSync 2 will require that displays meet certain latency (input lag) standards, though AMD didn't go into all the specifics about what this entails. There will also be a FreeSync 2 software/API element that allows games and applications that support the standard to bypass certain tone mapping work.

Currently, most games render everything in HDR internally. They then tone map the result for output on an SDR display, or for an HDR display if the game supports HDR modes. The problem is that most HDR displays then perform a second tone mapping pass on whatever the game or application sends over, which increases latency. With a FreeSync 2 application or game running on a FreeSync 2 monitor, the application will be able to query the display for its tone mapping table and use that, removing some latency.
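As a rough illustration of the difference, the sketch below compares the two paths. Everything in it is an assumption made for the example: the Reinhard-style curve, the `transport_peak` and `panel_peak` values, and the function names are hypothetical stand-ins, not AMD's API or any display's actual tone mapping table. The point is only that the direct path does one tone mapping pass where the conventional path does two.

```python
# Hypothetical sketch: a simple Reinhard-style curve stands in for whatever
# tone mapping a real game or display uses. All peak values are made up.

def tone_map(luminance: float, peak: float) -> float:
    """Compress a non-negative luminance value into the range [0, peak)."""
    return peak * luminance / (luminance + peak)


def conventional_path(scene, transport_peak, panel_peak):
    """Game maps to a generic HDR range, then the display maps again."""
    game_output = [tone_map(v, transport_peak) for v in scene]  # pass 1: in the game
    shown = [tone_map(v, panel_peak) for v in game_output]      # pass 2: in the display
    return shown, 2


def direct_path(scene, panel_peak):
    """Game already knows the panel's characteristics and maps once."""
    shown = [tone_map(v, panel_peak) for v in scene]            # single pass: in the game
    return shown, 1


if __name__ == "__main__":
    scene = [0.5, 100.0, 4000.0]  # internal HDR luminance, arbitrary units
    for name, (values, passes) in {
        "conventional": conventional_path(scene, transport_peak=1000.0, panel_peak=600.0),
        "direct": direct_path(scene, panel_peak=600.0),
    }.items():
        print(name, "passes:", passes, "output:", [round(v, 1) for v in values])
```

Both paths keep the output within the panel's range, but the direct path skips the display-side pass, which is where the latency saving described above would come from.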

Getting back to the latency certification, it appears AMD's latency requirement is only for FreeSync 2 applications, meaning AMD will test that the displays are below some average input lag value in that mode. I'd like for this to be tested on standard SDR content as well, to ensure that all inputs are free from serious lag, but that's probably beyond the scope of AMD's intent. Hopefully the display vendors will keep in mind that the vast majority of current content is still SDR and work to keep input lag low in other modes.

Putting it all together, here's…

FreeSync 2:

- Uses DisplayPort adaptive refresh open standard

- Low Framerate Compensation is required

- Some form of HDR must be present (but not necessarily HDR10)

- Scalers and processing must meet latency requirements (with HDR)

- AMD charges no royalties or other fees

- AMD certifies all FreeSync 2 products (unclear if this is 'free')

- Currently only supported by AMD GPUs

Obviously, the FreeSync 2 requirements are higher than standard FreeSync. There's no explicit requirement that the displays will cost more, but it's inherent in the quality standards AMD intends to enforce. Combine HDR panels with lower latency and a wider range of refresh rates, not to mention the 'premium' nature of FreeSync 2, and I wouldn't be surprised to see the first FreeSync 2 displays cost substantially more than the current FreeSync monitors. As with all things, prices will trend downward over time, but it could take years before FreeSync 2 becomes anything near ubiquitous.

Taking the larger view, FreeSync 2 feels destined to become AMD's true alternative to G-Sync: provably better than generic displays, but with far fewer models to choose from and higher prices. That's not necessarily good or bad, but given that AMD touts the existence of substantially more FreeSync displays than G-Sync displays, I expect that in two years we may find that the number of FreeSync 2 monitors is roughly equal to the number of G-Sync displays.

I've often said that displays are the component that gets upgraded most infrequently. If you're hanging onto an older display and are thinking it might finally be time to retire the old and get something new, HDR is one of the few items that would entice me to upgrade—higher refresh rates being the other. With 4K HDR 120-144Hz displays coming out this year (and both FreeSync/FreeSync 2 and G-Sync models planned), it could be a good time to invest in a monitor that will take you through the next decade. Too bad you still need to decide whether to buy into the AMD or Nvidia ecosystem.

AMD's complete slide deck for FreeSync 2 shows several monitors coming this year.

Jarred's love of computers dates back to the dark ages when his dad brought home a DOS 2.3 PC and he left his C-64 behind. He eventually built his first custom PC in 1990 with a 286 12MHz, only to discover it was already woefully outdated when Wing Commander was released a few months later. He holds a BS in Computer Science from Brigham Young University and has been working as a tech journalist since 2004, writing for AnandTech, Maximum PC, and PC Gamer. From the first S3 Virge '3D decelerators' to today's GPUs, Jarred keeps up with all the latest graphics trends and is the one to ask about game performance.
