Popular apps like Pokémon GO and Snapchat have brought the term “augmented reality” into the spotlight. In July 2016, Niantic, the developer of Pokémon GO (and of the augmented-reality game Ingress), reported revenue of $10 million per day from Pokémon GO alone, showing that augmented-reality features can succeed in a mainstream market. This newly sparked interest has led to the term being applied liberally to many technologies, including some that do not really qualify, such as a recorded hologram of Michael Jackson performing for a live audience.

Definition: Augmented reality (AR) refers to technology that incorporates real-time inputs from the existing world to create an output that combines both real-world data and some programmed, interactive elements which operate on those real-world inputs.

In order to qualify as “augmented reality,” the technology must:

- Respond contextually to new external information and account for changes to users’ environments

- Interpret gestures and actions in real time, with minimal to no explicit commands from users

- Be presented in a way that does not restrict users’ movements in their environment

Thus, the hologram of Michael Jackson superimposed on a live show in which real dancers move in sync to the singer’s actions is not augmented reality. The hologram does not respond to any real-world inputs and is essentially a static contraption; in fact, the real world (represented by the dancers) augments the hologram, and not vice versa.

Augmented reality is an enhancement of the real world that responds dynamically to changes in it. It is distinct from virtual reality (VR), which isolates users and shows them a fully simulated environment composed mostly of fabricated elements. (Typical VR examples include science-fiction games or a walkthrough of a gigantic model of the human heart.) However, virtual and augmented reality share a real-time, contextual response to users’ actions and interactions with the environment.

Examples

The concept of augmented reality is not a new one. A frequently overlooked yet widespread example of augmented reality that has existed for quite a while is the automobile parking-assistance system. In these systems, the vehicle’s computer calculates the vehicle’s distance from surrounding obstacles and, based on the steering wheel’s position, determines the vehicle’s trajectory. The computer then augments this external input, either by playing an audible tone whose intensity and frequency change as the distance decreases, or by overlaying symbols of obstacle proximity and trajectory onto the rear-camera video feed.

Source: PriusChat.com

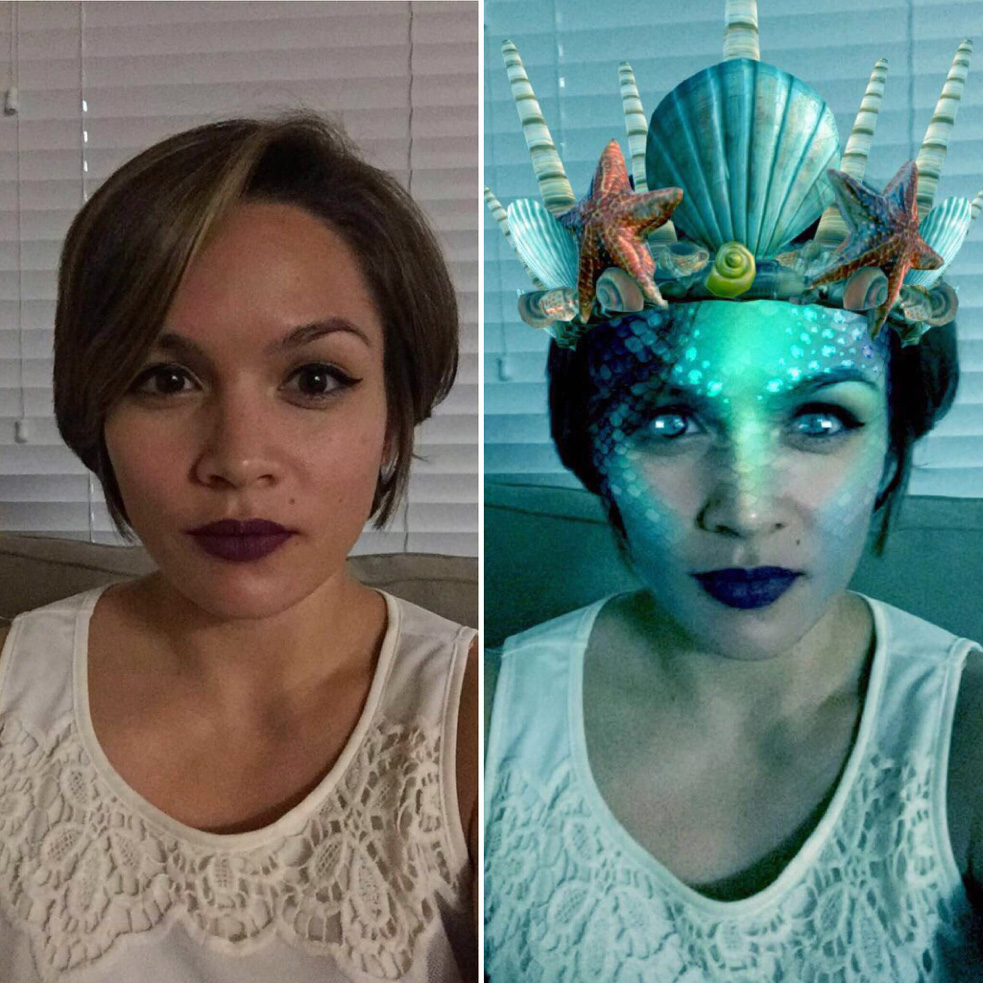

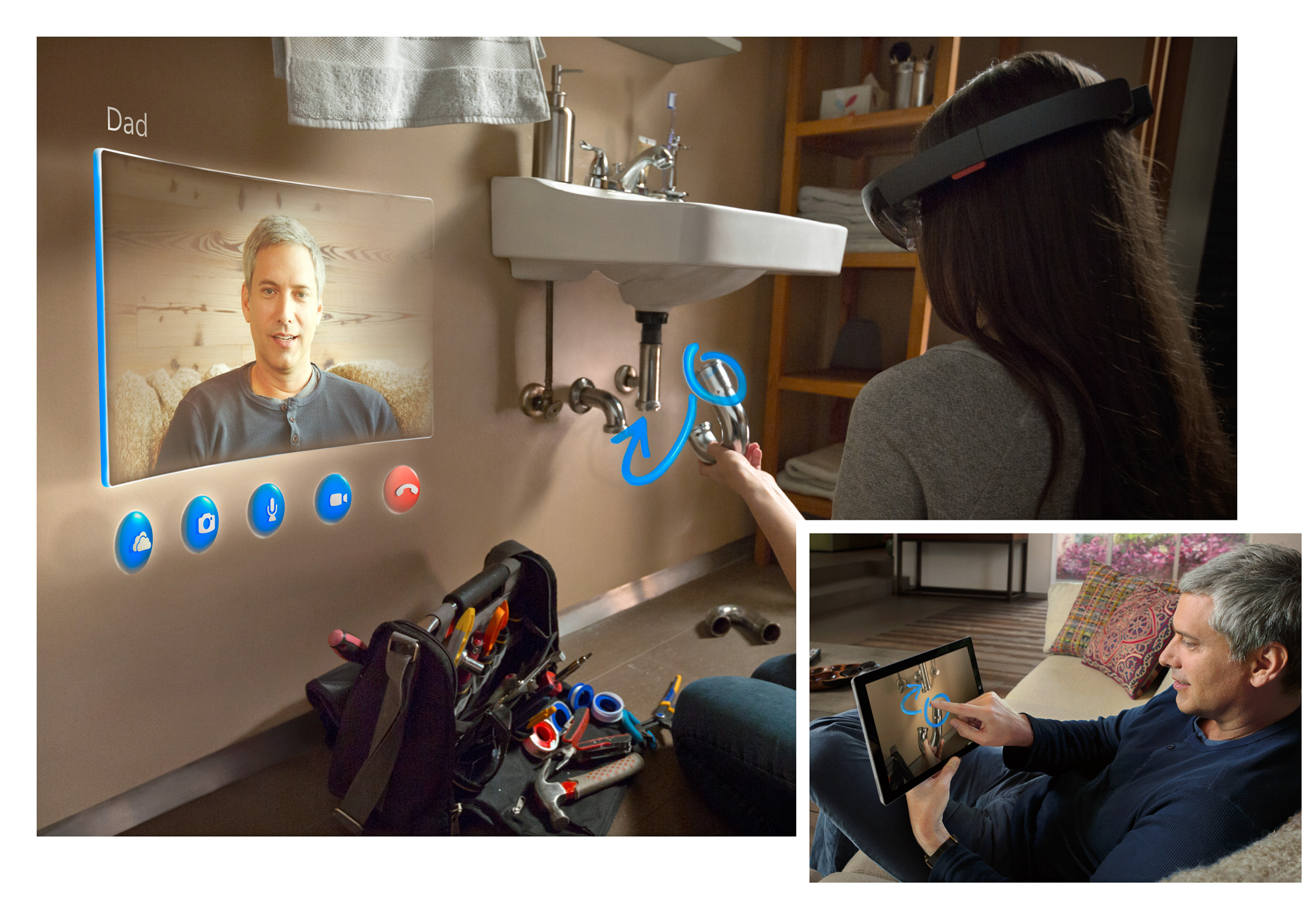
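The parking-assistance behavior described above can be sketched in a few lines. This is a minimal illustration under stated assumptions, not any manufacturer’s implementation: the distance thresholds, the 1.0 s to 0.1 s beep-interval range, and the names `SensorReading`, `beep_interval_s`, and `turning_radius_m` are all hypothetical. The trajectory part uses a simplified kinematic bicycle model (turning radius = wheelbase / tan(road-wheel angle)) and takes the road-wheel angle directly instead of converting from the steering-wheel angle.

```python
import math
from dataclasses import dataclass
from typing import Optional

# Hypothetical tuning values; real systems use manufacturer-specific thresholds.
MAX_RANGE_M = 1.5         # beyond this distance, stay silent
CONTINUOUS_TONE_M = 0.3   # at or below this distance, sound a continuous tone
SLOWEST_INTERVAL_S = 1.0  # pause between beeps at maximum range
FASTEST_INTERVAL_S = 0.1  # pause between beeps just outside continuous-tone range


@dataclass
class SensorReading:
    distance_m: float  # distance to the nearest obstacle, from the bumper sensors
    in_reverse: bool   # current gear state


def beep_interval_s(reading: SensorReading) -> Optional[float]:
    """Seconds between beeps; 0.0 means a continuous tone, None means silence."""
    if not reading.in_reverse or reading.distance_m > MAX_RANGE_M:
        return None
    if reading.distance_m <= CONTINUOUS_TONE_M:
        return 0.0
    # Map distance linearly onto the interval range: closer obstacle, faster beeps.
    fraction = (reading.distance_m - CONTINUOUS_TONE_M) / (MAX_RANGE_M - CONTINUOUS_TONE_M)
    interval = FASTEST_INTERVAL_S + (SLOWEST_INTERVAL_S - FASTEST_INTERVAL_S) * fraction
    return round(interval, 3)


def turning_radius_m(wheelbase_m: float, wheel_angle_deg: float) -> float:
    """Kinematic bicycle model: radius = wheelbase / tan(road-wheel angle)."""
    if wheel_angle_deg == 0:
        return math.inf  # wheels straight: the predicted path is a straight line
    return wheelbase_m / math.tan(math.radians(abs(wheel_angle_deg)))
```

For example, `beep_interval_s(SensorReading(distance_m=0.9, in_reverse=True))` returns `0.55`, while the same reading with `in_reverse=False` returns `None`. The driver never asks for this feedback; the system derives it from the gear state and sensor input alone.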

Here are a few other examples of augmented-reality systems:

Why Is Augmented Reality Important for UX?

Augmented-reality interfaces are an example of noncommand user interfaces in which tasks are accomplished using contextual information collected by the computer system, and not through commands explicitly provided by the user. To be able to interpret the current context and “augment” the reality, an “agent” runs in the background to analyze the many external inputs and act on them, or provide actionable information.

For example, Waverly Labs’ earpiece called “The Pilot” actively “listens” for another language in order to translate it into English (or the user’s language of choice) in real time. The user does not need to tell the earpiece to listen every time a nearby person speaks; instead, the earpiece “agent” constantly interprets the real-world auditory input and starts translating based on the context of the situation. Other apps, such as Ingress, display an associated “portal” as soon as users approach a landmark. Similarly, the parking-assistance system does not require any additional input or commands from the user; it offers actionable information based on the vehicle’s current state (reverse gear) and position relative to surrounding obstacles.
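The noncommand pattern behind a landmark-triggered overlay, such as the Ingress portals mentioned above, can be sketched as a background agent that reacts to every location update. This is a hypothetical illustration, not Niantic’s code: `ProximityAgent`, the `Landmark` type, and the 40 m trigger radius are assumptions, and distance is computed with the standard haversine formula on a spherical Earth.

```python
from __future__ import annotations

import math
from dataclasses import dataclass

EARTH_RADIUS_M = 6_371_000  # mean Earth radius (spherical approximation)


@dataclass(frozen=True)
class Landmark:
    name: str
    lat: float  # degrees
    lon: float  # degrees


def haversine_m(lat1: float, lon1: float, lat2: float, lon2: float) -> float:
    """Great-circle distance in metres between two (lat, lon) points in degrees."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = math.radians(lat2 - lat1)
    dlmb = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlmb / 2) ** 2
    return 2 * EARTH_RADIUS_M * math.asin(min(1.0, math.sqrt(a)))


class ProximityAgent:
    """Hypothetical background agent: acts on context, never on user commands."""

    def __init__(self, landmarks: list[Landmark], trigger_radius_m: float = 40.0):
        self.landmarks = landmarks
        self.trigger_radius_m = trigger_radius_m
        self.active = set()  # names of landmarks whose overlay is currently shown

    def on_location(self, lat: float, lon: float) -> list[str]:
        """Called on every location update; returns overlay events to render."""
        events = []
        for lm in self.landmarks:
            near = haversine_m(lat, lon, lm.lat, lm.lon) <= self.trigger_radius_m
            if near and lm.name not in self.active:
                self.active.add(lm.name)
                events.append(f"show:{lm.name}")
            elif not near and lm.name in self.active:
                self.active.remove(lm.name)
                events.append(f"hide:{lm.name}")
        return events
```

The user never issues a command: walking within the trigger radius produces a `show` event, and walking away produces a `hide` event. Tracking the `active` set keeps the agent from re-announcing the same overlay on every location update.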

As a type of noncommand UI, AR interfaces provide excellent opportunities for improving user experience. To see why, consider an airplane mechanic who crawls around inside the guts of an aircraft for an inspection and needs to check how long a certain part has been in service. With a traditional screen-based user interface, the mechanic would have to somehow “save” the part number (by remembering it, taking a picture of it on a smartphone, or writing it down on a piece of paper) and then access a phone or computer-based system to determine how long that part has been in operation. But with an AR technology like HoloLens or Google Glass, the service record could be displayed right on top of the item, with few or no commands from the user.

The information overlaid on the physical world would help the mechanic check the records of any suspected part in situ, without the need for any external device or implement. The operation could be repeated quickly for any other part, allowing the mechanic to catch and diagnose other problems before they worsen or cause an accident.

In this scenario (and in many others), AR can improve the UX in three fundamental ways:

- By decreasing the interaction cost to perform a task

The mechanic in our example can remain in the current environment and have relevant data displayed right there, without taking any special action. In contrast, with a non-AR UI, the mechanic needs to take a (potentially effortful) explicit action to access the information: she would have to resort to a special device (phone or computer) and interact with it.

The lack of commands in AR interfaces makes the interaction efficient and low-effort: AR systems are proactive and take the appropriate action whenever the outside context requires it.

- By reducing the user’s cognitive load

In the absence of an AR system, the mechanic would have to remember not only how to use the smartphone or the desktop to find information about the part, but also the part number itself (unless the mechanic chose to write it down or use another form of external memory, a decision that would itself increase the interaction cost). With an AR system, the useful part information is displayed automatically, and the mechanic does not need to commit a part number to working memory or spend effort to “save” it on paper or elsewhere.

Thus, AR UIs decrease working-memory load in two ways: like any noncommand UI, they do not require users to learn commands, and they allow users to move information smoothly from one context to another.

- By combining multiple sources of information and minimizing attention switches

With a non-AR system, if the mechanic wanted to “save” the part number and use a different system to find its history, she would have to switch attention from the plane to the external source of information that provides the part’s age. With AR, the two sources of information are combined: the relevant information is displayed in an overlay on top of the part itself, so the mechanic doesn’t need to divide attention. Many complex tasks (e.g., surgery, writing a report) involve putting together multiple sources of information; some of them will benefit from AR.

Unlike this last benefit of AR interfaces, the other two — reducing the interaction cost and the cognitive load — are shared with all noncommand UIs.

Note that we assumed a well-designed user interface in our example of aircraft repair: we said that the mechanic would see “useful information” displayed next to each part. It’s easy to imagine a poorly designed system that would overwhelm the mechanic with too much information, or with a confusing display, making the necessary information hard to spot. As always, good user experience only comes from close attention to users’ needs, and any new UI technology opens up even more opportunities for careless design. We’re sure that there will be many lousy AR systems shipped in the coming years — that’s why UX professionals have long-term job security, despite changes in technology.

Conclusion

AR has seen massive success in recent years and offers an opportunity for seamless, low-effort, yet rich user interaction with the real world. As more technologies take advantage of this growing trend, the definition of augmented reality may well expand to encompass much more than it does now. But by understanding users’ goals and contexts, developers and designers will ultimately be able to create successful and effective augmented-reality experiences.