So you have this motivated, tightly controlled, highly competent non-government organization (NGO). And they implement an innovative educational experiment, using a randomized controlled trial to test it. It really seems to improve student learning. What next? You try to scale it or implement it within government systems, and it doesn’t work nearly as well. An evaluation of a nationwide contract teacher program in Kenya did not deliver the same results as earlier contract teacher evaluations in Kenya and India. A primary literacy program in Uganda was effective on various learning outcomes when implemented by NGO workers, but when those workers were replaced by government officials, the range of effective outcomes narrowed. In the latest version of my review of reviews of what works to improve learning outcomes (with Popova and coming soon from the WBRO), we see that only 3 out of 20 evaluated teacher training interventions were implemented by governments.

Don’t get me wrong: More and more impact evaluations are carried out using government systems, at scale. This is a positive move. But even many of these evaluations are one-time events: A program is implemented, you test whether it works, and – best case scenario – the government uses the results to inform decisions to improve, further expand, or cut the program. Rarely do we learn how effective Intervention 2.0 is.

In my reading for the 2018 World Development Report, I came across a new paper that effectively demonstrates both (1) the process of testing, diagnosing challenges, retesting, and achieving success; and (2) the specific challenges faced – and solutions to be found – when integrating a new model into government schools: Mainstreaming an Effective Intervention: Evidence from Randomized Evaluations of “Teaching at the Right Level” in India, by Banerjee et al.

The initial evidence: Several recent studies have demonstrated that introducing pedagogy that helps instructors teach to whatever level students are at – rather than clinging to an overambitious government curriculum – can lead to substantial learning gains. This can happen through remedial education provided by community teachers (in India and in Ghana), computer-assisted learning that helps children at their level (one and two), and grouping children by ability (in Kenya). Recent reviews (one and two) see this as one of the key takeaways from the impact evaluation literature in education.

Much of the evidence above comes from the work of Indian NGO Pratham, in partnership with researchers from J-PAL. Pratham’s approach centers on two principal components: (1) Group children by learning level, and (2) teach children at that level, using effective materials. (Principal components joke redacted.)

One more recent entry in this literature also comes from Pratham: Lightly trained but carefully monitored volunteers provided basic reading classes outside of school over 2-3 months, leading to sizeable, significant reading gains in treatment villages relative to control villages. But take-up was low for this intervention: Among students with the lowest learning levels, fewer than 20 percent participated. Getting the intervention mainstreamed into government classes could significantly increase its reach. The effort to accomplish this required multiple attempts, with multiple RCTs to test effectiveness.

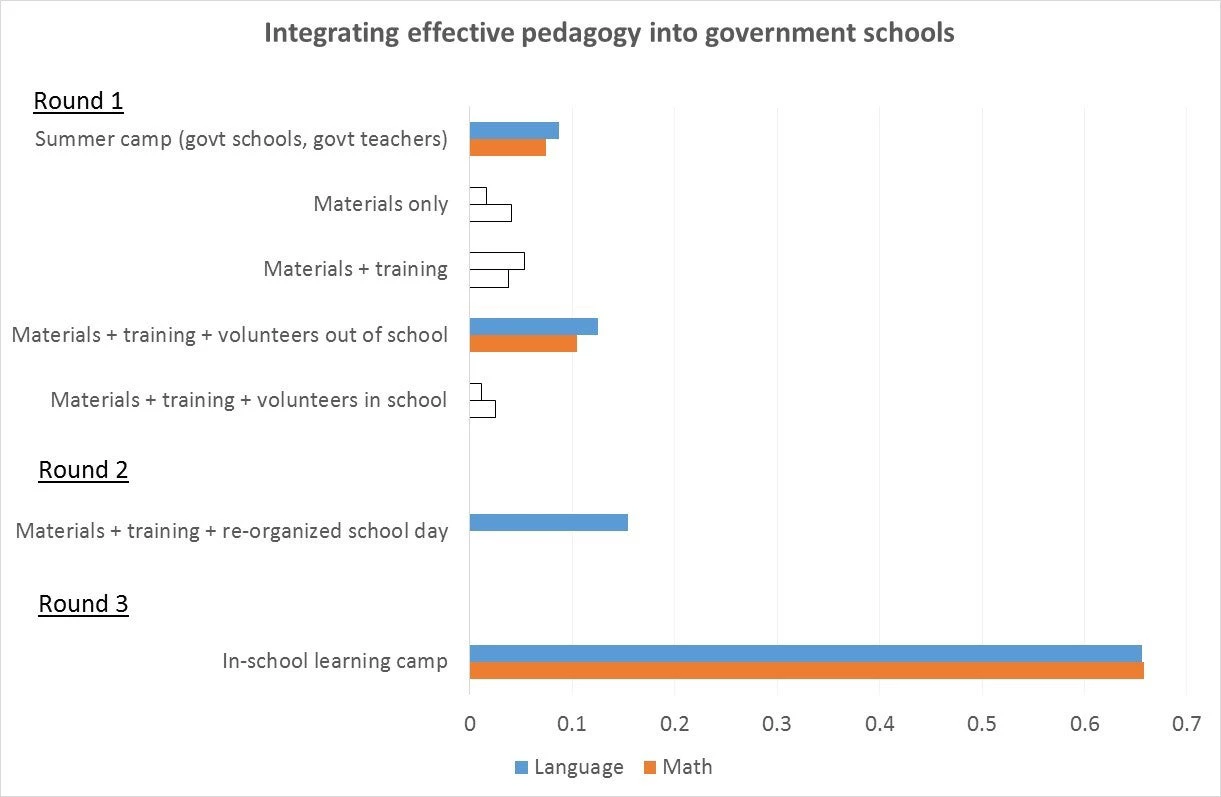

Getting government teachers involved – Round 1: The first phase of testing involved four interventions. First, there were one-month summer reading camps for weak readers in Grades 1-5, “conducted in school buildings by government school teachers” but with Pratham materials, basic training, and volunteers to provide support. Second, during the school year, some schools simply received Pratham materials with no training or support. Third, some schools received the materials plus training (to reorganize the class around student abilities and then teach to those abilities using the materials) and monitoring. Fourth, some schools received all that plus volunteers who were supposed to help the students who were weak in arithmetic. In practice, how the volunteers were used varied across two intervention states: In one state, the volunteers mostly worked outside of school hours, whereas in the other, they worked at the school during school hours.

What happened? The summer camp led to gains in both language and math (0.07-0.09 standard deviations). The materials intervention did nothing. (Not a big surprise, but wouldn’t it have been awesome if it had worked?) The materials plus training intervention did nothing. The materials plus training plus volunteers led to gains in the state where volunteers worked outside of school (0.11-0.13 standard deviations) but had no impact when the volunteers were in the school. In practice, it seems that when the volunteers were working in school, the teachers co-opted them as teaching assistants in using traditional methods (no grouping by student ability) for the government curriculum (over the heads of many students).

So essentially, while it was possible to use government teachers (in the summer camp), efforts to integrate the Pratham methods into the actual school day proved ineffective.

Getting government teachers involved – Round 2: A couple of years later, in another state, Pratham gave it another shot, with two new features. First, they really sought to signal government support: They provided training to pre-existing school supervisors to implement the methodology, reorganizing classes by ability and instructing at appropriate levels. Then, those supervisors went to program schools and tried out the method for 15-20 days. They then trained the teachers in their area. Second, the state lengthened the school day by one hour and – in program schools – that hour was used for reorganizing students by their level of ability in Hindi, independent of whether they were in Grade 3, 4, or 5, and then instructing using Pratham materials. So not only was there clear government support, but there was a distinct time set aside for the Pratham methodology; teachers didn’t have to figure out how to integrate the Pratham materials into the government curriculum.

What happened? Student language scores increased by 0.15 standard deviations. (No effect on math, but there was no math instruction, so that seems fair.) So it was possible to integrate this program in government schools, but it required not only government buy-in but also a reorganization that made implementation significantly easier for teachers.

Getting government schools involved – Round 3: The following year, Pratham took the program to another state with a weaker education system, including higher rates of teacher absenteeism. So the objective was to use volunteers but within government schools. But wait: In Round 1, the volunteers just got co-opted by teachers. So this time, the volunteers essentially took over the schools for 40 days during the school year (plus a 10-day booster during the summer) to offer “bursts” of Pratham instruction. Even though government teachers weren’t implementing the program, the use of government schools allowed an expansive reach. The results were striking: Language and math scores increased by 0.61-0.70 standard deviations.

Source: Adapted from Table 3 of Banerjee et al. (2016), with weighted averages (by number of students) of “Materials + training” and “in-school learning camps.” Color indicates statistical significance.
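For readers curious about what "weighted averages (by number of students)" means in the source note, here is a minimal sketch of one way such a pooled number could be computed. The function name and the input values are hypothetical placeholders for illustration only; they are not figures from Banerjee et al. (2016), and this is not necessarily the exact calculation behind the figure.

```python
def weighted_average(effects, n_students):
    """Pool effect sizes, weighting each one by its number of students."""
    if len(effects) != len(n_students):
        raise ValueError("effects and n_students must have the same length")
    total_students = sum(n_students)
    if total_students <= 0:
        raise ValueError("total number of students must be positive")
    weighted_sum = sum(e * n for e, n in zip(effects, n_students))
    return weighted_sum / total_students


# Hypothetical placeholder inputs (NOT values from the paper):
# two estimates of an effect, in standard deviations, with their sample sizes.
example_effects = [0.10, 0.20]
example_students = [1000, 3000]

pooled = weighted_average(example_effects, example_students)
print(round(pooled, 3))  # 0.175
```

The larger sample pulls the pooled estimate toward its own effect size, which is why the result sits closer to 0.20 than to 0.10.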

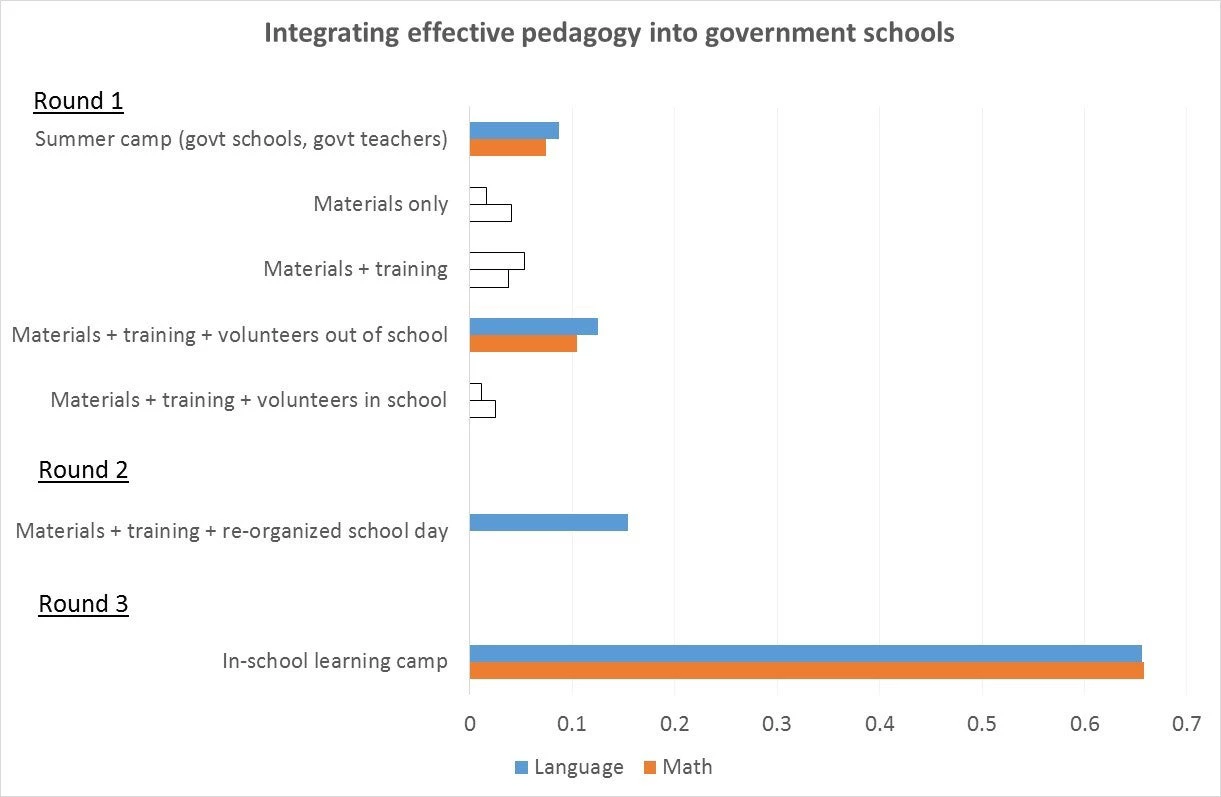


Conclusions

This work provides further evidence for pedagogical interventions that help teachers – whether government or volunteer – to teach students at their current level of learning, or “teaching at the right level” (as J-PAL labels it).

But it also demonstrates that integration of effective interventions into government systems can be possible – sometimes completely, sometimes partially – even if it doesn’t work on the first round. This provides a useful model of iterative learning about institutions, beyond the technical intervention.

Deaton and Cartwright recently wrote that “Technical knowledge, though always worth having, requires suitable institutions if it is to do any good.” This work shows that part of having suitable institutions is making the effort to learn to work with the institutions that are in place.
