Note: As the ecosystem has developed a lot over the last few years, we moved our user services away from fleet into Kubernetes running on a lightweight underlying fleet layer. However, with recent developments in Kubernetes and lightweight container engines like rkt, we revisited our decision and decided to also migrate our base layer to Kubernetes and thus won’t be using fleet anymore in the future.

Giant Swarm offers a microservices infrastructure on which developers can run their containerized apps. This infrastructure uses multiple open source tools, incl. systemd, CoreOS, etcd, Docker, and many more. However, there is an underlying tool which runs all the infrastructure components and allows us to deploy and manage user applications across our cluster.

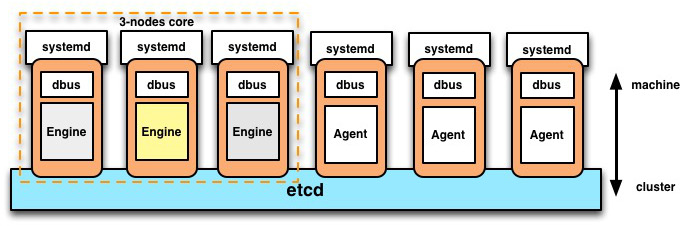

This open source tool is called fleet. Fleet is a distributed init system that manages systemd units in a cluster. In other words, fleet is a distributed systemd scheduler that relies on dbus and etcd to coordinate the state of the systemd units in a cluster. The main building blocks of the fleet architecture are the engine (master) and the agents (workers). In this architecture, fleet agents and engines use dbus to talk to systemd to ensure the desired state of the systemd units on each node. The fleet engine is in charge of decision making, scheduling, and enforcing the correct state of the cluster.

As shown in the figure above, etcd is used as the datastore and coordination mechanism that keeps the nodes aware of the cluster’s state. The figure also shows a fleet cluster composed of three nodes acting as core members (masters) and another group of three nodes acting as workers.
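The engine's scheduling role described above can be illustrated with a minimal sketch. It assumes a least-loaded placement policy, which is an assumption made for illustration and is not stated in this post. The `Agent` class and the `schedule` function are hypothetical names, not part of fleet's API.

```python
from dataclasses import dataclass, field


@dataclass
class Agent:
    """A worker node that runs systemd units (hypothetical model)."""
    name: str
    units: list = field(default_factory=list)


def schedule(unit, agents):
    """Place a unit on the agent that currently runs the fewest units.

    Assumption: least-loaded placement. Ties are broken by agent name
    so that the outcome is deterministic.
    """
    target = min(agents, key=lambda a: (len(a.units), a.name))
    target.units.append(unit)
    return target


# Three workers, as in the figure; unit names are made up for the example.
agents = [Agent("worker-1"), Agent("worker-2"), Agent("worker-3")]
for unit in ["app-a.service", "app-b.service", "app-c.service", "app-d.service"]:
    schedule(unit, agents)

for agent in agents:
    print(agent.name, agent.units)
```

In this sketch the engine is the only component that decides placement, while the agents only hold the units assigned to them, which mirrors the master/worker split described above.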

At Giant Swarm we use fleet as the init system for our core infrastructure. We decided to use fleet because we loved its robustness, its intuitive app definitions, and its main functionality of enabling us to manage systemd units in a cluster. Usually our fleet clusters aren’t composed of many nodes (<50), but they are expected to handle a non-trivial (at this time) number of units (>3000). The latter is true especially in our first generation infrastructure, where we run user apps with fleet. In that early generation of our infrastructure, the user apps are deployed as systemd unit chains.

As a consequence, our fleet cluster has to handle thousands of units while offering good performance and scaling on demand. However, we encountered many problems with fleet that turned out to be limitations of its current implementation:

list-units: fleet agents don’t always get the global picture of the cluster’s state. Testing in our infrastructure showed that a high workload on etcd induces poor or wrong behavior in fleet. In addition, we believe that using etcd to provide inter-process communication for the agents could create bottlenecks and impact performance negatively.

As a consequence, we started to investigate how to reduce the dependencies on etcd. We aimed to mitigate the scalability issues of fleet, to improve the failure recovery mechanism, and to obtain better throughput (units/sec) and capacity for the fleet cluster.

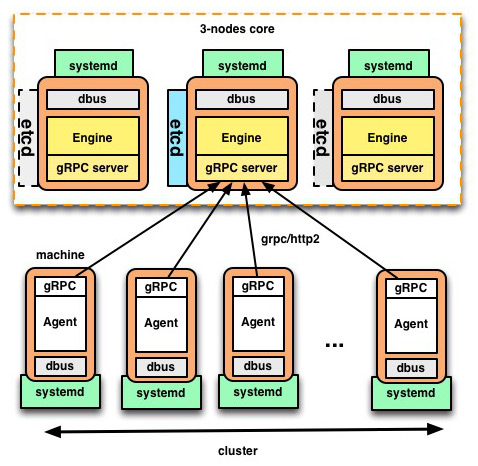

gRPC appeared as a technology that could help us reduce the friction with etcd while providing good performance for coordinating agents with engines. In our modification, gRPC is the new communication layer between the engine and the agents. We used gRPC/HTTP2 as a framework to expose all the required operations (schedule unit, destroy unit, save state, etc.) that allow the main engine (the fleet node elected as leader) to coordinate with the agents. Therefore, we don’t need to use etcd in each node of the cluster to know what to do or to gather the state of the cluster. Agents talk to the engine over gRPC to get the state of the cluster or the tasks to be carried out. With this new implementation, we also provide a new registry that stores all this information in memory, so the engine doesn’t need to interact with etcd to know how to coordinate the cluster. Etcd is now used only to store persistent data, not transient data as was the case until now.
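To make the idea of an engine-side in-memory registry more concrete, here is a minimal sketch. It is not fleet's actual implementation: the class and method names are hypothetical, chosen to mirror the operations listed above (schedule unit, destroy unit, save state), and plain method calls stand in for the gRPC calls an agent would make to the engine.

```python
import threading


class InMemoryRegistry:
    """Hypothetical engine-side registry that keeps transient state in memory."""

    def __init__(self):
        self._lock = threading.Lock()   # agents may call in concurrently
        self._scheduled = {}            # unit name -> agent name
        self._states = {}               # unit name -> last reported state

    def schedule_unit(self, unit, agent):
        """Engine decision: assign a unit to an agent."""
        with self._lock:
            self._scheduled[unit] = agent

    def destroy_unit(self, unit):
        """Remove a unit and any state reported for it."""
        with self._lock:
            self._scheduled.pop(unit, None)
            self._states.pop(unit, None)

    def save_state(self, unit, state):
        """Agent report: record the state of a unit that is still scheduled."""
        with self._lock:
            if unit in self._scheduled:
                self._states[unit] = state

    def units_for(self, agent):
        """What an agent would ask the engine: which units should I run?"""
        with self._lock:
            return sorted(u for u, a in self._scheduled.items() if a == agent)

    def cluster_state(self):
        """Snapshot of all reported unit states."""
        with self._lock:
            return dict(self._states)


registry = InMemoryRegistry()
registry.schedule_unit("app-a.service", "worker-1")
registry.schedule_unit("app-b.service", "worker-2")
registry.save_state("app-a.service", "active")
registry.destroy_unit("app-b.service")
print(registry.units_for("worker-1"), registry.cluster_state())
```

The point of the sketch is that none of these operations touch etcd: the transient coordination data lives in the leader's memory, and only data that must survive restarts would need a persistent store.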

Further, we maintained backwards compatibility: it is still possible to use etcd for coordination instead of the in-memory registry. All these changes have been merged upstream in the CoreOS fleet repository.

Our new implementation offers a multiplexing layer on top of the former etcd registry used for coordination. This means:

This new implementation has been running in our production cluster for quite a while already. We ran experiments in our cluster to validate it.

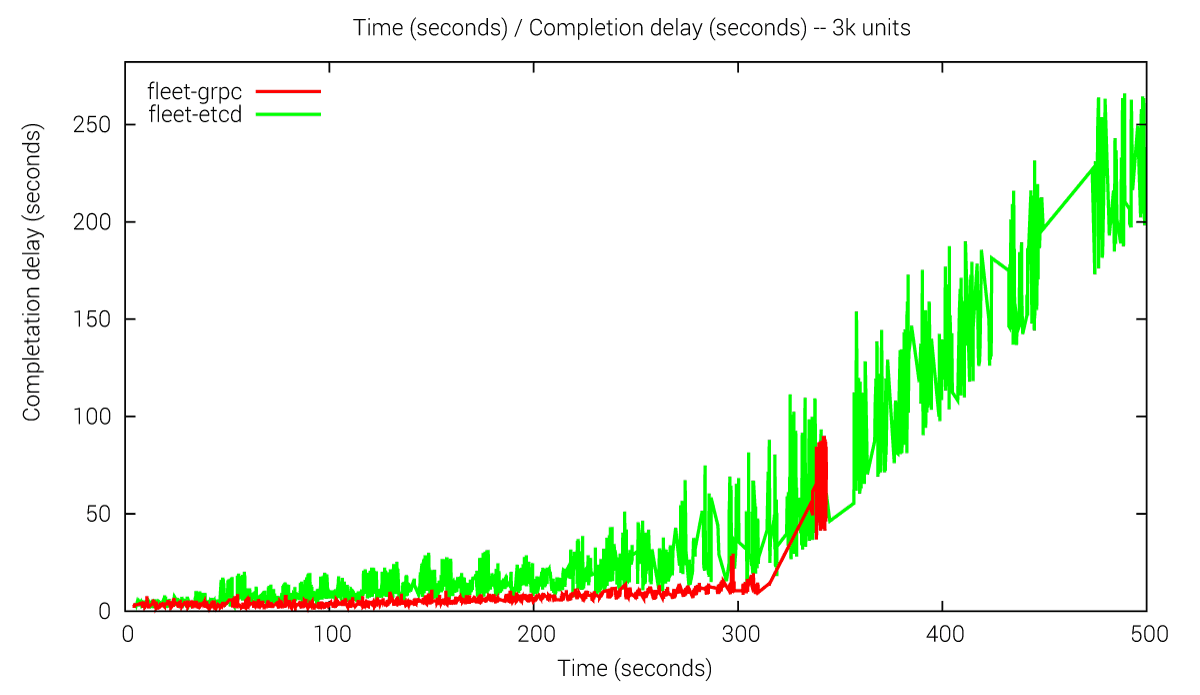

The benchmarks ran on a CoreOS cluster with 3 machines as engines and 4 machines as agents. To highlight the improvements, we ran two experiments to evaluate the performance and scalability of the fleet-grpc approach versus the former fleet-etcd one.

fleet-etcd: about 75% of units started within 1.729 to 28.14 seconds. The following histogram shows the distribution of the completion time to schedule the units.

1.729-28.14 75.5% █████████████████████ 2266

28.14-54.56 6.2% ██ 186

54.56-80.97 2.3% ▋ 69

80.97-107.4 2.43% ▋ 73

107.4-133.8 3.57% █ 107

133.8-160.2 3.53% █ 106

160.2-186.6 2.2% ▋ 66

186.6-213.1 2.17% ▋ 65

213.1-239.5 1.37% ▍ 41

239.5-265.9 0.7% ▏ 21

fleet-grpc: about 84% of units started within 1.004 to 9.907 seconds. The following histogram shows the distribution of the completion time to schedule the units.

1.004-9.907 84.1% █████████████████████ 2522

9.907-18.81 13.6% ████ 407

18.81-27.71 0.0333% ▏ 1

27.71-36.62 0.0667% ▏ 2

36.62-45.52 0.3% ▏ 9

45.52-54.42 0.4% ▏ 12

54.42-63.32 0.433% ▏ 13

63.32-72.23 0.3% ▏ 9

72.23-81.13 0.333% ▏ 10

81.13-90.03 0.5% ▏ 15
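The two histograms use different bin widths, so a direct comparison is easier at a common cutoff. The sketch below recomputes the share of units started within 28.14 seconds (the upper bound of the first fleet-etcd bin) from the unit counts shown above. The helper name `share_started_within` is made up for this example, and the fleet-grpc figure only counts bins that end at or before the cutoff.

```python
# (upper bound of the bin in seconds, number of units), copied from the histograms above
fleet_etcd = [(28.14, 2266), (54.56, 186), (80.97, 69), (107.4, 73), (133.8, 107),
              (160.2, 106), (186.6, 66), (213.1, 65), (239.5, 41), (265.9, 21)]
fleet_grpc = [(9.907, 2522), (18.81, 407), (27.71, 1), (36.62, 2), (45.52, 9),
              (54.42, 12), (63.32, 13), (72.23, 9), (81.13, 10), (90.03, 15)]


def share_started_within(bins, limit_secs):
    """Percentage of units in bins whose upper bound is at most limit_secs."""
    total = sum(count for _, count in bins)
    started = sum(count for upper, count in bins if upper <= limit_secs)
    return 100.0 * started / total


etcd_share = share_started_within(fleet_etcd, 28.14)
grpc_share = share_started_within(fleet_grpc, 28.14)
print(f"fleet-etcd: {etcd_share:.1f}% of units started within 28.14 s")
print(f"fleet-grpc: {grpc_share:.1f}% of units started within 28.14 s")
```

Both runs contain 3000 units. At this cutoff the script reports 75.5% for fleet-etcd and 97.7% for fleet-grpc.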

We observe performance issues with the old version when it has to handle, for example, 3k units. The gRPC approach schedules the units much faster than fleet-etcd. Moreover, we can see that the completion time of fleet-etcd keeps growing, which indicates the scalability issues of that approach.

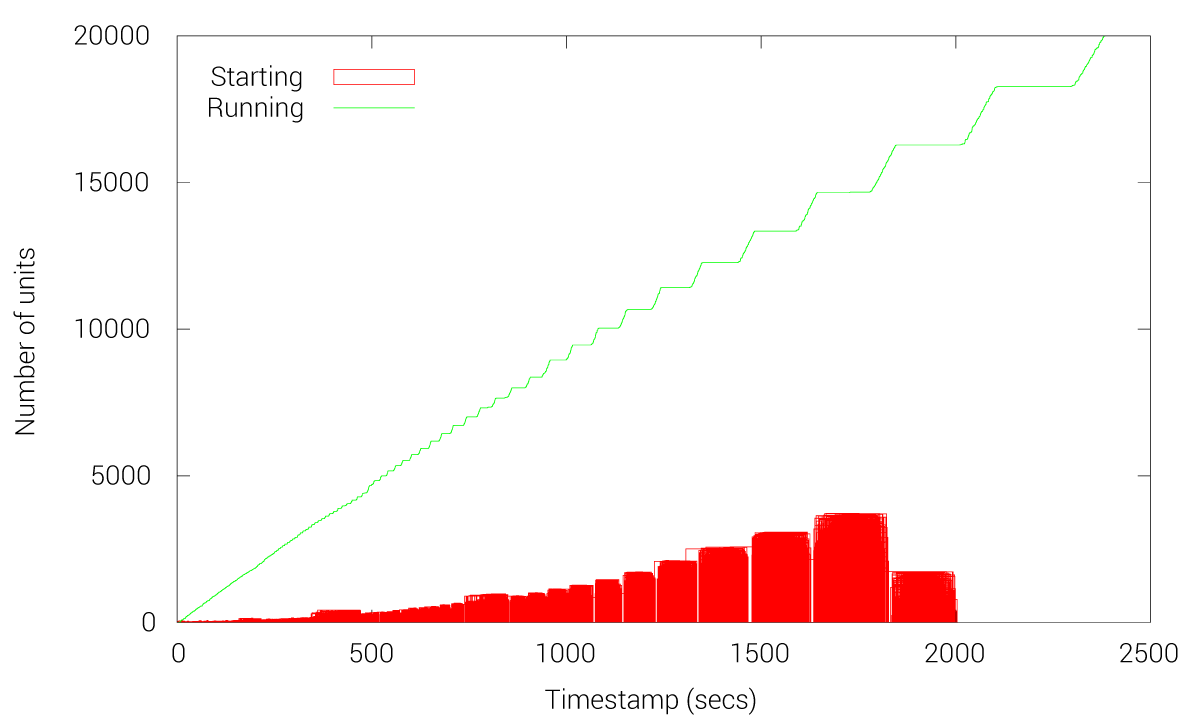

Fleet’s threshold in terms of the number of units it can handle:

However, we can see that the time to run 20k units grows linearly due to other fleet issues, such as an expensive reconciliation loop and cluster state recalculations (operations to periodically learn the state of the cluster). This shows the limits of the new fleet approach, but it also exposes a huge benefit: 20k units can be handled with this new implementation. As mentioned before, with fleet-etcd we cannot handle more than 3k units without starting to experience multiple issues.

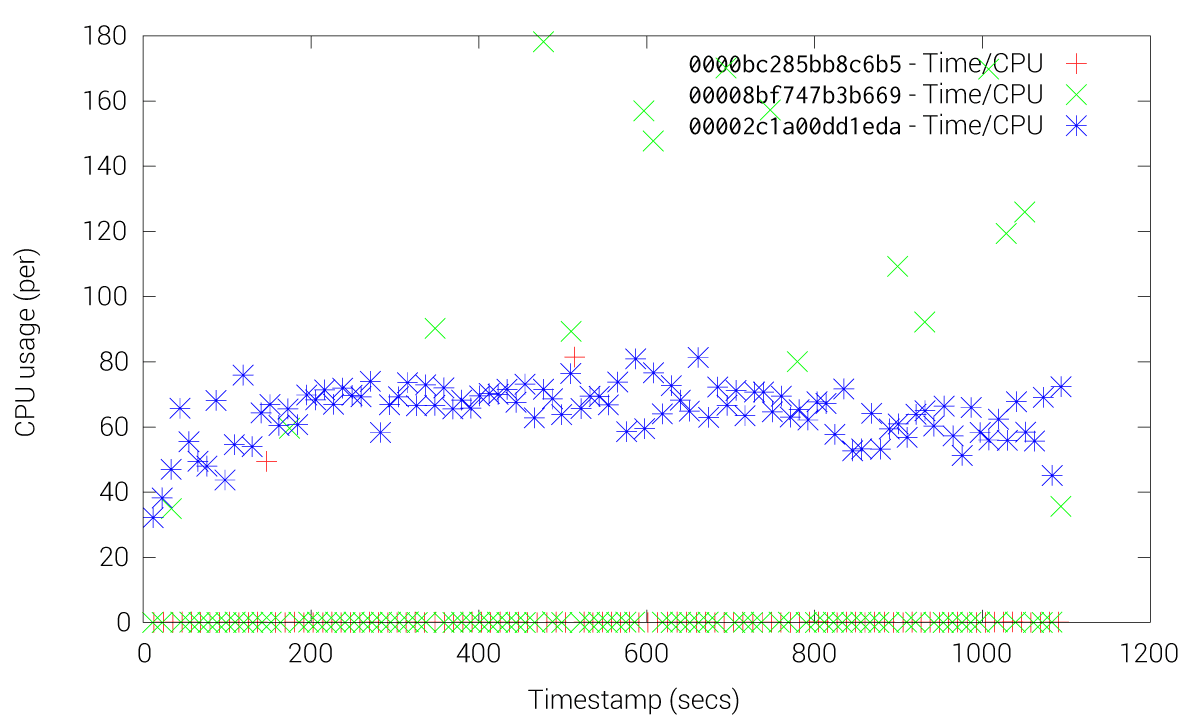

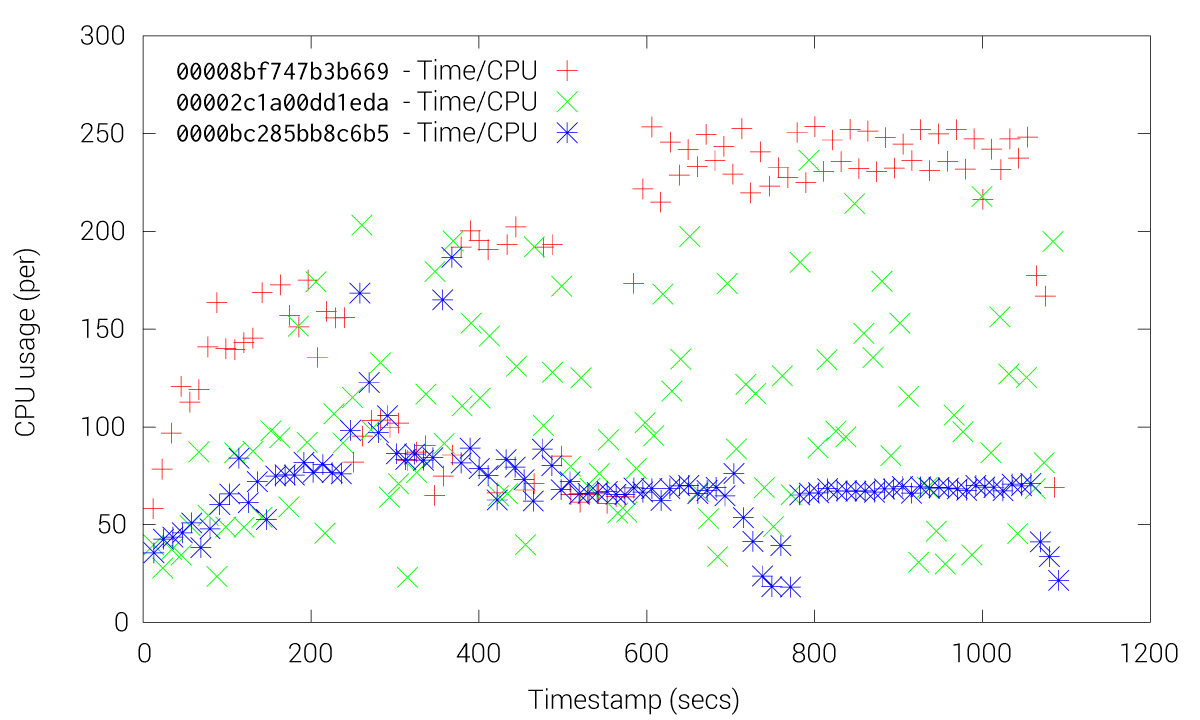

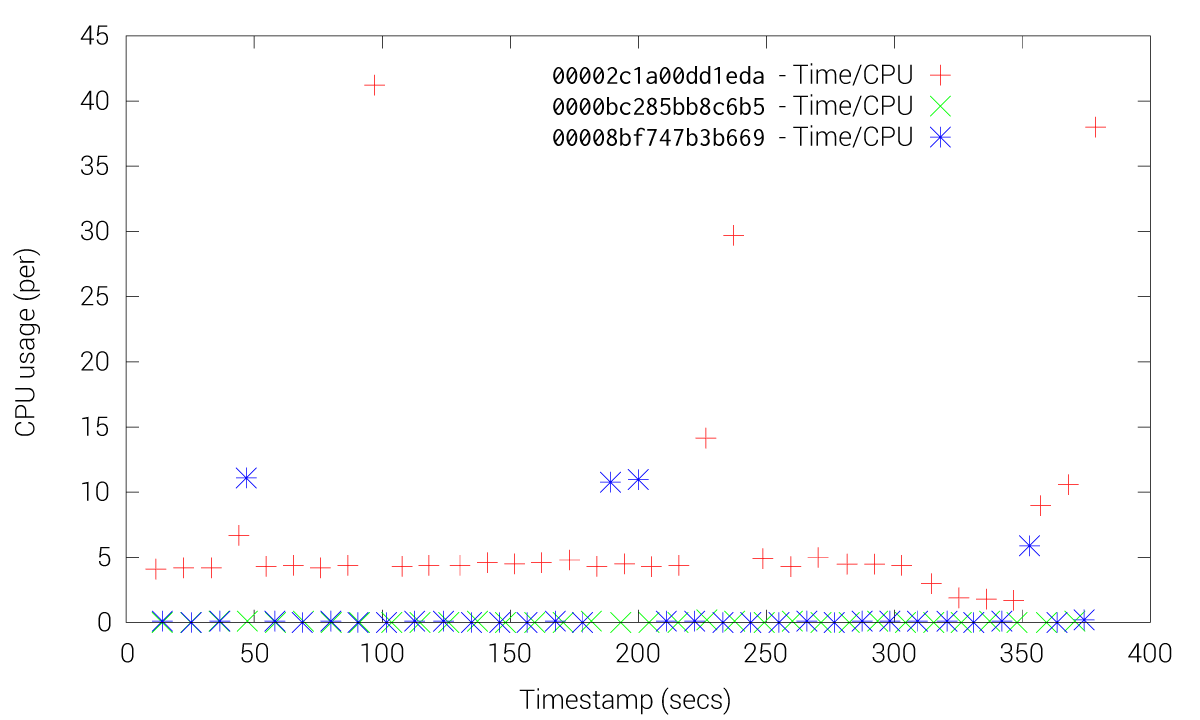

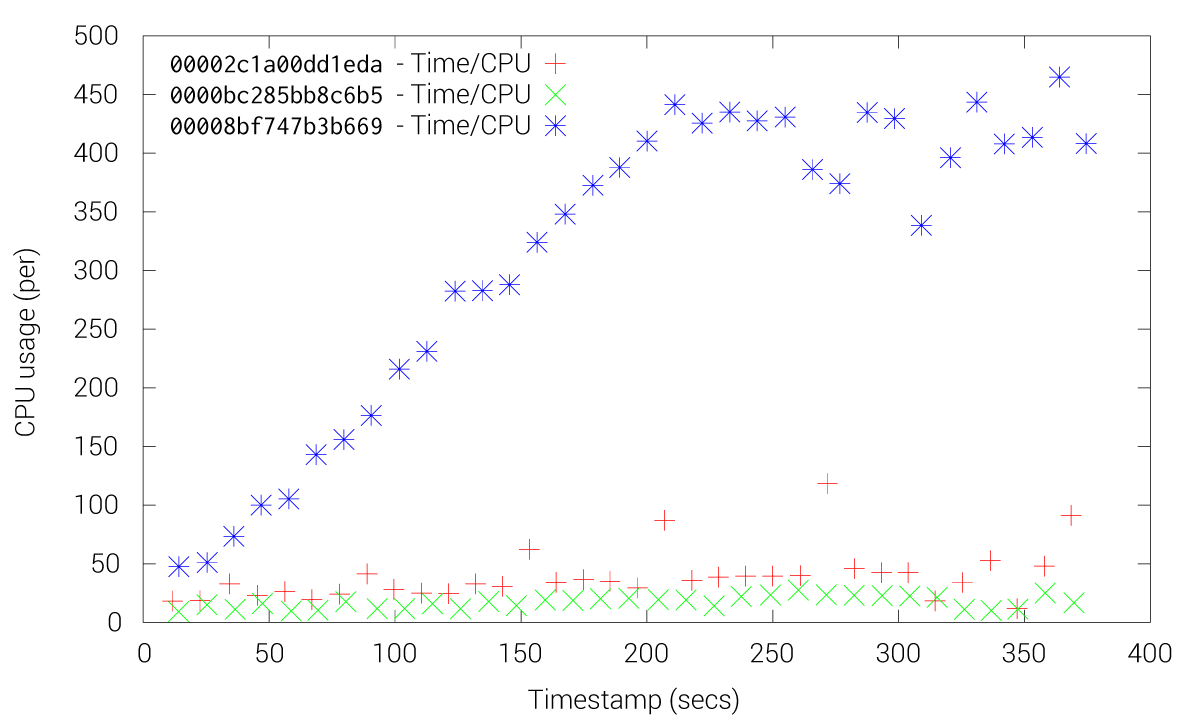

These figures show how etcd friction is drastically reduced when using the fleet-grpc approach. With the etcd approach, most of the fleet agents and engines experience high CPU usage, especially the engines. With our fleet-grpc approach, the fleet engine elected as the leader is the only one with high CPU usage. Accordingly, the fleet-grpc agents have low CPU usage, which saves resources to run user applications on those nodes.

This new implementation of fleet using gRPC offers multiple benefits such as:

Note: The fleet version used to run the above mentioned experiments contains some additional improvements which we might also push upstream as PRs to the main CoreOS fleet repository. If you want to play around with these changes, you can use the following fleet branch in our forked repository.
