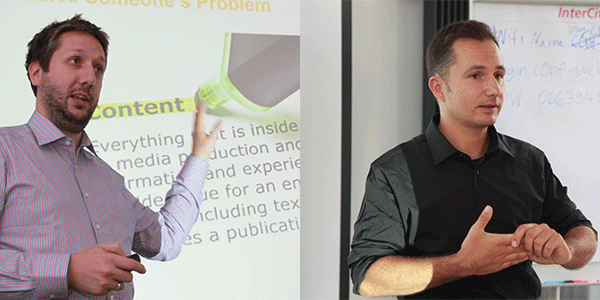

For my first post here on my new blog at WebMarketingSchool.com, I’ve been lucky enough to interview Fili Wiese and Kaspar Szymanski:

If you’re not familiar with them, they have been “on the other side of the fence” from the rest of us SEOs for the better part of the last decade, both having spent their recent careers at Google Search Quality, or Product Quality Operations, commonly known to the rest of us as the dreaded “webspam” team!

Now it’s not every day you get the opportunity to speak to not just one but TWO recently “retired” members of the Google Webspam team, so I took advantage of that with the following questions:

Hi Guys,

First off, thanks for taking the time to let me ask you some questions about your history at Google. For the readers’ sake, could you fill us in on what you did at Google, for how long, and when you left?

Hello Martin, thanks for having us. The two of us, Kaspar Szymanski and Fili Wiese, were with PQO (Product Quality Operations) at Google for approximately 15 years combined. We’ve done and seen it all: web spam, link spam, reconsiderations, Google webmaster guidelines, webmaster outreach and so much more. We have a long history of working together and, as the opportunity presented itself, we decided to continue doing what we’re best at: helping webmasters and site owners get the maximum potential out of their websites, as SEO consultants. That is what we do these days 🙂

OK – onto some questions – Just to clarify for the readers, I’ve been allowed to ask any questions I like, and am publishing the responses in their full format from both Kaspar and Fili where appropriate.

Google’s Structure

A lot of people are interested in the structure of how Google’s organic teams function. Could you explain the difference between search quality and web spam, and what you guys do generally?

The web spam engineering team and the manual spam fighting group, where we gained our experience, work hand in hand on the quality of search. The general objective of the engineering team is to develop and improve scalable, global answers to web spam. Manual spam fighters look after all the bits and pieces of spam that get through under the radar and any new trends that pop up. That is what we have been doing, although we cannot go into great detail for obvious reasons. It has been great fun and we highly recommend the team and the professional challenge to anyone.

How many people work in web spam globally, and do you have people for every language yet? Or will you ever have people for every language?

We cannot disclose the exact number but we can share this much: the web spam team never rests. There are several locations around the globe, and at any given time there are web spam folks somewhere taking care of the quality of Google Search. That, and the diversity of the team, gives you a bit of the feeling of a global village. Working on web spam at Google gives you a unique opportunity to meet people with very exciting and diverse backgrounds. Web skills aside, we had all kinds of folks at Google in our time, like kite surfers (a lot of them), marathon runners, scuba divers, boat skippers, sommeliers, combat pilots, even former submarine captains! It’s a very competitive, exciting group of people to work with :)

As for language coverage, we were not part of the long term planning team, hence we cannot predict whether all of the 6,500 languages spoken worldwide will be covered by individual native speakers one day. That said, the team and web spam fighting are quite language agnostic. At the end of the day, everyone at Search Quality understands source code.

How much interaction is there between your two teams and the team that is responsible for building and refining new algorithms, i.e. how good is the feedback loop?

From our experience, this collaboration is great and the engineers receive constant feedback through different channels from the manual web spam fighting team. At the end of the day the goals of the two teams are the same: fight web spam so that the user gets the best possible result.

How much interaction is there between the ad sales guys (AdWords, AdSense) and the natural search guys? For instance, have you been in a situation where you’ve been asked if it’s possible to adjust rankings for commercial reasons?

We tried to debunk that particular industry myth many times, even recently in a guest post on State of Search. Commercial motives have no impact on natural search results, period. Anyone who says anything else really does not know.

Having said that, what does happen is people move between teams within Product Quality Operations, which is the group caring for Google product quality or Google in general. This is one of the great things about working for Google: you get plenty of career opportunities to grow and develop skills.

I’m presuming it’s not possible for the advertising guys to have any influence in these matters, but can you absolutely confirm that?

In the seven plus years each of us has been with Google, we have never experienced this being possible. Anyone who tells you anything different is, in our opinion, mistaken.

Negative SEO & The Penguin Update

Prior to the Penguin update did you guys have frequent issues with negative SEO attacks?

Perceived negative SEO was escalated now and then, but only a handful of times did it turn out to be an actual negative SEO attempt, and those were not very successful. Negative SEO is generally overrated, and the methods discussed at times are often not even legal under the laws of many countries. On top of that, there are easy countermeasures if a potential negative SEO attempt seems imminent, for example using the Disavow Link Tool.

Subsequent to the Penguin update, did you guys have an increased problem with negative SEO attacks?

Not that we are aware of.

Lots of spammy links have very similar footprints, for example profile links on popular forums, comment spam on WordPress blogs etc. Do you actively discount the value of links that match these common footprints?

You are right, low quality boilerplate links can be detected rather easily and as such are not likely to have any lasting value for the sites they are targeting. Anybody hoping to build lasting authority in Google Search results based on such an old school link spam strategy is ill advised.

If Google is serious about combating web spam, why don’t you just employ some really good blackhats to learn the trade from their perspective?

Google is serious about tackling web spam and they do hire top notch people, without necessarily bragging about it all the time 😉

On site Content and the Panda Updates

We get told regularly that unique content is key, and it’s certainly got harder to rank pages with little or no content in the last few years. If a page has no unique value but an overwhelming link profile, is it still possible to rank for competitive terms?

Probably not for long. If you’re willing to burn a site you can try, but this is not a strategy a legitimate business can or should follow, and we only consult legitimate businesses. After leaving the manual web spam team at Google we have been asked time and again whether our approach to black hat techniques has changed in any way. The answer is no! We still believe that long term white hat is the only way for legitimate site owners to serve their users and build their brand and their user base. Anyone who’s convinced otherwise has been warned and we won’t be able to help them.

If a site has a large amount of duplicate content (externally duplicate) is it a rational recommendation to noindex pages with a large percentage of that type of content? If so, what would that percentage be?

Potentially, yes. There’s no percentage that we can share, or that would even make sense, as every site is different and the internet is in constant flux. Instead we suggest you use common sense. Put the user cap on for a moment and ask yourself: what’s the unique business proposition of a particular site? What makes it different from, and better than, the next site in the same vertical? Answering that question first should be the foundation of any long term SEO and marketing strategy.

Freshness: Do you need fresh content to rank a small/average sized site with a small back link profile? Is it better, or is it irrelevant?

No. It depends on the relevancy of the niche and topic. And of course also on the quality of the back links. Think of highly specialized technology or history topics for example. Quality always wins over continuously lean content updates. Think quality, not quantity.

Other Quality Signals:

We read a lot about correlation between social signals and rankings. Can you confirm whether tweets, likes or Plus Ones have any direct (i.e. non-secondary) influence in the ranking algorithm?

To some extent, yes. There’s an interesting Matt Cutts video on that topic, which we’d recommend watching. That said, we tend to think of social signals in a different way. If you think about it, tweeting about a new piece of content can help to spread the word and get it crawled in the first place. In that sense, there’s an obvious impact from the social signals allowing great content to be found, crawled and indexed faster.

If a site has lots of rich media that’s unique to that site, i.e. video content that is self hosted, is that considered a trust or relevance factor?

Rich media can be great content. Users tend to love it and Google really cares about their users. Of course it can help to provide a ton of quality content, be it written or rich media. Site owners should however not obsess over perceived trust or relevance factors Google might value or not. Instead, it’s best to serve the target audience the content they need. And make sure your content is crawlable for search bots.

At the moment, would authorship signals help with the perceived trust of a website?

Absolutely! It gets you more real estate in the SERPs and helps to build and maintain authority in your niche. Having an author image attached to your quality content can greatly enhance the perceived quality of a source, not to mention it has the potential to increase overall CTR.

If they aren’t currently considered, can you foresee a point in the future where they might be? What would then be the pivot for that to be used, simply breadth of usage?

Anything that is available but maybe not widely used at this point in time, like certain structured data schemas from schema.org for example, can give a webmaster the first mover advantage once a given schema reaches a critical point.

The Disavow Tool:

Are link disavows currently fully automated in your systems – or does every request have a manual review?

Think about it for a moment: thousands, potentially more, disavow link files, each with potentially hundreds of thousands of patterns, reviewed manually? That is simply not a scalable approach. Google is all about speed and automation and the disavow link tool is no exception there.
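For readers who have not used the tool, the file it accepts is plain text: one URL per line, `domain:` entries to cover a whole domain, and `#` lines as comments. The following is a minimal sketch, not anything Kaspar or Fili described, of how such a file could be assembled in Python. The function name and the `.example` hosts are hypothetical and used purely for illustration.

```python
from urllib.parse import urlparse


def build_disavow_lines(urls, whole_domains=()):
    """Return the lines of a disavow file as a list of strings.

    urls          -- individual link URLs to disavow
    whole_domains -- hostnames to disavow entirely via "domain:" entries
    """
    lines = ["# Disavow file generated for illustration"]

    # Domain-wide entries come first, de-duplicated and sorted.
    domains = sorted({d.lower() for d in whole_domains})
    lines.extend(f"domain:{d}" for d in domains)

    # Individual URLs follow; skip repeats and anything already
    # covered by a domain-wide entry.
    seen = set()
    for url in urls:
        host = (urlparse(url).hostname or "").lower()
        if host in domains or url in seen:
            continue
        seen.add(url)
        lines.append(url)
    return lines


if __name__ == "__main__":
    example = build_disavow_lines(
        [
            "http://spam.example/page1",
            "http://forum.example/profile?id=1",
            "http://forum.example/profile?id=1",
        ],
        whole_domains=["spam.example"],
    )
    print("\n".join(example))
```

Listing a whole domain once keeps the file short when many links come from the same low quality source, which is the pattern-style usage the answer above alludes to.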

If a site with low trust factors crops up in lots of disavow requests, would that eventually damage that site’s ability to rank?

If a site adds little value to the web, its ranking would most likely not be damaged by such signals, as its rankings should be low to start with. Whether disavow links tool data may become a source for ranking signals is a great question for the Google folks still involved in the development process. However, we would not be surprised if Google decided in the future to start using this data.

Bonus Questions

WTF is it with this whole “not provided” thing anyway! Can you really, hand on heart, argue it’s to protect users’ privacy, and not to bottleneck the efficiency of SEOs?

We cannot, because we only share our personal opinions; we don’t speak on behalf of Google anymore. That said, we would argue that a) Google has shown tremendous support for webmasters and site owners by increasing its official communication channels (YouTube Webmaster Channel, weekly Webmaster Office Hours) and sharing more and more data (manual spam action checker, search queries reports, spam link examples etc.), and b) Google was always open about caring most about its users: not site owners, but the people who use Google products, first and foremost Google Search users like you and me. Having said that, we feel and share your pain.

Thanks guys for taking the time to answer my questions!

Kaspar & Fili are organising an SEO workshop:

London, October 8th 2013.

During the workshop they will be discussing penalties, algorithms, long term link building, on page SEO and more. Together with Jonas and Ariel (colleagues and also ex-Googlers), they represent more than 20 years of combined web spam fighting experience from inside Google.

If that’s the kind of thing you might be interested in, I recommend you check out their program for the day (external link) and sign up as soon as possible to secure your spot. I’ll be going, to carry on grilling them!